System Development Life Cycle (SDLC)

System Development Life Cycle—Steps in Analysis and Design

The purpose of this chapter is to build on the Tiers of Software Development and to provide a framework for the life cycle of most software development projects. This framework is needed before explaining the details of the user interface and the analysis tools required to bring software to fruition. Another way of viewing this chapter, then, is as a way to see how the tiers of development actually interface with each other and which specific events and tools are used to complete each step successfully. This chapter consists of two sections. The first explains the notion that software goes through three basic phases or cycles, namely Development, Testing, and Production. The second section provides an example using a seven-stage method called “The Barker Method,” which represents one approach to defining the details of each of the three cycles.

No matter which methodology is used to design a system, including its related database, the key elements of the methodology usually include, at a minimum, business process reengineering and the life cycle for design and implementation. Business process reengineering (BPR), simply defined, is the process used either to rework an existing application or database to make improvements, or to account for new business requirements. BPR will be discussed in more detail in Chapter 13. The life cycle of the database includes all steps (and environments) necessary to support the database’s design, its final implementation, and its integration with application programs. Irrespective of which design methodology is used, analysts/designers will find that system development projects usually include the following generic steps:

1. Determine the need for a system to assist a business process

2. Define that system’s goals

3. Gather business requirements

4. Convert business requirements to system requirements

5. Design the database and accompanying applications

6. Build, test, and implement the database and applications

This traditional method is the most commonly used design approach and includes at least three primary phases:

1. Requirements analysis

2. Data modeling

3. Normalization

During the first phase, requirements analysis, the development and design team conducts interviews to capture all the business needs related to the proposed system. The data modeling phase consists of creating the logical data model that will later be used to define the physical data model, or database structures. After the database has been modeled and designed, the normalization phase is applied to eliminate, or reduce as much as possible, redundant data. The specifics of how this is accomplished will be detailed in the chapters on the tools of analysis. Below is a more specific description of the activities included in the Development, Testing, and Production cycles of the SDLC.
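The normalization idea can be illustrated with a minimal Python sketch. It assumes hypothetical order data in which each customer's name is repeated on every order row. Splitting the rows into a customers table and an orders table stores each name once. The orders then keep only a reference (foreign key) to the customer. The `normalize` function and field names are illustrative only.

```python
def normalize(flat_rows):
    """Split flat order rows into a customers table and an orders table.

    Customer names are stored once per customer_id instead of on every order.
    """
    customers = {}
    orders = []
    for row in flat_rows:
        cid, name = row["customer_id"], row["customer_name"]
        # The same customer spelled two ways is exactly the inconsistency
        # that redundant storage invites; flag it rather than guess.
        if cid in customers and customers[cid] != name:
            raise ValueError(f"conflicting names for {cid}: {customers[cid]!r} vs {name!r}")
        customers[cid] = name
        # Orders keep only a reference (foreign key) to the customer.
        orders.append({"order_id": row["order_id"], "customer_id": cid, "amount": row["amount"]})
    return customers, orders


# Hypothetical data: the customer's name is repeated on every order row.
flat_orders = [
    {"order_id": 1, "customer_id": "C1", "customer_name": "Acme", "amount": 250.0},
    {"order_id": 2, "customer_id": "C1", "customer_name": "Acme", "amount": 75.0},
    {"order_id": 3, "customer_id": "C2", "customer_name": "Globex", "amount": 120.0},
]

customers, orders = normalize(flat_orders)
print(customers)  # {'C1': 'Acme', 'C2': 'Globex'}
```

After the split, renaming a customer means changing one entry rather than every order row that mentions it.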

Development

The Development life cycle includes four overall components. From this perspective, “development” consists of all the steps necessary to create the application: feasibility, analysis, design, and the actual coding. Feasibility represents the tasks necessary to determine whether the software project makes business sense. Most organizations incorporate a Return-On-Investment (ROI) analysis into this step. ROI consists of the financial calculations that determine whether the project will provide the necessary monetary returns to the business. Focusing solely on monetary returns can be a serious pitfall, however, since many benefits can be realized as non-monetary returns (Langer, 2005). Feasibility often contains what is known as a high-level forecast or budget, usually expressed as a range. The “high” represents the “worst case” scenario on cost, and the “low” represents the best case, or lowest cost. The hope, of course, is that the actual cost and timetable will fall somewhere between the high and the low. But feasibility goes beyond the budget; it also represents whether the business believes that the project is attainable within a specific timetable. So, feasibility is a statement of both financial and business objectives, and an overall belief that the cost is worth the payback.
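As a rough illustration, ROI can be checked against both ends of the high/low cost forecast. This Python sketch uses the simple formula ROI = (benefit − cost) / cost. The benefit and cost figures are hypothetical, and real feasibility studies often use discounted cash flows instead. As noted above, non-monetary returns do not appear in this calculation at all.

```python
def roi(benefit: float, cost: float) -> float:
    """Simple return on investment: net monetary gain divided by cost."""
    if cost <= 0:
        raise ValueError("cost must be positive")
    return (benefit - cost) / cost


# Hypothetical feasibility figures, not taken from the text.
expected_benefit = 500_000.0
forecast = {"low (best case)": 300_000.0, "high (worst case)": 450_000.0}

for label, cost in forecast.items():
    print(f"{label}: ROI = {roi(expected_benefit, cost):.1%}")
# low (best case): ROI = 66.7%
# high (worst case): ROI = 11.1%
```

Evaluating the worst-case ("high") cost first is the conservative choice. If the project still makes business sense there, the budget side of feasibility is on firmer ground.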

Analysis is the step of creating the detailed logical requirements or, as I will define in Chapter 4, the architecture of the applications and database. As we will see in this book, numerous analysis tools are used throughout each phase of analysis. Ultimately, the analyst creates a requirements document that outlines all of the needs, so that coders can work from it without going back to the users directly for clarification. Analysis, as an architectural responsibility, is very much based on a mathematical progression of predictable steps. These steps are quite iterative in nature, so practitioners must understand that this vital step in Development is completed gradually. Another aspect of the mathematics of analysis is decomposition. Decomposition, as we will see, establishes the smaller components that make up the whole. These components are like the bones, blood, and muscles of the human body that ultimately make up what we physically see in a person. Once a system is decomposed, the analyst can be confident that the “parts” that comprise the whole are identified and can be reused throughout the system as necessary. These decomposed parts are called “objects,” and they are the subject of object-oriented analysis and design. Thus, working with users ultimately leads to the creation of parts, known as objects, that act as interchangeable components and can be used whenever needed. One should think of objects like the interchangeable parts of a car, sometimes called “standard” parts, that can be reused in multiple models. The benefits are obvious, and the objective is the same with software: the more reusable the components, the more efficient and cost-effective the system.
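The "standard parts" idea can be sketched in Python, assuming a hypothetical decomposition of customer and supplier data. The analysis identifies an address as a common part. That part is defined once as an `Address` object and then reused by both a `Customer` and a `Supplier`. The class names are illustrative, not taken from the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Address:
    """A small 'standard part' identified by decomposing customer and supplier data."""
    street: str
    city: str
    postal_code: str

    def mailing_label(self) -> str:
        return f"{self.street}, {self.city} {self.postal_code}"


@dataclass
class Customer:
    name: str
    billing_address: Address   # reuses the Address part


@dataclass
class Supplier:
    name: str
    remit_to: Address          # the same part reused in a different "model"


acme = Customer("Acme", Address("1 Main St", "Springfield", "12345"))
globex = Supplier("Globex", Address("9 Elm Ave", "Shelbyville", "67890"))
print(acme.billing_address.mailing_label())  # 1 Main St, Springfield 12345
print(globex.remit_to.mailing_label())       # 9 Elm Ave, Shelbyville 67890
```

Any change to address handling, such as a new label format, is made once in `Address` and applies everywhere the part is used.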

Design is far less logical than analysis, but it is a far more creative step. Design is the phase that requires the physical decisions about the system. These decisions range from which programming language to use and which vendor database to select (Oracle, Sybase, or DB2, for example) to how screens and reports will be identified. The design phase can also include decisions about hardware and network communications, or the topology. Unlike analysis, design shifts away from a mathematical and engineering focus toward one that serves the user’s view. The design process is perhaps the most iterative. It can require multiple sessions with users, using a trial-and-error approach, until the user interface and product selection are complete. Design often requires experts in database design and screen architecture. It also calls for professionals who understand the performance needs of network servers and the other hardware components required by the system.

Coding represents another architectural as well as mathematical approach. However, I would suggest that mathematics is not the most accurate description of a coding structure; rather, coding is about understanding how logic operates. This branch of mathematics is known as Boolean algebra, or the mathematics of logic. Boolean algebra is the basis of how software communicates with the real machine, and software is the abstraction that allows us to talk with the physical hardware. Coding, then, is how the structure of the program is actually developed. Much has been written about coding styles and formats; the best known is called “structured” programming. Structured programming was originally developed so that programmers would create code that was cohesive, that is, self-reliant. Self-reliance in coding means that the program is self-contained because all of the logic relating to its tasks is within the program. The opposite of cohesion is coupling. Coupling describes programs that are reliant on each other, meaning that a change to one program necessitates a change in another. Coupling is viewed as dangerous from a maintenance and quality perspective, simply because changes cause problems in other reliant, or “coupled,” systems. More details on the practice of cohesion and coupling are covered in Chapter 11. The relationship of coding to analysis can be critical, given that the decision on what code will comprise a module may be made during analysis rather than during coding.
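A small Python contrast may help, assuming a hypothetical tax calculation. In the coupled version, the calculation depends on data owned and populated by another routine, `load_settings`. The calculation breaks if that routine has not run, or if its data format changes. The self-contained version receives everything it needs as parameters, which matches the sense of cohesion described above. All names here are illustrative.

```python
# Coupled version: the calculation depends on state owned by another routine.
_settings = {}


def load_settings() -> None:
    """Another 'program' that must run first and whose format the caller relies on."""
    _settings["tax_rate"] = 0.08


def total_with_tax_coupled(subtotal: float) -> float:
    # Fails with KeyError if load_settings() has not run, and must change
    # whenever load_settings() renames or restructures its data.
    return subtotal * (1 + _settings["tax_rate"])


# Self-contained version: everything the logic needs arrives as parameters,
# so it can be tested, reused, and changed without touching other routines.
def total_with_tax(subtotal: float, tax_rate: float) -> float:
    return subtotal * (1 + tax_rate)
```

Calling `total_with_tax_coupled(100.0)` before `load_settings()` raises a `KeyError`. By contrast, `total_with_tax(100.0, 0.08)` works on its own, whatever else has or has not run.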

Testing

Testing can have a number of components. The first form of testing is called program debugging. Debugging is the process by which a programmer ensures that the code in his/her program executes as designed. Debugging, therefore, should be carried out by the programmer, as opposed to a separate quality assurance group. However, it is important to recognize that debugging does not ensure that the program performs as required by end users. It only confirms that the code executes and does not abort during execution. What does this mean? Simply that a programmer should never pass to quality assurance a program that does not execute. At a minimum, the programmer should show that it runs without aborting under all conditions. Once again, this process does not ensure that the results produced by the program are correct or that they meet the original requirements set forth by users.
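The limits of debugging can be shown with a short Python sketch. It assumes a hypothetical `average_order_value` function and a `smoke_run` helper. The helper exercises the function across edge cases and records only whether anything crashed. It says nothing about whether the results are correct, which remains a question for quality assurance.

```python
def average_order_value(amounts):
    """Mean of a list of order amounts; returns 0.0 for an empty list."""
    if not amounts:
        return 0.0  # guard added while debugging to avoid ZeroDivisionError
    return sum(amounts) / len(amounts)


def smoke_run(func, cases):
    """Debugging-style check: call func on every case and record any crash.

    An empty result means only that nothing aborted. It says nothing about
    whether the returned values are what users actually require.
    """
    crashes = []
    for case in cases:
        try:
            func(case)
        except Exception as exc:  # record, don't stop, so every case is exercised
            crashes.append((case, type(exc).__name__))
    return crashes


edge_cases = [[], [10.0], [10.0, 20.0], [-5.0, 5.0]]
print(smoke_run(average_order_value, edge_cases))           # []
print(smoke_run(lambda xs: sum(xs) / len(xs), edge_cases))  # [([], 'ZeroDivisionError')]
```

Whether 0.0 is the right answer for an empty list is not something the smoke run can decide. That is a requirements question, which is why debugging does not replace quality assurance.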

Once a program has been “debugged,” it should be sent through the quality assurance process. Today, most large organizations recognize that quality assurance needs to be performed by non-programming individuals. As a result, organizations create separate quality assurance organizations that do nothing but test the correctness and accuracy of programs. Quality assurance organizations typically accomplish this through what is known as Acceptance Test Planning. Acceptance Test Plans are designed from the original requirements. This allows quality assurance personnel to base testing on the users’ original requirements rather than on what might have been interpreted from them. For this reason, Acceptance Test Planning is typically performed during the analysis and design phases of the life cycle but executed during the Testing phase. Acceptance Test Planning also includes several system-level testing activities:

- stress and load testing (ensuring that the application can handle larger demands or more users);
- integration testing (whether the application communicates and operates appropriately with other programs in the system);
- compatibility testing (ensuring that applications operate on different types of browsers or computer systems).

Testing, by its very nature, is an iterative process that can often create “loops” of redesign and programming. It is important to recognize that acceptance testing has two distinct components: first, the design of the test plans, and second, the execution of those plans.
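The two components of acceptance testing can be kept apart in code. This minimal Python sketch assumes a hypothetical shipping-fee requirement, "REQ-7". The plan is designed from that requirement as data, which could be done during analysis and design. A separate function executes the plan against the program later, during Testing. The requirement, fee values, and function names are illustrative.

```python
from dataclasses import dataclass
from typing import Any


@dataclass(frozen=True)
class AcceptanceTest:
    requirement_id: str  # traces each test back to a user requirement
    description: str
    inputs: tuple
    expected: Any


def design_plan() -> list:
    """Component 1, done during analysis/design: derive tests from requirements."""
    return [
        AcceptanceTest("REQ-7", "Orders of 100.00 or more ship free", (100.00,), 0.0),
        AcceptanceTest("REQ-7", "Orders under 100.00 pay a flat fee", (99.99,), 7.50),
    ]


def execute_plan(plan, program) -> list:
    """Component 2, done during Testing: run each planned test against the program."""
    return [(t.requirement_id, t.description, program(*t.inputs) == t.expected) for t in plan]


def shipping_fee(order_total: float) -> float:
    """Hypothetical program under test."""
    return 0.0 if order_total >= 100.00 else 7.50


for req, desc, passed in execute_plan(design_plan(), shipping_fee):
    print(f"{req} {'PASS' if passed else 'FAIL'}: {desc}")
```

Consider a version that uses `>` instead of `>=`. It runs without error, so it would pass debugging, yet it fails the first planned test. That is the kind of gap the acceptance plan is meant to catch.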

Production

Production is synonymous with the “going-live” phase. Ultimately, Production must ensure the successful execution of all aspects of a system’s performance. During Production, several support arrangements must be established:

- how problems will be serviced;
- what support staff will be available, and when;
- how inquiries will be responded to and scheduled for fixing.

This component of Production may initiate new Development and Testing cycles because of redesign needs (or misinterpreted user needs). When that happens, it means that the original requirements were not properly translated into system realities.

In addition, Production as a Life Cycle includes other complex issues:

1. Backup, recovery, and archival

2. Change control

3. Performance fine-tuning and statistics

4. Audit and new requirements

Backup, Recovery, and Archiving

Operational backup should be defined during the Development phases; however, some backup requirements inevitably need to be modified during Production. This happens because the time it takes to complete a data backup is difficult to predict. The speed at which data can be copied to another medium is not an exact science. It depends on how complex the data selection is and on the method used to copy the data to another location and/or medium. The speed also relies heavily on several factors:

- disk input/output speed;
- network throughput (the speed of the communication network);
- database backup algorithms;
- the actual intervals between backup cycles.

Why does this matter? It matters because backups often require systems to be “off-line” until they complete, meaning that the system is in effect not operational. Thus, the longer the backup process, the longer the system is off-line. A common example of this dilemma is an overnight process that must complete before the beginning of the next morning. If the backup will take longer than the allowed time, analysts/designers need to find a way to condense the timeline. Typical alternatives include limiting the data that is actually backed up, or using multiple devices to increase throughput.
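The overnight-window problem reduces to simple arithmetic: duration = data volume / throughput. This Python sketch compares that duration with an allowed window for the two alternatives mentioned above. The data size, throughput, and six-hour window are hypothetical. The sketch also assumes throughput scales linearly with the number of devices, which is an idealization.

```python
def backup_hours(data_gb: float, mb_per_second: float, devices: int = 1) -> float:
    """Estimated backup duration in hours.

    Assumes throughput scales linearly with the number of devices, which real
    disk I/O, network, and database backup algorithms rarely achieve exactly.
    """
    seconds = (data_gb * 1024) / (mb_per_second * devices)
    return seconds / 3600


WINDOW_HOURS = 6.0  # hypothetical overnight window, e.g. midnight to 6 a.m.

options = {
    "full backup, 1 device": backup_hours(2000, 80),
    "full backup, 3 devices": backup_hours(2000, 80, devices=3),
    "changed data only (400 GB), 1 device": backup_hours(400, 80),
}
for name, hours in options.items():
    verdict = "fits" if hours <= WINDOW_HOURS else "exceeds window"
    print(f"{name}: {hours:.2f} h ({verdict})")
# full backup, 1 device: 7.11 h (exceeds window)
# full backup, 3 devices: 2.37 h (fits)
# changed data only (400 GB), 1 device: 1.42 h (fits)
```

In practice, the throughput figure should come from measured backups in Production rather than from vendor specifications. This is one reason backup requirements tend to be revisited after go-live.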

Recovery, on the other hand, is about testing the quality of the backed-up data and determining whether the stored data can be recovered into the operational system. So, the first aspect is to confirm that the data needed to bring the system “back” can actually be restored. One hopes that recovery will never be necessary. Still, the most glaring quality assurance exposure is not knowing whether the data we think we have can actually be restored to operational form. For example, it is one thing to back up a complex database; it is another to restore that data so that the key fields and indexes work appropriately after restoration. The only assurance for this is to actually perform a restore in a simulation. Simulations take analysis and design talent, and they need to be conducted at regular intervals to ensure that backup data integrity is preserved.
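A restore simulation can be sketched with Python's built-in `sqlite3` module. SQLite is chosen here only because it is self-contained; the text does not name a database. The sketch copies a hypothetical live database into a scratch database with `Connection.backup`. It then verifies the copy with `PRAGMA integrity_check`, compares row counts, confirms the index exists, and checks that a key lookup works. The live database itself is never touched.

```python
import sqlite3


def build_live_db() -> sqlite3.Connection:
    """Hypothetical 'live' database with a keyed table and an index."""
    live = sqlite3.connect(":memory:")
    live.execute("CREATE TABLE customer (id INTEGER PRIMARY KEY, email TEXT, name TEXT)")
    live.execute("CREATE INDEX idx_customer_email ON customer(email)")
    live.executemany("INSERT INTO customer (email, name) VALUES (?, ?)",
                     [("a@example.com", "Acme"), ("g@example.com", "Globex")])
    live.commit()
    return live


def simulate_restore(live: sqlite3.Connection) -> dict:
    """Restore a backup into a scratch database and verify it; live is untouched."""
    restored = sqlite3.connect(":memory:")  # simulation target, not the live system
    live.backup(restored)                   # copies every page of the live database
    count = "SELECT COUNT(*) FROM customer"
    report = {
        "integrity": restored.execute("PRAGMA integrity_check").fetchone()[0],
        "rows_match": live.execute(count).fetchone() == restored.execute(count).fetchone(),
        "indexes": sorted(r[0] for r in restored.execute(
            "SELECT name FROM sqlite_master WHERE type = 'index'")),
        # A lookup through the indexed key field must still return the right row.
        "key_lookup": restored.execute(
            "SELECT name FROM customer WHERE email = ?", ("g@example.com",)).fetchone(),
    }
    restored.close()
    return report


print(simulate_restore(build_live_db()))
```

A production version would restore from the actual backup media onto a separate server. The checks would mirror the system's own key fields and indexes.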

Restoration and backup become even more complex when portions of the data are what is called “archived.” Archived data is data that is deemed no longer necessary in the “live” system but that may be required under some circumstances. So, data that is determined to be ready for archival needs to be backed up in a particular way. It must be restorable not to the live system but to a special system that allows the data to be queried and accessed as if it were live. The most relevant example that I can provide here is accounting data from past periods that is no longer needed in the operational system but may need to be accessed for audit purposes. In this example, it might be necessary to restore a prior year’s accounting data to a special system that simulates the prior year in question but has no effect on the current system.
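The accounting example can be sketched with Python's `sqlite3` module. It uses two separate in-memory databases that stand in for the live system and a hypothetical archive system. Prior-year ledger rows are copied to the archive and committed there first. Only then are those same rows removed from the live system, so audit queries run against the archive without affecting current data. The table layout and cutoff year are illustrative.

```python
import sqlite3

SCHEMA = "CREATE TABLE ledger (entry_id INTEGER PRIMARY KEY, fiscal_year INTEGER, amount REAL)"


def archive_prior_years(live, archive, cutoff_year):
    """Move ledger rows older than cutoff_year from the live system to the archive."""
    rows = live.execute(
        "SELECT entry_id, fiscal_year, amount FROM ledger WHERE fiscal_year < ?",
        (cutoff_year,),
    ).fetchall()
    with archive:  # commit the archive copy first...
        archive.executemany("INSERT INTO ledger VALUES (?, ?, ?)", rows)
    with live:     # ...then delete exactly those rows, so a failure duplicates, not loses
        live.executemany("DELETE FROM ledger WHERE entry_id = ?", [(r[0],) for r in rows])
    return len(rows)


live = sqlite3.connect(":memory:")
archive = sqlite3.connect(":memory:")  # stands in for the separate archive system
for conn in (live, archive):
    conn.execute(SCHEMA)
live.executemany("INSERT INTO ledger (fiscal_year, amount) VALUES (?, ?)",
                 [(2022, 100.0), (2022, 50.0), (2023, 75.0), (2024, 20.0)])
live.commit()

moved = archive_prior_years(live, archive, cutoff_year=2024)
# Audit queries run against the archive; the live ledger keeps only current data.
print(archive.execute(
    "SELECT fiscal_year, SUM(amount) FROM ledger GROUP BY fiscal_year ORDER BY fiscal_year"
).fetchall())  # [(2022, 150.0), (2023, 75.0)]
print(live.execute("SELECT COUNT(*) FROM ledger").fetchone()[0])  # 1
```

The archive keeps the same schema as the live ledger. This is what lets prior-year data be "queried and accessed as if it were live."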

The Barker CASE Method

Now that we have covered the essential tools that can be used to do analysis and design, it is worthwhile to look at a strategy, or CASE method. One of the most popular approaches is known as the Barker Seven Phase CASE Method. The seven phases provide a step-by-step approach that shows where an analyst/designer uses the tools we have discussed. The phases are as follows: Strategy, Analysis, Design, Build, Documentation, Transition, and Production. The sections below provide an in-depth description of each of these phases.

Strategy

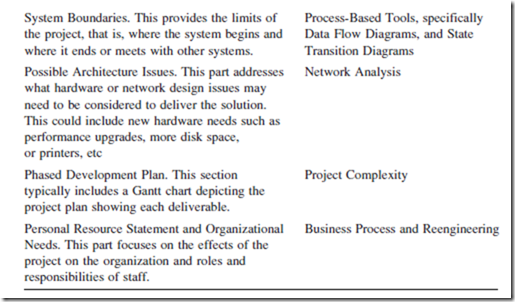

Strategy tends to include two major components: basic process models and data flow models that can be used to confirm an understanding of the business objectives, processes, and needs. The deliverable is what Barker calls a “Strategy Document,” which can be correlated to what I have previously defined as a business specification. However, unlike a pure business specification, the Strategy Document also includes an estimated budget, delivery schedule, project personnel, and any constraints and development standards that are required. Therefore, the Strategy Document contains all of the high-level issues and allows management to understand the objectives, timeframe, and budget limits of the project. It is also consistent with decomposition and sets the path to developing a more detailed specification. Below is an example of the Barker Strategy Document components:

Delivering the Strategy Document

Barker’s method provides a number of detailed steps to complete the Strategy Phase:

Project Administration and Management. This is defined as an ongoing process that occurs throughout the project. It can be related to a form of project management including reporting, control, quality assurance and various administrative tasks. The deliverable is typically in the form of progress reports, plans, and minutes of project meetings.

Scope the Study and Agree on Terms of Reference. This is the first step toward agreeing on the actual objectives, constraints, and deliverables. It also covers the estimated number of interviews and how they will be conducted (for example, individually or in JAD sessions), as well as specific staff assignments.

Plan for Strategy Study. This is a detailed plan for identifying specific staff resources and schedules for meetings.

Results of Briefings, Interviews, and Other Information Gatherings. This step produces a number of outcomes, including a functional hierarchy (Object Diagram) and a rough Entity Relationship Diagram (ERD). It is the first high-level model depicting the form of the system’s architecture.

Model the Business. This step includes a more detailed model of the business flows including process flows, data flows, and a more functional ERD. It also includes a glossary of terms and business units that are involved with the system flow.

Preparation and Feedback Sessions and Completion of the Business Model. These are actually two steps: the initial planning for the feedback sessions, and the feedback sessions themselves. The first step prepares the outstanding issues from the strategy sessions for resolution and determines how the feedback sessions will be conducted with key users and stakeholders. The feedback sessions usually result in changes to the models and a move to the more detailed analysis phase of the project.

Recommended System Architecture. This task summarizes the findings of the strategy and recommends a system architecture based on the assumptions and directions from stakeholders. This includes available technologies, interfaces with existing systems, and alternative platforms that can be used.

Analysis

Analysis expands the Strategy Stage into details that ensure business consistency and accuracy. The Barker analysis stage is designed to capture all of the business processes that need to be incorporated into the project. Barker divides Analysis into two components: Information Gathering and Requirements Analysis.

Information Gathering: includes building a more detailed ERD called an Analysis ERD (equivalent to what I called a logical model), process and data flows, a requirements document, and an analysis evaluation. This step also includes an analysis of the existing legacy systems. Much of the information gathering is accomplished via interviews with users. As discussed in the next chapter, this is accomplished by understanding the user interface and determining whether to use individual and/or group analysis techniques.

Requirements Analysis: Once information gathering is complete, a detailed requirements analysis or specification document must be produced. It contains the relevant tools as outlined in my analysis document, namely the detailed PFDs, DFDs, ERDs, and Process Specifications. In the Barker approach, the requirements document may also include audit and control needs, backup and recovery procedures, and first-level database sizing (space needs).
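First-level database sizing is mostly multiplication. This Python sketch sums estimated rows × average row size for each entity. It then applies an assumed index overhead and compound growth over a few years. Every figure is a hypothetical placeholder; real sizing would use the vendor's storage rules and the volumes gathered in analysis.

```python
# Hypothetical entity estimates: (expected rows, average bytes per row).
ENTITY_ESTIMATES = {
    "customer": (50_000, 400),
    "orders": (1_000_000, 150),
    "order_line": (4_000_000, 80),
}


def first_level_size(estimates, index_overhead=0.30, annual_growth=0.20, years=3):
    """Rough space need in bytes: raw data, plus index overhead, plus compound growth.

    The overhead and growth rates are placeholder assumptions, not vendor figures.
    """
    raw = sum(rows * row_bytes for rows, row_bytes in estimates.values())
    return raw * (1 + index_overhead) * (1 + annual_growth) ** years


print(f"{first_level_size(ENTITY_ESTIMATES) / 1e9:.2f} GB")  # 1.10 GB
```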

Design

The Design Stage consists of the incorporation of physical appearances and other requirements that are specific to actual products, for example, coding and naming conventions. The typical design needs are screen layouts, navigation tools (menus, buttons, etc.) and help systems. The Design Stage must also ultimately achieve the agreed upon performance or service levels.

The ERD will be transformed into a physical database design. Detailed specifications will be translated into program modules, and the manual procedures needed to operate the system should be documented. For screens, reports, and the “bridges” that connect modules, various prototype designs can be used to show users how navigation and the physical “look and feel” of the user interface will work.
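The ERD-to-physical step can be sketched in Python with `sqlite3` as the target database. The sketch assumes a hypothetical logical model kept as a dictionary: each entity becomes a table with a surrogate key. A one-to-many relationship becomes a foreign key on the "many" side. This model format is invented for illustration and is not the format of any CASE tool.

```python
import sqlite3

# Hypothetical logical model: entities, attributes, and one-to-many parents.
LOGICAL_MODEL = {
    "customer": {"attributes": {"name": "TEXT NOT NULL"}, "parent": None},
    "orders": {"attributes": {"order_date": "TEXT", "amount": "REAL"}, "parent": "customer"},
}


def to_ddl(model):
    """Translate each entity into a CREATE TABLE statement with keys."""
    statements = []
    for entity, spec in model.items():
        columns = [f"{entity}_id INTEGER PRIMARY KEY"]
        columns += [f"{name} {sql_type}" for name, sql_type in spec["attributes"].items()]
        parent = spec["parent"]
        if parent:
            # A one-to-many relationship becomes a foreign key on the "many" side.
            columns.append(f"{parent}_id INTEGER NOT NULL REFERENCES {parent}({parent}_id)")
        statements.append(f"CREATE TABLE {entity} (\n  " + ",\n  ".join(columns) + "\n)")
    return statements


conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled
for ddl in to_ddl(LOGICAL_MODEL):
    print(ddl)
    conn.execute(ddl)
```

Physical choices that the logical model does not capture belong to this design step. Examples are column types, indexes, and whether to enforce referential integrity in the database.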

As previously discussed, Design is not a step that occurs without iteration. The Design Stage often iterates with analysis, where questions and suggestions from designers can raise issues about alternatives not considered during the analysis stage. The iterative cycle is best depicted by Barker’s diagram as shown in Figure 2.1.

What is critical about this design method is its interactive, interdependent relationship with non-application components such as network design, audit and control, backup and recovery design, data conversion, and system test planning.

Build Stage

The Barker Build Stage is defined as the coding and testing of programs. Much of this stage depends on the technical environment and the attributes of the programming environment, that is, Web, mainframe, mid-range, etc. The Build Stage involves the typical planning, design of the program structure, actual coding, program methodology (top-down, structured coding, etc.), version control, testing approaches, and test releases.

The most confusing aspect of the Build Stage is distinguishing program debugging from testing. Testing can also be done by programmers, but it is typically segregated into a separate function and group called Quality Assurance (QA). Debugging can be defined as a programmer’s ability to ensure that his/her code executes, that is, does not fail during execution. Quality Assurance, on the other hand, tests the ability and accuracy of the program to perform as required. In other words, does the program, although executing, produce the intended results? The current trend is to maintain separate QA departments and have programmers focus on coding. Much of the QA objective is to establish Acceptance Test Plans, as I outline in Chapter 12, and this concept is closely aligned with the Barker Build Stage.

User Documentation

This Stage in the Barker approach creates user manuals and operations documentation. Barker (1992) states that this documentation should be “sufficient to support the system testing task in the concurrent build stage, and documentation must be completed before acceptance testing in the transition stage” (p. 7–1).

The Barker User Documentation Stage is very consistent with my definition: significant parts of the documentation must be completed during the strategy and analysis stages, when the principal aspects of the system architecture are agreed upon with users and stakeholders. The format of user and operations documentation is always debatable; what matters most is its content rather than its format. Barker’s method suggests that documenters design this Stage with the user or “reader” in mind. Thus, understanding how such users and readers think and operate the system within their domains is likely to be key to choosing the most effective format for the document.

The user documentation should contain a full reference manual for every type of user. Tutorials and on-line help text are important components of today’s Web-based systems. User documentation should also include information on error messages, cross-references to related issues, and helpful hints. The operations documentation needs to cover day-to-day and periodic operations procedures. The operations guide includes execution procedures, backup, and the various maintenance processes necessary to sustain the system.

Transition Stage

Barker describes the Transition Stage as a pre-live process that ensures that acceptance testing is completed, hardware and software installation is done, and critical reviews or “walkthroughs” have been finished. Another aspect of the Transition Stage is to understand data conversion and its effects on whether the system is ready to go into production.
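One common way to judge whether a data conversion is ready for production is to reconcile the converted data against the legacy source. This Python sketch assumes hypothetical account records with an `account_id` key and a `balance` field. It compares record counts and a monetary control total, and it lists any keys that are missing from, or unexpected in, the converted data. These checks are illustrative; a real conversion would reconcile every critical field.

```python
from decimal import Decimal


def reconcile(legacy, converted, key="account_id", amount="balance"):
    """Compare record counts, control totals, and keys before go-live."""
    def total(rows):
        # Decimal avoids float rounding noise when comparing money totals.
        return sum((Decimal(str(r[amount])) for r in rows), Decimal("0"))

    legacy_keys = {r[key] for r in legacy}
    converted_keys = {r[key] for r in converted}
    return {
        "count_match": len(legacy) == len(converted),
        "total_match": total(legacy) == total(converted),
        "missing": sorted(legacy_keys - converted_keys),
        "unexpected": sorted(converted_keys - legacy_keys),
    }


def ready_for_production(report) -> bool:
    return (report["count_match"] and report["total_match"]
            and not report["missing"] and not report["unexpected"])


# Hypothetical extracts from the legacy system and the converted database.
legacy = [{"account_id": "A1", "balance": "100.10"}, {"account_id": "A2", "balance": "250.00"}]
converted = [{"account_id": "A1", "balance": "100.10"}]

report = reconcile(legacy, converted)
print(report)  # {'count_match': False, 'total_match': False, 'missing': ['A2'], 'unexpected': []}
print(ready_for_production(report))  # False
```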

Since the original publication of Barker’s work, users have become much more experienced with the Transition Stage, simply because they have gone through it more often. As a result, resistance to, and ignorance of, the importance of acceptance testing and validation do not occur as frequently as they used to. Still, the transition process is complex. The steps necessary to go live with any new system require careful integration with users and existing legacy applications.

Production

The Production Stage can be seen as synonymous with “go live.” It is the smooth running of the new system and all of its integrated components, including manual and legacy interfaces. During this Stage, IT operations and support personnel should be responsible for providing the necessary service levels to users, as well as for managing bug fixes on behalf of the programmers. Thus, the development staff acts as a backup for the production issues that occur.
