Data Warehousing

Data Warehousing

Introduction

This chapter focuses on how to extract information from database systems. This information will be used for decision support systems (DSS) purposes and typically be presented to the user in an on-line display format or on a report. As initially discussed in Chapter 13, the concept behind DSS is that it deals with data “after the fact,” meaning that the data is no longer in a production mode, but rather in a storage mode where it can be used for different forms of analytical processing. The major benefit of operating on processed data is that it cannot be changed and therefore can be accessed without concern for data integrity. Another salient issue is that because the data is not subject to change, it can be copied multiple times, allowing for some interesting performance improvements and “flattening” of the data stored in the relational database. The referential integrity that was attained in a production database does not maximize performance for the query of data for reporting purposes. The purpose of the chapter is to provide the details of creating complex warehouse systems.

In order to maximize the efficiency of accessing and analyzing data it is necessary to separate it from the production system. This is accomplished by creating copies of the data solely for decision support analysis. There are a number of different ways to do this separation. One way is data warehousing, where data is reformatted from one or more data sources and placed in a special repository for analytical processing. Another approach is to create data marts. While similar to a data warehouse, data marts are defined as more strategic in nature and more specifically organized to support the gathering of depart- mental data. Data warehouses can be composed of many data marts. Still another method is called data mining, in which multiple definitions of data from different systems are collected and analyzed for similarities. Data mining provides more artificial intelligence features and can perform advanced analysis against multiple data warehouses and data marts, especially when the data comes from multiple computer systems. All of these alternatives will be examined in this chapter, and its relationship to the analysis and design of ecommerce systems. In order to provide effective data warehouses, data marts, and data mining, it is necessary to understand how to obtain the data that is needed to populate these data structures.

A major component of creating effective repositories of data for DSS is how to extract the information through database query. In order to accomplish this effectively, it is important to understand the transaction processing system, since some transactions might need to be captured when they occur as opposed to after they have updated database files. Furthermore, the process of creating reports and screen displays needs to be included as part of the analyst’s specification. Some reports are pre-coded, while others are generated through user-initiated query applications.

Data Warehousing Concepts Revisited

Operational databases are those that are designed to track business events in real time. In operational databases, processing data usually correlates to cost while the completion of the data’s process relates to revenue. In Chapter 5, we saw that the data in operational databases is accessed at the detailed level where individual records are created, read, updated, or deleted (CRUD). Entities in the relational model are constantly updated to ensure that the integrity of the data is preserved and that no historical information on the entity’s previous status is stored.

Data warehouses, on the other hand, can capture both completed events and their original transactions. This allows data warehouse implementations with the ability to characterize more about how the data was produced at a much more detailed and granular level than usually available in operational databases. However, it is also important to remember that data warehouses are static snapshots of historical events. As a result, they are often not in sync with an operational database, which always reflects the current aggregate picture of the data. This has both advantages and disadvantages. From an advantage point of view, a data warehouse can contain multiple copies of the same data. Each set could represent the same data at a different moment in time, or could represent different views of the same data, or any combination of all of these. Figure 15.1 depicts the relationship between a data warehouse and the operational databases in an ecommerce system.

The issue of data synchronization between the operational databases and the data warehouses is significant. The ultimate consideration when designing a data warehouse system relates to the age of the data in the warehouse and how often this data needs to be refreshed. For example, if an accounting department needs to produce a report by the 15th of every month on the previous month’s activity, then the data refresh would need to occur sometime after the end of the previous month and before the 15th of the new month. On the other hand, if a daily report were needed on the previous day’s activities, then an evening refresh would be required. Data refreshment requires that the data from the operational database be copied to the warehouse. Unfortunately, this may not be the end of the data refresh procedure, since the format of the data in the warehouse may be very different from that of the operational system. Thus, there is typically a need

for the data to be reformatted so it can be fed into the warehouse structure directly. Because a data warehouse might be an aggregation of the operational data, it can be necessary to perform mathematical computations on the ported data to produce derived data information. You might recall that derived data is a violation of normalization and not allowed in the operational database. Therefore, data warehouses are denormalized models of the operational systems and will typically contain data elements that were removed through third-normal form implementation.

Performance Benefits of Data Warehouses

The advantages of developing a warehouse are many. First, it allows users to analyze data without interfering with the operational systems. This is a significant benefit because of the inherent problems associated with SQL. When queries are produced against an operational database to produce reports they often require what is known as database joins. These joins create intermediate databases until the final report is produced. Furthermore, the final database of these joins is in the format of the report being requested. This means that a report from an SQL query is really a sub-schema picture of the original database. Issuing complex queries that require multiple joins can easily tie up an operational database, thus creating serious confrontations between production personnel and support organizations. Therefore, the process of performing DSS requires SQL, which in turn hinders the performance of the production system. Figure 15.2 shows an example of the quantity of dynamic tables that can be produced as a result of a simple SQL join statement.

The above example shows how an SQL PROJECT statement creates a subset schema of columns of the parent entity. Thus, the sub-schema contains the same number of records, but less columns or attributes. The SELECT statement also creates another entity, which reduces the number of rows, but contains the same number of attributes as the parent database. Finally, the join combines the results of the two sub-schemas to produce a third entity, which is in the format of the report requested by the query. This sample shows how much overhead is involved with the production of a simple report. When voluminous data is involved the time to complete the query is even more significant. Indeed, some queries have

been known to run over 24 hours! Therefore, data warehouse solutions should be the preferred method for analyzing data rather than using standard SQL directly against production databases.

Concept of Multidimensional Data

Data warehousing has often been referred to as a three-dimensional way of representing data. The three dimensions are seen as a cube of data. Each cell is viewed as a box within an array. This cube represents one data warehouse aggregation (combination) and provides a number of ways to view the data. Figure 15.3 shows a data warehouse aggregation of three viewable dimen- sions of information. The relational table two-dimensional; it contains rows and columns, which is synonymous with records and attributes. The corresponding multidimensional cube reflects a three-attribute dimensional view of the data. The two-dimensional part is Orders and Items, with the third dimension being Markets. Figure 15.4 reflects another third dimension, the addition of the price

attribute to the array. From a reporting perspective, a user could query for Orders and Items, which would only use two of the three dimensions, or ask for Orders by Item within Market. To use all the dimensions available, the user could search on Orders, by Item within Market by Price.

These examples picture the data warehouse from a logical perspective, that is, the cube does not exist physically, just conceptually. From a physical perspective, data warehouses use a standard data structure called the star schema. The star schema contains the main table in the center of the star, and each node represents another dimension of the data as shown in Figure 15.5.

The star schema in Figure 15.5 allows for queries to be performed based on an Order. In many ways it resembles an ERD in an operational database. However, most star schemas start with a multidimensioned center (concatenated primary-key), so that query time is enormously reduced because there are less joins. Figures 15.6 to 15.8 show how the number of tables dramatically reduced as the number of primary key concatenations in the center table . It is important to recognize that more key concatenations in the center table greatly reduces the types of searches that can be performed on the data, that is, the flatter the schema the less dimensions available to view the data. For example, in Figure 15.8, there

are no nodes in the star schema because there are no dimensions. Therefore, a user can only query the data warehouse to view Items within Orders, by Market and Price. It is also important to recognize that Figure 15.8 contains no validation or look-up tables, which are normally used to validate the data of certain data elements. Data validation is not required in a warehouse architecture because the data is read-only, that is, records cannot be inserted, updated, or deleted. This is significant because this structure greatly improves data access

performance. The removal of validation tables eliminates index pointers and the need for multiple joins of tables, which simply reduces overhead and increases the performance of report queries. Figures 15.6 to 15.8 also depict the number of dynamic searches that are needed to produce one report. It is also feasible to design data warehouses that contain all four types of star schemas. This could occur if there were four departments who had three different fixed views of how they wanted the data to be represented to them.

Because there can be multiple copies of data in a data warehouse environment, there is an advantage to creating more schemas that represent the data as it is needed for a specific report or analysis, as opposed to designing an elaborate star schema that allows for multiple views of the data. The reason for this position is simple: why design a data warehouse that resembles an operation database? Another way of looking at this model is to conceptualize that every data warehouse is nothing more than a view of data for a specific user view. The more alternative searches you offer the user, the less attractive the model is from a performance perspective. However, multiple warehouses require multiple conversions from the operational systems. So, the analyst must balance the design taking into consideration both performance and overhead issues.

Data Warehouse Conceptual Design

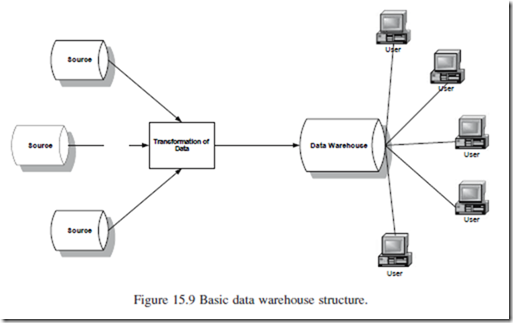

The first step in creating an ecommerce decision support system is designing the data warehouse structure. This structure or architecture provides a framework for identifying how data will be utilized within the ecommerce system. A basic data warehouse architecture is shown in Figure 15.9.

The fundamental characteristics of the basic data warehouse architecture are:

1. Data is extracted from operational databases, flat-files, and other data sources.

2. The data from all of the source systems is first integrated and transformed into another format before being loaded into the actual data warehouse.

3. The data warehouse can only be used for read-only activities for DSS.

4. Users obtain access to the data warehouse through a front-end application tool, usually supplied by the warehouse vendor.

Extracting Data from a Database Source

The data warehouse process starts with identifying the source of the data needed for the DSS. These will typically come from two places: (1) the operational databases that exist in the new ecommerce system and (2) the data files that exist in legacy systems. The most difficult part of the process is to identify the meaning of the data elements in each system so they can be transformed properly into the data warehouse. This can only be accomplished by first creating a central repository of the data—typically by using a CASE tool. Porting data into a central repository is easier when working with relational databases because they are better documented and can be reverse engineered into a CASE tool. However, legacy data is more challenging because of their lack of documentation and less conventional data formats. Notwithstanding the format of the source data, the process to define the operational data is time consuming but extremely valuable for the project to be successful. Many data warehouse products like

Sagent provide their own metadata dictionaries that can be used instead of a CASE tool. These metadata libraries store the relationships that exist among the different yet related databases in the production systems. Analysts also need to extract data from third-party products that are part of the operational system, sush as a weekly stock price file that is integrated in to the production system. In any event, most data warehouse systems can extract data from multiple databases from multiple systems.

Many operational systems can be categorized as “transaction-processing” based. This means that they are heavily geared toward capturing transactions that occur throughout the day. Typically, transaction-processing systems are those that take orders, ship them, and then record financial information. Transaction- processing systems have the following characteristics:

1. High transaction rate.

2. Constantly changing.

3. Data redundancy is avoided in order to ensure integrity.

4. Optimized indexes to ensure SQL query efficiency.

5. Two-phase commit architecture to ensure recoverability.

DSSs using data warehouses provide tremendous advantages for transaction- processing systems because they can reformat data into dimensions so that users can better understand the meaning and results of the transactions.

Staging and Formatting Extracted Data

As previously stated, a significant component of the data warehouse product is a load server that compiles the data and establishes the links among duplicate data elements. Furthermore, the load server allows for the definition of elements, especially for those attributes that have complex meanings. These attributes are called intelligent keys where portions of the attribute have special meanings. This is shown in Figure 15.10 for a Part Number data element.

The load server has the ability to store information about the Part Number so the warehouse can easily identify the data element’s components during a query. Overall, the load server prepares the raw data, and creates a new repository of information in the format required by the data warehouse schema.

Read-Only Activities

As previously stated, the data warehouse is a read-only copy of operational data. The philosophy is that operational data and DSS data are inherently different. Specifically, DSS provides easy-to-understand formats of data so that analytical information can be created to support better tactical and strategic business decisions within the company. These analytical processes require that historical data be kept in different views, unlike the single normalized view required in operational databases. Indeed, one database format alone cannot efficiently provide for both types of functional need. Thus, at the core of all warehouse products is a read-only database that represents the primary component of information analysis.

Front-End Query Tools

In most data warehouse systems, the data access infrastructure makes up the next most important component of the DSS architecture. This data access is composed of front-end query tools and application programs that provide useful and accessible decision support information from the data warehouse. Thus, analysts need to design the warehouse schemas, query logic, and applications to create a useful data warehouse environment that operates under the auspices of the production systems. The important component of application and query support are the third-party applications that are provided with the purchase of the data warehouse. Most warehouse products, like SAS, Sagent, and Oracle, all come with sophisticated developer tool kits that allow for the generation of advanced query and application development. These add-on application products do not, however, provide the proper analysis and design process to determine what they need to do.

Alternative Types of Data Warehouse Structures

Thus far we have only looked as what can be called a “generic” data warehouse architecture. However, there are more sophisticated design considerations when formulating an ecommerce enterprise warehouse system. Figure 15.11 depicts a slightly more complex data warehouse that loads data into what are known as business areas. A business area represents a defined segment of the organization and is based on perceived business similarities. These similarities could be based

on where income is derived, type of clients, etc. Therefore, each business has its own view and use for a warehouse. All of these multiple warehouses are derived from one enterprise warehouse that has been ported from the integration of multiple data sources.

In Figure 15.11, data is extracted from various source systems and integrated and transformed before being loaded into an enterprise data warehouse. This data is then restructured, redesigned, and moved into separate business area warehouses, which are then used for DSS. The major benefits of using a business area architecture are to:

1. Ensure that all business areas are deriving data from a central warehouse system.

2. Create sub-warehouses that can better facilitate the needs of smaller departments.

3. Provide access to different user communities. Internal, consumers, and customers all have different business area needs and access authorization criteria.

4. Ensure that the timing of the data is consistent across all warehouses.

In this way comparisons of data across the enterprise are consistent.

Another hybrid version of the business area model is to create separate area warehouses directly from the load server as shown in Figure 15.12 While this architecture does not contain a central enterprise data warehouse, it has all of the constructs that represent a true data warehouse. Obviously, the advantage is the time and space saving of creating an intermediate enterprise data warehouse. This model is advantageous when there is little need for a centralized warehouse and when there are multiple updates of the warehouse from multiple source files.

Still another intricate architecture is shown in Figure 15.13. This model creates an integrated relational database in third-normal form. The data that comes from source operational systems remains read-only; however, new data can be added to the integrated database directly from users. The purpose of these updates is to provide additional data that facilitates expanded decision support activities. Sometimes this process also helps to “clean up” bad data. Once the new data has been entered, the data is transformed into a data warehouse.

A Decision Support Life Cycle

A System Development Life Cycle (SDLC) for DSS focuses on data, as opposed to processing and functionality. As a result of the elimination of many process- oriented steps, the DSS life cycle is much faster and less complex than its traditional counterpart. The main activity for the analysts is to take data from various sources and platforms and provide multiple strategic views of the data in another infrastructure.

The phases of the ecommerce DSS are:

1. Planning

2. Gathering Data Requirements and Modeling

3. Physical Database Design and Development

4. Data Mapping and Transformation

5. Data Extraction and Load

6. Automating the Data Management Process

7. Application Development and Reporting

8. Data Validation and Testing

9. Training

10. Rollout

Phase 1: Planning

The planning phase in ecommerce DSS is very similar to the project life cycle and entails the creation of a project plan and realistic time estimates. Planning, like that for any project, includes defining the project scope, plan, resources, tasks, timeliness, and deliverables. Figure 15.14 depicts the project planning components.

Furthermore, the DSS will require changes to the network infrastructure. Therefore, there will be technical infrastructure design that needs to be implemented while formulating the proper DSS environment. The technical components include capacity planning, archival strategies, data refresh/update strategies, and operations and job scheduling.

Phase 2: Gathering Data Requirements and Modeling

During this phase the analyst must understand the business needs and data requirements of the users of the system. Because ecommerce systems are comprised of internal users, customers, and consumers, the data modeling requirements for data warehouses will vary depending on the different user views. Essentially, data requirements will involve the process of identifying what elements are needed for DSS by each group of users. The assumption should be that normalized databases exist, legacy links have been made, and intermediate transaction processing systems are in place. If a central repository of all

data elements is in a CASE product, then identifying the components of the warehouse will be much easier and productive. Unlike regular data gathering, analysts should be looking at what users need to see in the form of screen displays and printed reports. Thus, it is the output that identifies what is needed for the data warehouse. Therefore, the analyst does not need to use process models like DFDs to model the data warehouse, rather the specification will consist of query screens for data access, sample reports, and screen display views. The reports and screen displays need to be in prototype form so that users can validate that the information and format are correct. Another interesting aspect of data warehouse design is to determine what users might want to see in addition to what they have already identified. Basically most users will want to see the reports and screen displays that they are used to having from their existing system. However, data warehousing allows so much more, that the analysts need to consider what users might want to see given the robust power of the data warehouse query software. While this activity might seem counter to my previous position that analysts should never create requirements, I do believe that the analyst must at least reveal, usually through demonstrations, the power of the data warehouse to each user constituency. Obviously, this process may not be practical for consumers, except where the marketing department is involved with focus groups. Analysts must remember to inform users that data warehouses do not contain current data. While designing real-time data warehouses is possible, it is unlikely and difficult to implement.

What is most important, however, is not the reports and displays, but the strategy of designing the data warehouse (or data mart) architecture. Reports can usually be modified without great pain, but schema design is more complicated. This process requires that the analyst determine which type of warehouse designs to use. Remember that there can be multiple data warehouses produced from multiple sources, so the options for design are many-to-many as shown in Figure 15.15

Notwithstanding the relationships between data sources and data warehouses, it is important to focus on the schema design, that is, what is the design of the star schema. There are essentially three approaches. The first approach suggests that the data warehouse contain a star schema that resembles the report or screen display. This design was discussed earlier in this chapter. The advantage of this approach is that data is already placed in the format required so there is little need for data manipulation. As a result, the production of the reports and displays are very efficient. Furthermore, there is little that the user needs to do other than just selecting the option to produce the report. The bad news is that the warehouse is very limited because all it can do is produce the report. The economies-of-scale for creating the report in a data warehouse form may seem like overkill, unless the process of obtaining the data in one central repository has significant time saving for the user. Figure 15.16 shows a data warehouse report schema.

The second approach is to create sophisticated star schemas that support a grouping of needs. Each schema would contain a central table that provides a data repository required by a group of users. Each node would provide the supplemental data that might be needed depending on the user group and the report subject. The benefit of this model is somewhat obvious in that it allows warehouses to serve multiple needs and provides for more DSS analysis of the data. The downsides are also obvious; users need more knowledge on how to query the data and therefore need training and an understanding of what data is available for analysis. In addition, the performance of the reports and displays will vary depending on the level of sophistication of the queries that are afforded to the end users. Figure 15.17 depicts a star schema for group user query.

The third approach comes back to the earlier example of the enterprise level data warehouse. Rather than have multiple warehouses serving many users, one central complex star schema can be designed to afford the needs of an entire organization, group, or department. These types of data warehouses will contain the most nodes on the schema, and look very much like a third-normal form database. The advantage is that data is copied once per cycle to one data warehouse thus ensuring that the data all represents the same time period. The downside is that the warehouse becomes voluminous and requires significant data query manipulation in order to extract the needed information.

Another challenge in the movement of data from operations sources to data warehouses is derived elements. I previously discussed the handling of derived data as being a violation of third-normal form and a database redundancy. As a result, all derived data elements were removed from the production database. However, because data warehouses are read-only, there is no need to eliminate derived data. In fact, warehouses that contain derivations will be much more efficient for query and report production. The question to answer is how to populate derived data back into the database. If a CASE tool was used to produce the normalized operational database, then there is a good chance that derived elements might exist in the data dictionary. You might recall in an earlier chapter (Chapter 5) that many derived elements are first placed in the data dictionary and defined in a process specification. Thus, the identity of these derived data elements and their calculations can be extracted from the data dictionary. Unfortunately, such is not the case when dealing with legacy databases and flat-file systems. In these situations, the analyst must reconcile each report and screen display against the existing data dictionary to determine where derived elements exist. Furthermore, just identifying them is not enough— analysts must work with users to understand how they are derived. Once all derived elements have been discovered and defined they need to be added to the metadata dictionary usually provided with a data warehouse product.

The use of metadata (data that defines data) will be discussed later in this chapter; however, its use is important when integrating derived data elements in the data warehouse. The metadata repository will allow analysts to store the calculation formula. When the data warehouse is produced (or refreshed), a triggering operation will examine the data and calculate the derived values as set forth in the metadata repository. The process of calculating derived data elements is shown in Figure 15.18.

There may also be derived data elements that cannot be directly calculated from the supplied data sources. This means that the derived elements must be calculated outside the data warehouse domain and then imported directly into the data warehouse. This is shown in Figure 15.19.

In summary, the data gathering and modeling phase must address the following key questions:

1. What will be the number and design of the data warehouses?

2. How will data be reflected in the data warehouse model, particularly the derived data, which does not exist in the production database?

3. Is there a CASE tool that will allow for the transformation of derived data elements from a data dictionary to the data warehouse?

4. How often does data need to be refreshed?

Phase 3: Physical Database Design and Construction

The physical database design entails a number of steps that enable the database to operate efficiently. There are three main components involved in the physical design process: fact tables, dimension tables, and look-up tables.

Fact tables, which are sometimes known as major tables, contain the quanti- tative or factual data about a business. It is, in effect, the information for which users are doing the query (Poe, 1996). Fact table information is often numerical measurements of data that contain many attributes and sometimes millions of occurrences. For example, a marketing database can contain data on various sales for the company. Dimension tables or minor tables, on the other hand, are smaller than fact tables and hold descriptive data that reflect various dimensions of the facts—like month, year, region, etc. In the previous example, dimensions of sales could be product, markets, etc. Figure 15.21 reflects the marketing data warehouse with dimension tables.

Thus, the combination of fact tables and dimension tables provides for the basis of the initial star schema. This star schema can then allow users to scan the data, and with the appropriate database constraints provide interesting analysis of the information. Look-up tables are another type of dimension table. They provide fill-in information used to describe possible values that certain fact data need. They represent entities that are similar to third-normal form validation tables. An example of a look-up table the available colors of a particular model car. Figure 15.22 shows a combined star schema physical database that combines facts, dimensions, and look-up tables.

In many ways, much of the physical database design concepts discussed in this section could be considered part of the logic data modeling phase (Phase 2). While much of this design could be done during Phase 2, there are some related decisions that require more knowledge about the physical environment, that is, the actual data warehouse environment. Furthermore, the process of

denormalization is often accomplished during physical as opposed to logical database design. Beyond just database design, the analyst also needs to:

1. Identify the Actual Primary and Secondary Key Structures. Sometimes this could involve denormalized data elements.

2. Develop Aggregation Strategies. Aggregation is the process of accumulating fact data from other transactions. This means that a derived data element transaction could be generated that depicts the results of many detailed transactions.

3. Creating Indexing Strategies. Indexes are separate tables that are internally created to improve look-up performance. An index is an indirect address that points to where specific information exists in the database, without having to search every record. Figure 15.23 depicts an index look-up table. Any field can be indexed so that query speed to access that information can be dramatically increased.

4. Develop Partition Tables. Partitioning is the process of breaking up physical databases into many different storage areas. Logically, these multiple partitions are treated as one database, but physically are distributed on many different areas of a disk, or across multiple physical hard disks. The benefit of partitioning is purely performance. Parti- tioning of data maximizes performance of the data warehouse when particular parts of the data are needed by specific users. So, in theory, two users can be using the same logical data warehouse, yet be accessing two separate physical partitions of the same database.

5. Performing Capacity Planning. Analysts must determine the amount of storage space and processor speed that they will need in order to provide an efficient data warehouse environment. Analysts should not do this configuration alone; network architects must be involved to assist in the determination as to what processor types might best handle the load and perceived growth of the data warehouse. The key input needed from

the analyst is the number of records that will be estimated in each data warehouse. There are two components that are needed to determine space requirements. The first, which was covered in an earlier chapter, calculates the size of each data warehouse record by multiplying the total number of maximum characters in each attribute within a record. By maximum I mean the largest size for all VARCHAR2 (variable length) data elements. The result of this calculation will establish each record size. The analyst needs to then calculate the database size by multiplying this total record size by the estimated number of records in the data warehouse (see Figure 15.24).

This last step might not be that easy, but sometimes estimating maximum data warehouse sizes (i.e., the largest it could be) is the safest calculation. Furthermore, analysts must take into consideration the needs for temporary storage that are required during SQL JOINS, PROJECTS, and SELECTS of data from tables. Remember that SQL-based queries generate dynamic database tables for reporting. Thus, analysts need to consider doubling or even tripling the storage requirements of the host data warehouse. This is why partitioning can be so effective; it allows network architects to scale storage by attaching more physical hard disks. The issue of how many processors to have, the memory size, and disk access speeds needs to be determined by the proper network professionals, but heavily based on the input supplied by the analyst.

Phase 4: Data Mapping and Transformation

The data mapping and transformation phase is one where the analyst must locate the source of the data in the operational systems, do analysis to understand the types of data migrations and transformations that need to occur, and map the source data to target data warehouses. This phase ultimately determines what the source data will be, how it will be converted, and where the data will be ported. The specific steps within this phase are as follows:

1. Defining the Data Sources: Much of this activity can be accelerated if the analyst has loaded the data elements of each system into a central CASE tool repository. Other factors are the extent of legacy data that exists in the system, and the nature of how the data is stored. Furthermore, the amount of data redundancy and derived data elements all play a factor in the time requirements to ascertain where the data is and how it is defined.

2. Determining File Layouts: Once data sources have been defined, the analyst must clearly understand the file layouts of these systems and how the files interrelate with each other within a particular source repository. For example, a file that contains fact data may be linked to another table in the same system that provides validation information. Thus, the analyst needs to understand the schema of each source system. If the source is a relational database, then the best approach is to generate (if not available) an entity relational diagram (ERD). The ERD will expose the referential integrity that exists at the database level and expose those relationships that have been implemented at the application level. It might also expose data redundancies. If the system is a flat-file legacy application, then the analyst should print out the file description tables (particularly if the system is written in COBOL). The file description tables will identify all of the data elements in each file. By creating a simple template or utility program, analysts can determine where duplicate elements exist among these flat-files. This will allow the analyst to understand how the files link to one another, where there are redundancies, and how to schematically look at the system from a dimensional and relational perspective.

3. Mapping Source Data to Target Data: Once the source systems and schematic have been identified, the analyst needs to map or identify the source data to the data warehouse star schemas. This can be accomplished by creating a matrix that shows source elements and their attributes as well as their corresponding data warehouse data elements. Figure 15.25 shows a sample matching source-to-target matrix developed using a spreadsheet program.

4. Develop Transformation Specifications: The above matrix is only one component of what is called the Transformation Specification. Another name for a Transformation Specification is Conversion Document. A Conversion Document provides the requirements for a programmer to develop a utility program that prepares, moves, and transforms source data to a data-staging file. The data-staging file is an intermediate data store that is created for actual transport to the data warehouse.

5. Reviewing the Update Cycle: This process establishes the refresh cycle required for each data warehouse. Refresh cycles define when a cycle begins, when it ends, and how long it runs. It is also important for the analyst to have some idea about how long a refresh cycle might take. This can be determined best by running tests on sample source files and then forecasting the total update time based on the results of the test. The determination of conversion time could be critical since the update cycle needs to complete prior to the user wanting to see the data results. Therefore, if an update cycle runs every 24 hours, then the update cycle needs to complete by the beginning of the next morning. While this might sound like plenty of time, many large-scale update cycles can take substantial time to complete. The time to complete also heavily depends on the amount of data manipulation that occurs during the conversion of data from source-to-target.

6. Developing Strategies for Archival: This step entails the decision of what portions of warehouse data can be archived or deleted from the DSS. Typically this becomes more of an issue when looking at transaction-based information. The decision is to determine when dated transactions, which are used to formulate results of the data, are no longer providing enough value to keep them actively stored in the live data warehouse. The reason for purging certain transaction is because of the performance degradation that it causes during warehouse queries. Sooner or later, historical transaction data needs to be removed from the active warehouse. Analysts have two options. Option one is to generate a summary transaction record that might represent a group of detailed transactions. This means that the summary transaction would provide a view of the meanings of the detailed transactions. Figure 15.26 provides an example of representing detailed transactions in a summary record. If this option is selected then the detailed transactions can be deleted or archived. The creation of summary records may also require that specific program changes be made to query applications so that a summary record can be appropriately identified from a detailed transaction. The second option is to just create a separate file of purged transactions that can be accessed using a different set of query applications. This purged

file would typically be resident on a separate processor so that it would not conflict with normal data warehouse activities. Regardless of which option is used, the analyst needs to create specifications that identify the conditions for purging data and where the data is to be stored. Both options also require the development of specific query applications that can access purged data when needed.

7. Review Security Requirements: Once the data warehouse is in place, the analyst must integrate security requirements into the specification. Unlike many security issues, because data warehouses are read-only, there are no concerns about the creation of illegal or dangerous records. However, data warehouse information is still proprietary and could provide valuable information for those with malicious or illegal intentions. Thus, securing data warehouses from unauthorized use is a key component of data warehouse design. This is especially important because most access to data warehouses in ecommerce systems will occur via the Web, either from an Intranet or an Internet. So who has access to what features in a Web-based data warehouse query becomes very important in the design of the warehouse system. Much of the design issues covered in Chapter 13 are relevant in data warehouse development as well. Therefore, analysts should review the security requirements of the data warehouse using the same approach as in any other system.

8. Capacity Plan Review: Once the data mapping and conversion methodologies have been completed, the analyst will have a much better perspective on the specific capacity requirements. This step simply suggests that the capacity planning and space determination is an iterative process in which the analyst and network architects will fine- tune the capacity needs as each phase in the life cycle is completed.

Phase 5: Populating a Data Warehouse

This phase involves the actual extraction, conversion, and population of the target data warehouses. This is typically accomplished with a combination of conversion and update software supplied with the data warehouse vendor’s product, and with the development of specific conversion programs designed by the analyst. The steps involved with completing the population of data warehouse data are as follows:

1. Loading and Staging the Data: This step may involve an intermediate program that begins the process of building a repository of data where the data warehouse software will extract the information from. While many data warehouse products will allow for the loading of data directly from an operational database or file system, this is often not recom- mended. Accessing operational data is usually not a best practice for data architects.

2. Converting and Integrating Data: This step entails the execution of the actual conversion programs and the integration of multiple data sources. The process involves the confirmation that multiple data source migrations have been completed and the execution of one or more conversion programs (that migrate the data as per the specification) have occurred. This step should result in the formation of a warehouse load server that is ready for testing before being moved to the live data warehouse. Figure 15.26 shows the process.

3. Testing and Load: The Test and Load phase is the final step to creating the data warehouse. The test part of this step is to provide various reconciliation results that provide assurance that data has been converted to specification. For the most part, the testing should be consistent with audit-trail philosophies including the verification that the number of records read, written, and transferred is correct. If records are rejected for whatever reason, these should also be reported. The load portion of this step involves the actual porting of the data into the warehouse for use by users. There should also be an audit trail report specifying that the process of final load has been successful.

4. Capacity Review: Once again, the analyst should review the capacity needs of the system after the final data warehouses have been created, since the storage at the time of completion will represent the actual storage prior to query operations on the data.

Phase 6: Automating Data Management Procedures

While much of the work has been performed to generate the data warehouse, there is a need to create automated processes that refresh the information on a periodic basis. The periods may vary depending on the type and number of data warehouses that have been designed. Thus, once the first data extraction is completed and data warehouses are created, the analyst needs to design automated utilities that migrate new data to a preexisting data warehouse. These utilities must be automatically activated based on time and/or conditions. They must also activate the application operations that are part of the life cycle of refreshing data, namely Data Mapping, Conversion, Extraction, and Load. When subsequent or ongoing refresh is in place, data will be replaced in the load server and ultimately in the data warehouse. The procedures for verifying that this process has occurred properly also involve the creation of utility applications that report on the outcomes of each automated step, each time the refresh occurs.

There also needs to be an automatic backup and restore process should data become corrupt or damaged because of a hardware failure. There is typically a backup/restore feature available in data warehouse products, as well as from third-party vendors. Restoration procedures must be designed and tested in order to ensure that data warehouse information can be restored correctly and within the time constraints set forth by the user communities. Of course, all automated processes need to be tested, therefore, the analyst needs to design test case scenarios that will assist in the verification that data can be backed up and restored correctly.

Phase 7: Application Development and Reporting

This phase focuses on the delivery of output from the data warehouse. DSS application development is usually accomplished by using special data access tools to design and pre-build reports. Indeed, the tools selected to generate reports are critical to the success of any data warehouse. Analysts need to determine the environment to support data access and information analysis rather than just selecting a front-end software package. There are several steps that are required to understand the complexities of providing a reporting environment for users in an ecommerce environment. The first issue to address is the ways in which users can receive information from the data warehouse. There are four types of reporting as follows:

1. Parameter-Based Ad Hoc: This allows users to enter particular information that will act as a parameter into the report application. Parameters often consist of date information, or domain constraints like department or group. Users, while changing parameters, are not creating new reports, but rather modifying existing reports within a pre-defined range of choices.

2. Display Access: This involves the display of reports on the Web or some other central place on the system. The important concept here is that reports are generated as needed but assumed to be effective in a view-only mode.

3. Complete Ad Hoc: The user interacts directly with the data warehouse using the query tools that support the warehouse product. Thus, users will need to be trained on how to issue queries, and how to manipulate the data in the warehouse. While this option provides the most versatility, it is also the most difficult report to use successfully. The problem is whether users can really master the query tool, be knowledgeable on how the data is stored, and can appropriately test their results. Indeed, the largest risk for users is generating reports that are not accurate.

4. Hard Copy: These are predefined and developed by the analyst. They typically have a fixed format and are printed and forwarded to the appropriate requester.

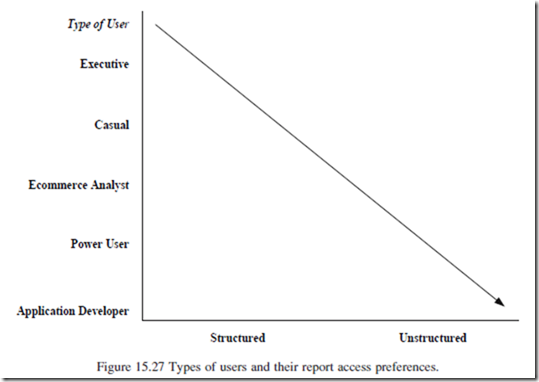

There are also a number of ways that users need to navigate through an ecommerce DSS. This is based on user needs, similar to my earlier definition of user categories. The three primary methods are:

1. Executive: Allows executives to get predefined reports quickly and on a regular basis.

2. Structured Decision Support: Contains predefined and ad hoc reports and outputs. Thus, structured decision support provides another level of analysis offered to the user community. This hybrid approach is usually required by middle-tier users like line managers and supervisors, where there is a clear need to generate what-if analysis in a dynamic day-to-day environment.

3. Unstructured Decision Support: These users have access to all types of reports, but prefer to design ad hoc reports from scratch and use them when they are needed for a particular analysis. These users are more activity-based on the information they need.

The level and categories of users vary in how they use reports. What reports are needed by users also depends heavily on whether they are internal, customers, or consumers. Typically, internal users are those who can be trained to use unstructured techniques to access data warehouse information. Figure 15.27 represents another view of how certain types of users utilize report options.

Report Access and Analysis

A data warehouse is not an automated method of getting the information you want without working to find it. Users should not be disillusioned about what a data

warehouse product will afford them to do. Misunderstanding the commitment that data warehouses require, both from designers and users, is a critical factor for success (Poe, 1996). Figure 15.28 depicts the sequential steps that users should follow when using DSS and when analyzing their data.

The viewing of the reports is another important component of analysis of DSS. Users may want to specify what format they want to view the data. Thus, analysts need to provide options for users where they can select from a fixed menu of display options. While tabular and cross-tabular reports are most common, graphical output is also a common way of analyzing what reports are telling users about their data. Figure 15.29 shows various types of data warehouse report presentations.

Listed below are the most common report functions that DSS should provide to users:

1. Changing the axis: This allows users to swap rows and columns.

2. Changing sort order.

3. Adding and deleting subtotals and other information breaks.

4. Creating color reports with highlighting of certain variables.

5. Format options for fonts, styles, sizes, and colors.

6. Graphical display of information should support line graphs, dimensional and stacked bars, pie, scatter, bubble, tape, and area.

7. Ability to change graph axis labels, colors, and titles.

Data Warehouse Query Tools

There are a number of types of queries that allow for the production and viewing of the information that has been defined herein. There are four types of query tools:

1. Report Writers: These are third-party products that access the data warehouse directly and produce reports based on SQL type queries. Examples of third-party report writers are Crystal Reports and Cognos (see Figure 15.30).

2. Multidimensional Database Management Systems (MDBMS): These tools create a subset database that can be populated by both operational systems and data warehouses. These databases are usually multi- dimensional and are often defined as Online Analytical Processing systems (OLAP). They allow for specialized reporting of data in an aggregate form that can support advanced data mining activities (see Figure 15.30).

3. Advanced DSS Tools: Advanced tools are very similar to report writers except they support more advanced query capabilities and dimensional analysis. They specifically support access to multiple data warehouses from one query.

4. Executive Information Systems (EIS): EIS represents a higher level of DSS. These systems contain advanced metadata facilities that can create sophisticated and predefined analysis of data. EIS also supports Artificial Intelligence activities, which are fundamental infrastructures to the support of trend analysis and decision making alternatives (see Figure 15.31).

Phase 8: Data Validation and Testing

While I have mentioned that testing must occur throughout the Data Warehouse implementation, there is a need to have a central test plan strategy. Although each phase has a quality assurance process, the entire data warehouse life cycle requires integrated test scripts. These scripts establish what the expected results should be from each previous life cycle step. Sometimes this is best accomplished by having reports that provide reconciliation of what the warehouse contains. However, analysts should also suggest that users review the output reports. Users should be encouraged to hand check certain reports until they are satisfied that the system is calculating and collecting information based on their requirements. All too often we see users who just accept what the output of a report tells them!

Furthermore, analysts should plan for change—for change is inevitable with DSS ecommerce systems. Change is not only modifications to the reporting, it is the addition or changing of what data needs to be supplied to the data warehouse and in what forms. In addition, new systems are added and need to be integrated into the overall data warehouse schema. With this in mind, analysts need to design and maintain sophisticated testing systems that can be reused each time a change is made to the warehouse infrastructure or transformation process.

Phase 9: Training

While this might not be the actual responsibility of the analyst, it is important to plan for the training of users with the objective of teaching them how to work with the data warehouse. Notwithstanding that pre-designed reports do not require much data warehouse knowledge from the users, most systems will always have “super users” who want to do advanced analysis on the DSS. The training phase of the DSS life cycle includes the following considerations:

1. Understanding the scope of information in the data warehouse.

2. Training on the front-end tools and how to write queries.

3. Understanding of how to use existing reports and how to modify them.

4. Train the trainer concepts so users can eventually own the responsibility of training new employees or other users.

Phase 10: Rollout

This phase essentially involves the process of going live. It requires the actual deployment of the data warehouse into the production network environment, creating user support infrastructures including adding new users, creating the physical procedures for users to request new reports and options, providing the procedures for backup and restore, and creating a team to troubleshoot problems that inevitably occur early in the DSS rollout.

Understanding Metadata

Metadata is data about data. Metadata is the fundamental component that allows data warehouse systems to provide the complex information that users require. There are two primary forms of metadata: operational and DSS. Operational metadata contains the information about where the data is in the production systems and/or in the staged database system prior to conversion. The DSS metadata establishes a catalog of the data in the data warehouse and maps this data to the query applications. Figure 15.32 depicts the metadata architecture.

Therefore, the metadata infrastructure provides the front-end users with the ability to manipulate the data without working directly with the data. That is, the user works directly with the metadata, which in turn operates on the data warehouse attributes. Thus, the metadata provides a higher level of abstraction about the data. This abstraction allows for the ease of use of operating with the database.

Comments

Post a Comment