Logic Data Modeling Tools

Logic Data Modeling Tools

Logic data modeling is a set of procedures that examines an entity to ensure that its component attributes (data elements) should reside in that entity, rather than being stored in another or new entity. Therefore, LDM focuses solely on the stored data model. The LDM process is somewhat controversial and subject to various opinions; however, listed below are the common steps used by many professionals in the industry:

1. identify major entities

2. select primary and alternate keys

3. determine key business rules

4. apply normalization to 3rd normal form

5. combine user views

6. integrate with existing models (legacy interfaces)

7. determine domains and triggering operations

8. de-normalize carefully

Normalization Defined

Perhaps the most important aspect of logical data modeling is Normalization. Normalization, at a high level, is generally defined as the elimination of redundancies from an entity. Although this is an accurate definition, it does not fully convey the true impact of what normalization is trying to accomplish, and more important, what it means not to apply it to your model.

We can see from the above list that normalization is only one step in the LDM process, albeit the most critical. I have found, however, that there is an advantage to understanding normalization first, before trying to understand the entire LDM process.

Before we explain this any further it is necessary to define the three forms of normalization.11 These forms are known as 1st normal form, 2nd normal form and 3rd normal form. Normal form is typically abbreviated “NF.” Each normal form should be dependent on the completion of the previous form, therefore, normalization should be applied in order. Below are the rules that satisfy each normal form:

1st NF: No repeating elements or repeating groups 2nd NF: No partial dependencies on a concatenated key 3rd NF: No dependencies on non-key attributes

Normalization Approaches

If DFDs have been completed, all data stores that represent data files become the initial major entities or the first step in the LDM. Therefore, if process models are done first, the selection of major entities is easier. If process models are not used then the analyst must hope that a legacy system exists from which the data can be converted and then tested for compliance with normalization. If no legacy system exists, you will need to examine forms and try to determine what constitutes an entity.12 The following example assumes that a DFD exists and shows that the process creates a data store called “Orders.” This data store represents the storage of all orders sent by customers for the various items that the company sells. Figure 6.1 is a sample of a customer order and the data elements that exist on the form.

We begin this normalization example assuming that steps 1, 2 and 3 of the LDM are complete (Identifying Major Entities, Selecting the Primary and Alternate Keys, and Identifying Key Business Rules, respectively). Our major entity is the data store “Orders” and its Primary Key is “Order#.” There are no Alternate Keys (a concept discussed later). The first aspect to point out is that the data store from the DFD becomes an entity in the LDM process (see Figure 6.2).

To show how normalization works, Figure 6.3 depicts the Orders entity with its primary key Order# inside the box. The non-keyed attributes (data elements are often called attributes of an entity when performing LDM) are shown as dependent cells. These cells or attributes basically belong to or “depend on” the entity and do not affect the outcome of the normalization process. However, a dependent attribute may become a key attribute as a result of normalization. We will see this in the example shown in Figure 6.3.

The form shows that a repeating body of elements exists in the area of items ordered. That is, many items can be associated with a single order. We call this a repeating group, and it is shown within the box in Figure 6.3. Looking at this entity, we must first ask ourselves: are we in 1st NF? The answer is clearly no

Figure 6.1 Sample customer order form.

Figure 6.2 This diagram shows the transition of a data store into an entity.

Figure 6.3 The Orders entity and its associated attributes.

because of the existence of a repeating element or group. By showing the box surrounding the repeating body, we are, in effect, exposing the fact that there is a primary key being treated as a non-key attribute. In other words, there really is another entity embedded within the Orders entity. Whenever a normal form is violated, the solution is to create a new entity. If it is a 1st NF failure, the new entity will always have a concatenated primary key composed of the primary key from the original entity joined with a new key from the repeating group. This new key must be the attribute in the group that controls the others. All other attributes will be removed from the original entity and become part of the new one, as shown in Figure 6.4.

Figure 6.4 now shows a new entity: Order Items which has a concatenated primary key composed of the Order# from the original entity and the Item ID which is the identifier of each item that is contained within an order. Note that all the non-key (dependent) attributes now become part of the new entity. First NF now allows the system to store as many items associated with an order as

Figure 6.4 This diagram shows the entities in 1st NF.

required. The original file would have required an artificial occurrence to be included in each record. This would result in (1) order records that waste space because the order had fewer items than the maximum, and (2) the need to create a new order if the number of items exceeds the maximum allotted per order in the file.

Our entities are now said to be in 1st NF. Don’t rejoice yet—we must now check 2nd NF. Second NF applies only to entities that have concatenated primary keys. Therefore, any entity that is in 1st NF and does not have a concatenated primary key must be in 2nd NF, simply by definition. As a result, the Orders entity must be in 2nd NF. The issue becomes the Order Items entity. To check for 2nd NF compliance, the analyst needs to ensure that every non- key attribute is totally dependent on all parts of the concatenated primary key. When we run this test on Item Name, we see that it depends only on the Item ID and has no dependency on Order#. It is, therefore, considered a 2nd NF violation. Once again, a new entity must be created. The primary key of this new entity will always be the key attribute for which elements had a partial dependency. In this case, it is Item ID. All non-key attributes dependent only on Item ID will become part of the new entity, which we will call “Items.” Note that non-key attributes “Quantity” and “Amount” stay with the Order Items entity because they are dependent on both the order and item keys. To be clearer, the quantity of an item ordered will be different for each order (or the same by coincidence). The result now is three entities as shown in Figure 6.5.

We are now in 2nd NF. Once again, don’t rejoice too early—we must test for 3rd NF. Third NF tests the relationship between non-key attributes to determine if one is dependent on another, that is, if a non-key attribute is dependent on another non-key attribute. If this occurs, then the controlling non-key attribute is really a primary key and should be part of a new entity. If we look at the Order

Figure 6.5 This diagram shows the entities in 2nd NF.

Figure 6.6 This diagram shows the entities in the first phase of 3rd NF.

entity, we will find that both Customer Name and Customer Address13 are really dependent on the non-key attribute Customer ID. Therefore, it fails 3rd NF, and a new entity is formed with the controlling attribute, now a key. The new entity is Customers (see Figure 6.6).

Note that the Customer ID also remains as a non-key attribute in Orders. All 3rd NF failures require this to happen. The non-key attribute Customer ID is called a foreign key and enables the Customer entity and Order entity to have a relationship.

We may ask ourselves if we have now reached 3rd normal form. The answer is still no! Although not intuitively obvious, there are still non-key attributes that are dependent on non-key attributes. These are, however, slightly different from the Customer ID case. For example, if you look at the Order Items entity, there is a non-key attribute called Amount. Amount represents the total of each item included in an order. Amount is a calculation, namely Quantity ∗ Unit Price.

Attributes that are calculations are said to be dependent and are known as a derived value. One can see that Amount is indirectly dependent on Quantity in the entity Order Items. When non-key attributes are deemed derived and thus redundant because their values can be calculated at any time, they are removed from the entity.14 The same problem also exists in the entity Orders. Subtotal and Total Due are also derived and therefore must be removed from the logical model. Our 3rd NF LDM is now modified as shown in Figure 6.7.

At this point we believe that 3rd NF has been reached and we should now produce the Entity Relational Diagram (ERD), which will show the relationships (connections) of one entity with others. The ERD is fairly simple to draw, and the analyst should start by putting each entity into the accepted ERD format (see Figure 6.8).

The ERD in Figure 6.8 depicts the relationships of all the entities. Let’s see what it tells the analyst:

• The Order entity has a “one and only one to one to many” relationship with the Order Items entity. That is, there must be at least one item associated with an Order, or the order cannot exist.

• The Items entity has a “one and only one to zero to many” relationship with the Order Items entity. The difference between this relationship and the one established with Orders, is that here an Item does not have to be associated with any order. This would be the case for a new item that has not yet been ordered by any customer.

• The Order Items entity has a primary key that is comprised of the concatenation of order# and item_id, which are the primary keys of Order and Items, respectively. The Order Item entity is said to be an “associative” entity, in that it exists as the result of a many-to- many relationship between Order and Items. Specifically, one or many orders could be associated with one or many items. A many-to-many relationship not only violates normalization, but creates significant problems in efficient SQL15 coding. Therefore, associative entities are 15 SQL stands for Structured Query Language. SQL is the standard query language used in relational database products. SQL was invented by IBM in the early 1980s.

Figure 6.7 This diagram shows the entities in the second phase of 3rd NF.

created to avoid these relationships. First NF failures often result in associative entities. It should also be noted that the primary keys of Order Items are shown with an “(FK)” symbol. This model, which was developed using Popkin’s System Architect CASE tools, shows all primary keys that originate in a foreign entity with a “(FK)” notation. Although this is not an industry standard, software products vary in their depiction of derived primary keys.

• Customers has a “one and only one to zero-to-many” relationship with the Order entity. Note that a Customer may not have ever made an order. In addition, this model shows customer_id in the Order entity as a non-key attribute pointing to the primary key of Customers. This is the standard definition of a foreign key pointer.

Figure 6.8 The entities in ERD model.

What Normalization Does Not Do

Although we have reached 3rd NF and completed the ERD, there is a problem. The problem is serious and exists in the case of a change in Unit Price. Should the Unit Price change, there is no way to calculate the historical costs of previous order items. Remember that we eliminated Amount because it was a derived element. This poses an interesting problem in that it appears that normalization has a serious flaw—or does it ? Before making this evaluation, let’s first determine the solution to this problem. Does replacing Amount in the Item entity solve the problem? Although we could “back into” the old price by dividing the Amount by Quantity, it would not represent a true solution to the problem. Looking closer, we will ultimately determine that the real problem is a missing attribute: Order Item Unit Price or the price at the time of the order. Order Item Unit Price is dependent on both the Order and the Item and is therefore wholly dependent on the entity Order Items. It becomes a non-key attribute of that entity, which means our ERD must be modified. It is important to note that Order Item Unit Price is not a derived element. It is only related to Unit Price from the Item entity at the time of the order16; thereafter they are separate elements. Because of this phenomenon, the analyst has actually discovered a new data element during normalization. To be consistent with the rules, this new data element must be added to the ERD and the Data Dictionary (see Figure 6.9).

Figure 6.9 ERD with the addition of order_item_unit_price.

The question now is: what does all this mean? To put the above problem and solution into perspective is actually quite simple: normalization will not work completely unless all attributes are included during the process. While normalization itself will not uncover missing elements, the good news is that the normalization process and the ensuing ERD did uncover the problem! If the analyst stands to learn anything from this example, it is that normalization does not ensure that the model is complete nor is it a panacea for the art of data modeling. It is simply another tool that the analyst can use to reach the logical equivalent, albeit a very important one.

The Supertype/Subtype Model

A troublesome database issue occurs in the LDM when there are records within an entity that can take on different characteristics or have many “types” of data elements. By “type” we mean that a portion of the data elements in a specific record can vary depending on the characteristic or identification of the record within that entity. Another way of describing this issue is that a portion of the elements contained within a given record can be different from other records of the same entity depending on the type of row it represents, or as it is defined as a “subtype” of the record. A subtype, therefore, is the portion of the record that deviates from the standard or “supertype” part of the record. The “supertype” portion is always the same among all the records in the entity. In other words, the “supertype” represents the global part of the attributes in an entity. A diagram of this phenomenon can best explain this concept in Figure 6.10:

Figure 6.10 Supertype/subtype relationship.

The significant difference between a subtype and just a type identifier (using a foreign key) is the existence of at least one nonkey attribute that follows just that subtype part of the record. Thus, the main reason to design a supertype/subtype database is the existence of multiple permutations of different elements that exist in just certain types of records. Keeping all the permutations of elements within a record format can be problematic. First, it can waste storage, especially if each subtype has a significant number of unique elements. Second, it can create massive performance issues, particularly as it relates to the querying of data. Using the above example, we show two potential ways to store the data. The first (Figure 6.11) represents a simple solution with all the permutations existing in the record. The “type” of record is identified using a foreign key pointer to an entity called “Educator Type.”

Figure 6.11 Educator ERD using one entity with foreign key identifier.

This solution, while having only one main entity, wastes space because all elements are not used in any one “type” of record. The user must be aware of the meanings of the type identifier and remember which elements exist for each type of educator. This method of logic data modeling violates the concepts of normalization, and entrusts the integrity of values of elements in an entity to either an application program’s control, or to the memory of the user. None of these choices are particularly dependable or have proven to be a reliable method of data dependency.

On the other hand, Figure 6.12 provides a different solution using the supertype/subtype construct.

This construct depicts each type of educator as a separate entity, linked via a special notation in the relational model, known as the supertype/subtype relation. The relationship is mutually exclusive, meaning that any global supertype can have only one of the three subtypes for any one supertype occurrence. Thus, the relationship between any row of a supertype must be one-to-one with a subtype. The supertype/subtype model creates a separate subtype entity to carry only its related elements that are unique to its subtype.

There are two major benefits to this alternative structure. First, the construct saves storage, in that only elements that exist in a subtype are stored in each entity. Second, the subtype information can be directly addressed without first accessing its supertype. This is because each permutation subtype entity contains the same primary key as the parent. Having this capability is significant because a user can automatically obtain the unique information from any subtype without having to search the entire database, as would be the case with one entity holding all the elements (as in Figure 6.11). The benefits from this construct are particularly advantageous when the number of rows among the subtypes varies significantly. Suppose, for example, there are 5 million educators in the database.

Figure 6.12 Supertype/subtype entity construct.

The Educator database would therefore contain 5 million rows. Four million of the educators are high-school teachers, and as such, the High School subtype entity has 4 million records. Nine hundred thousand educators are professors and the remaining 100,000 educators are deans. Therefore, the Professor database and Dean database have 900,000 and 100,000 records, respectively. In the supertype/subtype configuration applications could access the sample of each type without searching through every record. This improves not only performance, but also access speed, in that each entity is separate and would not interfere with access to the other.

The supertype/subtype construct is not limited to mutual exclusivity; it can support multiple subtype permutations. For example, suppose an educator could be a high-school teacher, college professor, and a dean at the same time, or any combination from among the three types. The model would be modified to show separate one-to-one relationships as opposed to the “T” relationship depicted in Figure 6.13.

Supertype/subtypes can cascade, that is, can continue to occur within the subtypes. Take the following example in Figure 6.14.

The above example reflects how subtypes can continue to cascade. Notice that the same primary key continues to link the one-to-one relationships of the entities. In addition, Figure 6.14 also exposes another interesting aspect of the supertype/subtype model relating to a subtype that has no non-key attributes. Such is the case with the subtype entity Adjunct Prof. In this situation, the empty entity serves only to identify the existence of the subtype, but has no other distinct elements that are attributed to its occurrence. The use of Adjunct Prof, therefore, was created only because the other two subtypes (Tenured Prof and Contract Prof) had unique elements and were used as subtypes. This example serves only to show that the supertype/subtype model is not perfectly balanced, and often has components that are necessary due to other factors that benefited the model.

Figure 6.13 Supertype/subtype model without mutual exclusivity.

Figure 6.14 Cascading subtypes.

Cascading subtypes do not need to be of the same relationship. In other words, the subtype educator could have been exclusive to a cascade that is mutually exclusive as follows in Figure 6.15.

Note that the example shows the subtype identifier Professor Types is a validation entity in 3rd normal form (see Figure 6.16).

Supertypes and subtypes must also be normalized following the same rules that govern any entity decomposition. For example, the subtype Educator Types contains elements that are not fully normalized. For example, Grade_Level and Subject in the subtype entity High School Teacher can be validated using a look-up table. Department, School, and PhD_Subject can also be validated. The resulting fully normalized ERD is shown below in Figure 6.17.

Combining User Views

Normalization has concentrated our analysis on the challenges of moving and placing attributes in the correct entity. In each of the normalization examples, a violation of an NF has always resulted in the creation of a new entity. However, experienced analysts must deal with combining user views. In many aspects

Figure 6.15 Cascading subtypes with alternating exclusivities.

this has the opposite result of normalization in that entities will most likely be combined. Combining or joining entities typically occurs when users have separate views of the same data. A better way to comprehend this concept is to remember the lesson of the logical equivalent. Although this lesson focused on processes, we can try to redirect its point to the stored data model. First we must ask, can data elements that have been physically defined differently really be logically the same (or equivalent)? The answer is yes, and it occurs regularly during the analysis process. Let us now use an example to help illustrate this idea:

The Analyst met with Charles on the 15th floor. During the interview, a data store called Clients was created. The Clients data store was made up of the following data elements shown in Figure 6.18.

After the meeting, the Analyst then went to the 19th floor and visited with Mary of another department. During this interview a data store called Customers was created with the data elements listed in Figure 6.19.

In reality, these two entities are both part of the same object. Whether the entity name is Client or Customer, these entities must be combined. The difficulty

Figure 6.16 Supertype/subtype with subtype identifier element.

here is the combining of the identical elements versus those that need to be added to complete the full view of the entity. Charles and Mary, unbeknownst to each other, had similar but different views of the same thing. Their names for the objects were also different. By applying the concept of the logical equivalent, we determined that only one entity should remain (see Figure 6.20).

Finding that two or more entities are really identifying the same object may be difficult, especially when their names are not as similar as the ones used in the above example. It is therefore even more important that the analyst ensure the logical meaning of entities and their component elements. Note that we chose to call the combined entity “Customers” and added to it the unique elements not already stored. However, combining these user views raises a new and ugly issue: Why Customer, not Client? Charles and his staff may not care about the internal name of the entity, but may find the name Customer used in screens and reports to be unacceptable. What do we do? The answer is to provide what is called an Alias entry into the Data Dictionary. This will allow both names to point to the same element and allow each to be used in different screen programs and reports. The use of an Alias is not new, as it has existed as a feature in many programming languages such as COBOL for years. Its usefulness continues, and both Charles and Mary should remain happy. (But don’t tell

Figure 6.17 Fully normalized supertype/subtype ERD.

Figure 6.18 The data store for Clients.

Figure 6.19 The data store for Customers.

Figure 6.20 The combined data store for Clients and Customers.

Charles that the internal name is Customer!) Combining user views will always boost performance of the database, as it reduces the number of entities and the related links to make the connection.

Integration with Existing Models: Linking Databases

We have discussed the challenges of dealing with Legacy Systems. Most firms are approaching the replacement of legacy systems by phasing business area components into completely re-developed systems. As each business area is completed, there needs to be a “Legacy Link” with data files that connect business area components. This strategy allows a gradual porting of the entire system. The problem with the “Legacy Link” is that the normalized databases must interface and in many cases be dependent upon non-normalized files. This effectively upsets the integrity of the new component and may permanently damage the stored data in the new model. The linking of legacy applications is only one example of this problem. Often, subsidiary companies or locations must depend on data being controlled by less dependable master files. Therefore, linking databases may force analysts to rethink how to preserve integrity while still maintaining the physical link to other corporate data components. Let us use the following example to show how this problem arises:

The Analyst is designing a subsystem that utilizes the company’s employee master file. The subsystem needs this information to allocate employees to projects. Project information is never purged, and therefore the employee project file will contain employees who may no longer be active. Unfortunately, the company master deletes all terminated employees. The employee master must be used in order to ensure that new employees are picked up. The subsystem cannot modify any information on the company master. The ERD in Figure 6.21 depicts these relationships:

Note that the Employee Project entity has a one or zero relationship with the Employee Master entity. This simply means that there could be an employee that exists in the Employee Project entity that does not exist in the Employee Master. Not only does this violate normalization rules, it has a serious integrity problem. For example, if we wanted to print a report about the project and each participating employee, all employees who do not exist in the Employee Master will print blanks, since there is no corresponding name information in the master file. Is there an alternative that could provide integrity and normalization? The answer is yes. The subsystem needs to access the Company employee master and merge the file with an Employee Master subsystem version. The merge conversion would compare the two files and update or add new information and employees. It would not, however, delete terminated employees. Although this is an extra step, it maintains integrity, normalization, and most of all, the requirement not to modify the original Employee Master. The ERD would be modified as shown in Figure 6.22.

Figure 6.21 ERD showing an association between Employees and Projects.

Figure 6.22 ERD reflecting a legacy link to the Corporate Employee Master file.

The Corporate Employee Master and its relation to Employee Master is shown only for informational purposes. The Corporate Employee Master in effect becomes more of an application requirement rather than a permanent part of the ERD. It is important to understand that this solution may require the analyst to produce a list of existing projects in which terminated employees are needed. The first conversion from the Corporate Employee Master will not contain these terminated employees, and they will therefore have to be added directly to the subsystem Employee Master.

Referential Integrity

Referential integrity is a feature provided by most databases. The idea behind referential integrity is that it prevents users of the database from corrupting or providing inconsistent data into a table. Normalization, as discussed before, is the vehicle that ensures referential integrity in a database because it stops records from being entered into one table that is not linked to another one (assuming the two tables should be linked by a common data element). Referential integrity is implemented through the use of a foreign key.

So, by the above definition, a 3rd normal form logical model if implemented in the physical database, would establish referential integrity in the product. Everything would seem to be very simple then; however, the reality is that 3rd normal form physical databases are difficult to achieve. By achieve, I mean 100 %, for what is almost integrity? While this book provides all of the steps to accomplish 3rd normal form, there are a number of issues that block us from ever reaching the magical 100 % compliance:

1. Third normal form databases can have performance degradation in a physical implementation. The foreign keys are indexes, and indexes become incrementally slower as they become larger—so large systems tend to run into significant performance problems that necessitate violating the foreign key implementation of referential integrity.

2. Most applications today have not been created from scratch—they are legacy migrations from existing systems. As such, there tend to be a number of applications that contain the database rules within the application code. To change these applications can be a daunting if not overwhelming recoding effort that is likely not to receive either the money or the time requisite to complete such an overhaul effort. This results in new applications replicating the coding that exists and the database is left unprotected, especially when users write their own queries.

3. There is little compatibility of foreign key restraints across proprietary database products. The definition languages and uses of SQL (structured query language) vary among database vendors, and as a result maintaining 3rd normal form across multiple products is very challenging—particularly with respect to having the necessary in-house talent to understand all of the “flavors” of SQL. Furthermore, application vendors, that is, companies that produce products that work with databases, are careful not to be allegiant to any specific database vendor, because their clients have their own preference. As a result, these vendors want to make it as easy as possible for their applications to operate with the database that their client uses. Implementing referential integrity at the database level in these cases would create a maintenance nightmare for these application vendors. The result: most vendors and companies have tended to shy away from putting foreign key controls and restraints in their product because of portability issues.

Database Naming Conventions

How should analysts and designers determine the name of a physical table, field names, and keys in the database? It is important to avoid redundant names such as “Description” which might define a description attribute in a number of different physical databases. For example, tables can often have a “name” field, like the company name, or the contact name, etc. Each of these attributes in turn needs a descriptive field that explains more about the name. So analysts often create a field called “description” in each separate table, but this habit has serious consequences from a data dictionary perspective. Each “description” attribute when entered into the DD creates a replication of the same name—so it creates a confusing situation. Although many CASE products automatically prefix the name with the name of the table, e.g., “Customer.Description” it is important for the analyst/designer to control this with appropriate naming conventions that all engineers of systems can understand and follow. Thus, there are a number of guidelines for providing names—names that assist developers as well as users (particularly those who use SQL) to understand the meanings of various field names in the database.

For the purpose of this book, I will use the most popular Oracle naming conventions as an example of widespread and “standard” database naming conventions.

1. Table Names

Table names should be plural, should not contain spaces, separated by_underscores, and limited to 23 characters. If a table name contains multiple words, only the last word should be plural:

Customers

Customer_Applications

Customer_Application_Functions

2. Field Names

Fields should have a unique name in the database (as opposed to duplicate names in separate tables which is often allowed in most vendor database products). The Oracle field naming conventions are for fields to lead with two or three character contractions of the table name:

Customer_Options would have a field called CO_Vendor_name. Patient_Names would have a field called PN_Patient_Addresses. Vendors would have a field called VE_Telephone_Numbers.

Cases can occur where two or more tables have the same prefix. This can best be avoided by first being careful in the way the analyst names a table, thus avoiding the dilemma. However, in very large tables, let’s say one might consider concatenating another letter—so instead of Customer_Options as CO, the analyst can add another character: COP.

3. Primary Key Fields

Primary key fields should be identified by appending “_pk.” For example:

Customers would have a primary key field called CU_Customer_Id_pk.

Vendors would have a primary key field called VE_Vendor_Id_pk.

When a primary key is used as a foreign key in another table, it should be referred to as “_fk,” omitting “_pk” as follows:

Invoices might have a foreign key called IN_Customer_Id_fk.

In the case on concatenation of fields, forming a primary key, pk should be replaced by “_ck” as follows:

Customer_Id_ck

Customer_Id_Locations_ck

View Naming Conventions

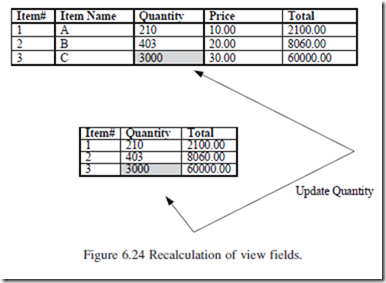

A view is a subset picture of a database table or physical database. During the design stage it is important to determine the number of subset views that may be required. Views can typically be associated with a screen program that is either updating or viewing data. The use of a view is essentially a design decision that has impact or performance. By creating subset views, less data and only data that is relevant from the larger table is stored. The view can best be seen in Figure 6.23.

The downside of a view is that it replicates physical data, and as such, requires more manipulation to ensure data integrity. Thus, if a view is used for update, then the changing of data must be re-applied to the superset table as follows:

Update Quantity in Item 3 to 3000 requires two updates Note that a recalculation to Total of 90000 is also required in both tables.

Therefore, one can take the position that views are best used when referential integrity is not necessary—as it would not be when just retrieving data for viewing only. For this reason, views can be very effective in data warehousing operations (see Figure 6.24).

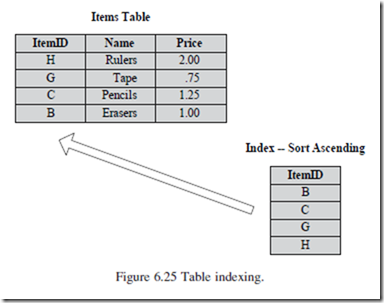

Indexing in Views

Once the logical database is designed by identifying the primary, secondary, and foreign keys in the logic data model, indexing can be used in the Design Stage to incorporate specific query activity in the physical database. Separate indexes allow for sorting without moving the physical data records as shown in Figure 6.25:

Physical indexes are produced either in the primary key (see 1st normal form failure primary key propagation) or as a foreign key (see 3rd normal form failure). The primary key indexes have certain naming conventions that are important for the analyst/designer using the format “idx_<TableName>_pk.” For example.

PATIENTS would have a primary key index called “idx_patients_pk.”

If the primary key is a series of concatenations, each sequential concatenation would be named as follows:

idx_patients_01 idx_patients_02 and so forth.

Foreign keys are typically called “constraints” and are represented as follows:

PATIENTS would have a foreign key called “fk_patients”, where “patients” is the name of the Table or TableName.

There are also some other conventions that apply to non-key attributes, partic- ularly those that carry a Boolean or Yes/No value. These non-key attributes usually end in “_yn”. For example:

acceptance_status_yn is an attribute that contains either a “yes” value or “no” value.

Field Length and Character Conventions

There are also maximum length naming conventions that are published and vary by database vendor. Figure 6.26 contains recommended maximum length names for databases created using IBM (DB2 database), Microsoft (SQLServer database), and Oracle (Gulutzan and Pelzer, 1999).

The comprising length from the above table appears to be 30, so I recommend that analysts use this number as their maximum assignment of any database names.

Allowable Characters in a Name

What are the acceptable characters that can be used to comprise a name field? Once again, the recommendations and limitations vary by database vendor as depicted in Figure 6.27 (Note: I have added MY_SQL, which is a popular open source database product):

In general, it is acceptable that the first characters of an attribute name can be a letter and that subsequent characters may contain digits or “_” (an underscore).

$, #, @, while allowed, may not be compatible across all database products.

Delimited Identifiers

Delimiters are used as a way to define the beginning and end of a field name. They are, in effect, the character that is allowed to act as the field that designates the beginning and end of such a field name. Delimiters are extremely useful when a named field includes spaces. The most popular delimiters are “”. For example:

Create table “art langer”

The above delimiter “” defines that the space between “t” and “l” is part of the table name. Figure 6.28 shows the requirements by database vendor:

Null Values

There are often many questions raised about what Null is. What does it mean to have a null attribute? Perhaps the first thing to discuss is what Null is not! Null is not a value, zero, or spaces. The definition of Null is “does not exist.” That is, a value is not provided or the value is unknown. Unfortunately, null is often misused by database professionals. Perhaps a good example of appropriate use of null is the field “Employee_Termination_Date.” This field would be defined as a date value. However, if an employee was currently employed, he/she would not have an “Employee_Termination_Date.” Therefore, the field will “not exist” for active employees. This requires the data definition of “Employee_Termination_Date” to allow for a null assignment since any date

value would be fictitious and actually create an integrity problem (by assigning a date that would likely be out of range or bogus).

Nulls should never be used as a key-field identifier, either in a primary, secondary, or foreign key. This would also suggest that “Employee_Termination_ Date” should never be used as a key-field identifier. Popular database products allow for the specification of a column to not permit null assignment. This feature obviously helps maintain data integrity by ensuring that an attribute in a row will always contain data. From a physical application perspective, this means that a user would be required to enter a value for a specified field in an entry screen.

Another complication of using null is the results that can be rendered when they are involved in complex database queries. While most advanced database products handle queries on nulls, the Boolean results from these queries can vary and result in unexpected output and aggregations. The details of how this occurs are beyond the scope of this book, but I suggest care when using them for database reporting needs.

Denormalization

Because of referential requirements, many databases need to be denormalized. At the beginning of this chapter, I identified that denormalization was the eighth and final step in the LDM. Denormalization is a reality, but a process that needs to be carefully measured in the exposure it creates for integrity—they just go hand-in-hand together.

Analysts/designers who consider denormalizing should weigh the value between time vs. space trade-offs. Normalized databases are not designed to minimize access time, and as a result they do not perform particularly efficiently when using tables to perform simple tasks like printing reports. This is especially true when such reports need data from multiple tables that are connected by normalized links like foreign keys. For example, if a report analyzing Order Items was required by a user, the normalized database would have the following tables linked in Figure 6.29:

Thus, the report would need to access both tables for each search—causing degradation in access times. Alternatively, a denormalized version would only have one table as shown in Figure 6.30:

This table concatenates Order with Item and eliminates the link. While this denormalized architecture has great advantages in the identified application, it can also have the opposite advantage if the report just needed to list each Order number. This technique is often called combining tables. In this case, the application would need to read every record and contain logic that would print the next Order Number that did not match the prior one. So as one can see by this example, electing denormalization is very tricky indeed. An alternative approach would be to create a data warehouse which maintains the integrity, but produces table structures for reporting only applications. This is covered more in-depth in Chapter 13, Business Process Re-Engineering.

So while time may be enhanced via denormalization, space may suffer. Given that denormalization creates replication of data, there are more records stored and therefore more space required. While normalized databases need significant storage during data manipulations, that is, producing a report, this space need is temporary, meaning that the space is released after the program completes. With denormalized databases, the storage is permanent because the data is stored in a more “report-ready” mode. Because storage is much cheaper today than in the past, many database analysts are not concerned with data storage and tend to ignore the consequences in the design stage. Those consequences usually relate to longer backup and restore times, and the need for multiple disk arrays to handle massive databases that span multiple physical hardware devices. Multiple device addressing will inevitably also cause time degradation, so as you can see excessive space requirements may ultimately negate what analysts/designers are attempting to originally improve.

Analysts and designers must ultimately ask themselves what denormalization can do for them. Below are some key questions that should be asked before considering using denormalization:

• Are their alternative ways to achieve better performance than denor- malizing tables?

• Will denormalization get the database to the objective performance level?

• What reliability and data quality exposure will occur from the denormalization and are those exposures acceptable and understood by all stakeholders?

The answers to the above questions may provide important guidance to what the analysts should recommend in their functional analysis and design of the database. Given that performance is a key driver to denormalization decisions, the following indicators may also provide useful guidelines for making effective decisions (Mullins, 2006):

1. A number of queries and reports heavily rely on data from more than one database.

2. Multiple repeating groups (e.g., ORDER—ITEMS as shown above) exist and there are many queries and reports that need to show them together.

3. Many calculations are needed across multiple tables before a query can be completed (that is, the query must first do the calculation before a report can be produced).

4. Multiple access to specific tables is required by many different users for different operations.

5. Large concatenated keys become so large that foreign key connections become lengthy.

Logic to Physical Databases

This chapter thus far has covered the analytics and complexities of designing a logical database schema. Features of the logical model have been:

1. Entities and relationships.

2. Definitions of attributes within entities.

3. The assignment of primary and secondary keys.

4. Foreign key definition in 3rd NF.

5. Normalization.

6. Supertype/Subtype relationships.

At the above level, analysts do the best they can to describe data generally, that is, without regard to how the database will “physically” be implemented. There is a need, however, to specify the logic model in terms of its physical implementation. By physical we mean the actual real database that the schema will be implemented in—so once the analysts know the physical database (this “knowing” will vary, but is often known early in the analysis process), then they need to provide a “physical” data model which should include:

1. Specification of all logical tables and columns to their actual database specification.

2. Identified actual foreign key names and link conventions.

3. Denormalization needs based on physical database constraints or limitations which cause the ERD to become very different than originally constructed.

Therefore, the analysts need to specify how the LDM will be transformed into an actual database schema. This transformation, as with all analytics, must provide an audit trail as to how the analyst made the decisions. This audit trail requires the following four steps:

1. Convert the logical entities into actual database tables.

2. Convert logical relationships into actual product-based foreign-key links often called “constraints.”

3. Convert attributes into physical columns.

4. Modify the physical data model the formats required by the database product.

Notwithstanding the possibility of differences between the logical and physical database, the further the analysts drifts from the LDM, the increased risks of integrity exposures. Perhaps the most important statements about this dilemma have been published in March 2002 by Fabian Pascal. Pascal published what he called the “first five quotes” as follows:

1. “The more you drift away from any physical implementation, the more performance is going to suffer ... The choice is between best logical structure or best physical structure, or a compromise.”

This is pure falsehood. The performance of a database is based on many things, including how the data is stored, access paths, and hardware configuration to name a few—yet many believe the above statement.

2. “Ironically, there is really no difference between a document and a database – In both cases, you have to abstract information and a certain amount of metadata that helps the system understand the meaning and uses of that extracted information ... XML could put an end to that by breaking down the traditional barriers between document and database processing. Interactive Web applications have characteristics of both ... Running an auction on a Web site is a massive database challenge ... But it’s also a massive document processing challenge, because you have to offer all the descriptions of all the products and so on.”

Once again, there is no logic to this statement. A database is much different than a document and has more complex archi- tecture and logic in the way it is designed. Overgeneralization about database relationships with Web-enabled infrastructure can be very misleading.

3. “I am designing a database system but I am a little unsure about normalization–could anyone tell me if the information is in third normal form? If it isn’t could anyone suggest where I’ve gone wrong?"

Normalization is a mathematical process of functional decomposition. One cannot just look at the tables, but needs

to understand the definition of dependencies as I have outlined earlier in this chapter. The only way is to apply the formula.

4. “I am having difficulty creating a table with one of the columns in a composite primary key being NULL[able]. Example: table ABC with columns in the PK ’ A’ NOT NULL, ’ B’ NOT NULL, and ’ C’ NULL. Logically, I have a valid business reason for wanting to implement a table with at least one column of the compound PK being nullable.”

By definition you should never have a Null key—it simply makes no sense.

5. “In creating a database, normalization is the process of organizing it into tables in such a way that the results of using the database are always unambiguous and as intended. Normalization may have the effect of duplicating data within the database and often results in the creation of additional tables. (While normalization tends to increase the duplication of data, it does not introduce redundancy, which is unnecessary duplication.) Normalization is usually a refinement process after the initial exercise of identifying the data objects that should be in the database, identifying the relationships and defining the tables required and the columns within each table.”

Nothing could be further from the truth. Normalization does not propagate redundancy, but rather removes it. All links are accomplished through pointers or indexes which provide the referential integrity needed in complex database architec- tures.

In summary, the most important aspect of the physical model has everything to do with the actual database product and the hardware being used, as opposed to false generalizations of things that must be changed from the logical model. There is also confusion about the term “conceptual model.” The conceptual model is a view of the data from a business perspective or view. This model is designed so that the user community can understand how the database is being constructed and can comment on its accuracy, especially with regard to relations of entities. However, the conceptual model is not an architecture that can be implemented. For example, the conceptual model allows many-to-many relationships to be depicted because it is not an engineering model—so that showing these types of relationships helps users understand and agree to the way the entities relate to each other. Thus the logical model is formed from the conceptual design but employs the rules of information science. The physical model is then transformed based on the vendor requirements for implementation and hardware features where the database will ultimately reside and the way indexes and keys are actually constructed by the product itself.

Data Types Usage and Conventions

There are a number of alphanumeric data types to use when defining an attribute or column in a table. Varchar2, which was used earlier in this chapter, is the preferred alphanumeric data type for alphanumeric fields. The main benefit of Varchar2 over Char is that Char will store blanks in characters not being used—therefore actually using more storage. For example, Name is defined as 30 varying length characters, thus meaning that its value could be from 1 to 30 characters in length. If this field is defined as Varchar2 and a value of 20 characters is stored, then only 20 characters of space will be used. On the other hand, if the same field was defined as Char 30, then the same example would require 30 characters; 20 of actual data definition, and 10 of blanks to fill the definition field.

However, the use of Char is important when logical comparisons are made between fields where trailing blanks are not considered as part of the comparison (this is an American National Standard Institute (ANSI) requirement. In these cases using Char is more efficient because the comparisons are accomplished on the same size fields. A comparison is commonly used in sorting data for example. If trailing blanks are considered in the comparison, then the Char definition is not viable. Figure 6.31 shows a comparison of ANSI standards with Oracle.

Business Rules

The growth of the relational model has created a mechanism for storing certain application logic at the database level. Business rules are application logic that enforces the integrity of the business, that is, that maintains the rules as set forth by the users. Such rules could include: If Last_Name is entered, the First_Name must also be entered. This “rule” simply means that a valid name must contain both the first and last name of the individual being entered into the database. These business rules were traditionally part of the application program code. This meant that every program that would need to

enforce a rule would need to encapsulate the same logic within each application program. What followed historically was a maintenance nightmare which required programmers to remember where the logic had been used, especially when changes to the code were required. In addition, there was always the issue of re-coding the same logic without error, so it meant greater testing time. Although there were and are techniques for storing common code in global libraries for applications to incorporate into the code, the procedures tended to be archaic and awkward to support. The establishment of SQL as a robust and end-user query tool also posed a new problem. Business rules coded in applications can enforce them only if the program is executed. Because SQL allows users to create and execute query sessions, they can easily avoid an applications enforcement of a business rule. This therefore created a serious integrity problem in the implementation of database products. Although the tendency in the industry has been to separate data from applications, we will see here that the industry is moving back towards combining data and applications again. It is important not to view this as a return to the old way, but rather as a more intelligent and structured way to combine data with its permanent logic. The word permanent is crucial: certain logic is really an inherent part of the relationship that elements have with other elements. Having business rules stored as part of the data, then, allows anyone to use the information without violating the permanent relationship rules as set forth by the business. It means that SQL users can query all they want or even create and modify data without losing the controls necessary to support integrity.

Business rules are implemented at the database level via stored procedures. Stored procedures are implemented by each database manufacturer, and although they are similar, they are not the same as business rules. Therefore, moving stored procedures from one database to another is not trivial. Why do we care? Networks are being built around the concept of client/server computing and may often require communication among many different database vendor systems. If business rules are to be implemented at the database level, the compatibility and transportability of such code becomes a challenge. This issue will be discussed in greater detail throughout the later chapters of this book.

Business-rule implementations fall into three categories: keys, domains, and triggers. Key business rules are concerned with the behavior of a primary key in an entity. They include the rules that can affect the insertion, deletion, and updating of primary and foreign keys. For example, if an order is deleted, all order items must also be deleted automatically. Many people call this feature referential integrity. Domains represent the constraints related to an attribute’s range of values. If an attribute (key or non-key) can have a range of values from 1 to 10, we say that range is the domain value of the attribute. This is, of course, very important information to be included and enforced at the database level through a stored procedure. The third and most powerful business rule is triggers.

Triggering Operations

Triggers are defined as stored procedures that, when activated, “trigger” one or a set of other procedures to be executed. Triggers usually act on other entities, although in many databases such as Oracle, triggers are becoming powerful programming tools to provide significant capabilities at the database level. In many ways they represent their own programming languages and allow embedded SQL code to be used in the stored procedure. Stored procedures resemble BAT files in DOS and are actually implemented as an option in many RDBMS packages. Below is an example of an Oracle 8 trigger:

This trigger is designed to allow a company’s information to be marked as confidential only by the President. This means that the president of the company can enter information that only he or she can see. The second part of the trigger is set to allow certain executives to mark their contacts with companies as private. Here we see two sets of application logic that will execute via Oracle triggers. In addition, it will be enforced by the database regardless of how the information is accessed.

Too much power can be a problem, however, and it can cause difficulties with triggers. Why? Because triggers can initiate activity among database files, designers must be careful that they do not cause significant performance problems. For example, let’s say a trigger is written which accesses 15 database files. If this trigger is initiated during a critical processing time in the firm, major problems with productivity could result. Once again, the good and the bad!

The subject of business rules is broad but very specific to the actual product implementation style. Since analysts should remain focused on the logical model, it is important for them to define the necessary key business rules, domains and triggers required by the system. It should not be their responsibility to implement them for a specific Relational Database Management Software (RDBMS) product.

Problems and Exercises

1. What is logic data modeling trying to accomplish?

2. Define Normalization. What are the three normal forms?

3. What does normalization not do?

4. What is meant by the term “derived” data element?

5. Describe the concept of combining user views. What are the political ramifications of doing this in many organizations?

6. What are legacy links? Describe how they can be used to enforce data integrity.

7. Name and define the three types of business rules.

8. Why are stored procedures in some ways a contradiction to the rule that data and processes need to be separated?

9. What are the disadvantages of database triggers?

10. What is meant by denormalization? Is this a responsibility of the analyst?

11. Define a supertype/subtype. When should this entity construct by used?

Mini-Project #1

The Physician Master File from a DFD contains the following data elements:

Assumptions

1. A physician can be associated with many hospitals, but must be associated with at least one.

2. A physician can have many specialties, or have no specialty.

Assignment

Normalize to 3rd normal form.

Mini-Project #2

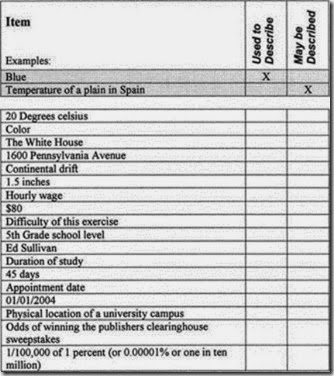

1. Check the appropriate column that best identifies the use of each named item as to whether it describes an element or whether it is an element.

2. For the following list of named entity types, provide a natural and unique identifier that provides significant information. Where no natural unique indentifier exists, create a new one and describe it

Exercise provided by Greg Vimont.

Mini-Project #3

Crow’s-Foot Exercise

1. (a) What is the total number of possible pairings of crow’s-foot indicators?

How many mirror image pairs exist in these possible combi- nations (like matching bookends)?

(b) How many unique pairs exist? And if you didn’t count the reversed version of each unique pairing, how many are there?

Hint: Use this table diagram of crow’s-foot indicators to plot all of the possible instances.

2. Draw the crow’s-foot cardinality for the following situation:

A1 Travel takes reservations from clients and provides them with travel arrangements on common carriers throughout the world. For some reservations, tickets are never issued. Reservations can be made and tickets can be issued for them at a later time. Sometimes, when reservations are made one or more tickets are issued simultaneously. A ticket may fulfill only a single reservation. Tickets, once issued, are never reissued to fulfill anything other than the original reservation.

(3) Draw the crow’s-foot cardinality for the following situation:

The Party Palace is a catering facility that hosts many types of social engagements. One type of event is the dinner party. Guests and their respective dates are normally issued seating cards with table assignments. Every guest will have a seating assignment. Not every guest will have a date. Dates may not be unaccompanied, and they must accompany only one guest.

4. Based on the following business case, draw an entity relation diagram.

You need only to name the entities; no keys or attributes are required.

(a) In the operation of its business, Top-Flight Limo Service records information about drivers who chauffeur the company’s patrons. Drivers must own and drive their own cars. A driver may own more than one vehicle. Top-Flight Limo tracks driver traffic citations during the time of their employment with the company. Drivers begin employment with a clean slate.

(b) In the ERD that you have created, what is the functional significance of “optional” vs. “required” as it is applied to business rules in implementing a database and populating it with data?

Exercise provided by Greg Vimont.

Comments

Post a Comment