
Process-Based Tools

Data Flow Diagrams

Chapter 4 discussed the concept of the logical equivalent, justifying and defining it by showing a functional decomposition example using data flow diagrams (DFD). In this section, we will expand upon the capabilities of the DFD by explaining its purpose, advantages and disadvantages (described later as the Good, the Bad and the Ugly) and most important: how to draw and use it.

Purpose

Yourdon’s original concept of the DFD was that it was a way to represent a process graphically. A process can be defined as a vehicle that changes or transforms data. The DFD therefore becomes a method of showing users, strictly from a logical perspective, how data travels through their function and is transformed. The DFD should be used in lieu of a descriptive prose representation of a user’s process. Indeed, many analysts are familiar with the frustrations of the meeting where users attend to provide feedback on a specification prepared in prose. These specifications are often long descriptions of the processes. We ask users to review them, but most will not have had the chance to do so before the meeting, and those who have may not recollect all the issues. The result? Users and analysts are not in a position to properly address the needs of the system, and the meeting is less productive than planned. This typically leads to more meetings and delays. More important, the analyst has never provided an environment in which the user can be walked through the processes. Conventional wisdom says that people remember 100% of what they see, but only 50% of what they read. The graphical representation of the DFD can provide users with an easily understood view of the system.5

The DFD also establishes the boundary of a process. This is accomplished with two symbols: the terminator (external) and the data store. As we will see, both represent data that originate or end at a particular point.

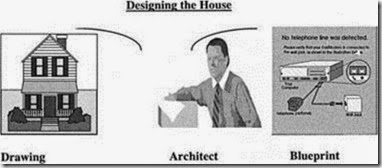

The DFD also serves as the first step toward the design of the system blueprint. What do we mean by a blueprint? Let us consider the steps taken to build a house. The first step is to contact a professional who knows how to design. We call this individual an architect. The architect is responsible for listening to the homebuyer and drawing a conceptual view of the house that the homebuyer wants. The result is typically two drawings. The first is a prototype drawing of the house, representing how the house will appear to the homebuyer. The second is the blueprint, which represents the engineering requirements. Although the homebuyer may look at the blueprint, it is meant primarily for the builder or contractor who will actually construct the house. What has the architect accomplished? The homebuyer has a view of the house to verify the design, and the builder has the specifications for construction.

Let us now translate this process to that of designing software. In this scenario, the analyst is the architect and the user is the homebuyer. The meeting between the architect and the homebuyer translates into the requirements session, which will render two types of output: a business requirements outline and prototype of the way the system will function, and a schematic represented by modeling tools for programmers. The former represents the picture of the house and the latter the blueprint for the builder. Designing and building systems, then, is no different conceptually from building a house. The DFD is one of the first—if not the first—tools that the analyst will use for drawing graphical representations of the user’s requirements. It is typically used very early in the design process when the user requirements are not clearly and logically defined.

Figures 5.1 and 5.2 show the similarities between the functions of an architect and those of an analyst.

Figure 5.1 The interfaces required to design and build a house.

Figure 5.2 The interfaces required to design and build a system.

How do we begin to construct the DFD for a given process? The following five steps serve as a guideline:

1. Draw a bubble to represent the process you are about to define.

2. Ask yourself what thing(s) initiate the process: what is coming in?

You will find it advantageous to be consistent in where you show process inputs. Try to model them to the left of the process. You will later be able to immediately define your process inputs when looking back at your DFD, especially when using them for system enhancements.

3. Determine the process outputs, or what things are coming out, and model them to the right of the process as best you can.

4. Establish all files, forms or other components that the process needs to complete its transformation. These are usually data stores that are utilized during processing. Model these items either above or below the process.

5. Name and number the process by its result. For example, if a process produces invoices, label it “Create Invoices.” If the process accomplishes more than one event, label it by using the “and” conjunction. This method will allow you to determine whether the process is a functional primitive. Ultimately, the name of the process should be one that most closely associates the DFD with what the user does. Therefore, name it what the user calls it! The number of the process simply allows the analyst to identify it to the system and, most important, to establish the link to its child levels during functional decomposition.

Let us now apply this procedure to the example below:

Vendors send Mary invoices for payment. Mary stamps on the invoice the date received and matches the invoice with the original purchase order request. Invoices are placed in the Accounts Payable folder. Invoices that exceed thirty days are paid by check in two-week intervals.

Step 1: Draw the bubble (Figure 5.3)

Figure 5.3 A process bubble.

Step 2: Determine the inputs

In this example we are receiving an invoice from a Vendor. The Vendor is considered a Terminator since it is a boundary of the input and the user cannot control when and how the invoice will arrive. The invoice itself is represented as a data flow coming from the Vendor terminator into the process as shown in Figure 5.4:

Figure 5.4 Terminator sending invoice to the process.

Step 3: Determine the outputs of the process

In this case the result of the process is that the Vendor receives a check for payment as shown in Figure 5.5:

Figure 5.5 DFD with output of check sent to vendor.

Step 4: Determine the “processing” items required to complete the process

In this example, the user needs to:

• match the invoice to the original purchase order;

• create a new account payable for the invoice in a file; and

• eventually retrieve the invoice from the Accounts Payable file for payment.

Note that in Figure 5.6 the Purchase Order file is accessed for input (or retrieval) and therefore is modeled with the arrow coming into the process. The Accounts Payable file, on the other hand, shows a two-sided arrow because entries are created (inserted) and retrieved (read). In addition, arrows to and from data stores may or may not contain data flow names. For reasons that will be explained later in the chapter, the inclusion of such names is not recommended.

Figure 5.6 DFD with interfacing data stores.

Step 5: Name and number the process based on its output or its user definition.

Figure 5.7 Final named DFD.

The process in Figure 5.7 is now a complete DFD that describes the event of the user. You may notice that the procedures for stamping the invoice with the receipt date and the specifics of retrieving purchase orders and accounts payable information are not explained. These other components will be defined using other modeling tools. Once again, the DFD reflects only data flow and boundary information of a process.
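The five steps can also be captured in a small data structure, which is sometimes a convenient way to check a diagram before drawing it. The following is a minimal sketch only: the class `Process`, the helper `terminators`, the flow name `Vendor_Check` and the store labels are hypothetical names chosen for illustration, and the process name is taken from the caption of Figure 5.8.

```python
from dataclasses import dataclass, field


@dataclass
class Process:
    number: str                                   # identifies the bubble, e.g. "P1"
    name: str                                     # named by its result, in the user's words
    inputs: list = field(default_factory=list)    # (source terminator, flow name)
    outputs: list = field(default_factory=list)   # (flow name, destination terminator)
    stores: dict = field(default_factory=dict)    # data store -> "read" or "read/write"


# Step 1 and Step 5: the bubble, with its number and name.
p1 = Process(number="P1", name="Record and Pay Invoices")

# Step 2: the Vendor terminator sends the invoice into the process.
p1.inputs.append(("Vendor", "Vendor_Invoice"))

# Step 3: the check goes back to the Vendor (flow name is an assumption).
p1.outputs.append(("Vendor_Check", "Vendor"))

# Step 4: one store is only read, the other is both written and read.
p1.stores["Purchase Order"] = "read"
p1.stores["Accounts Payable"] = "read/write"


def terminators(process):
    """Return the externals that bound the process on either side."""
    sources = {source for source, _flow in process.inputs}
    sinks = {sink for _flow, sink in process.outputs}
    return sources | sinks
```

Note that nothing in this structure records how the invoice is stamped or matched; as with the diagram itself, only flows and boundaries are represented.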

The DFD in Figure 5.7 can be leveled further to its functional primitive. The conjunction in the name of the process can sometimes help analysts to discover that there is actually more than one process within the event they are modeling. Based on the procedure outlined in Chapter 4, the event really consists of two processes: Recording Vendor Invoices and Paying Vendor Invoices. Therefore, P1 can be leveled as shown in Figure 5.8.

Figure 5.8 Leveled DFD for Record and Pay Invoices process.
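The naming rule from step 5 lends itself to a rough mechanical check. The sketch below treats the word “and” in a process name as a hint that the process may need leveling; it is a heuristic, not a substitute for the analyst's judgment. The function names and the dotted child numbering (P1.1, P1.2) are assumptions made for illustration.

```python
import re


def candidate_children(process_name):
    """Split a process name on the word 'and' as a hint for leveling."""
    parts = re.split(r"\s+and\s+", process_name.strip(), flags=re.IGNORECASE)
    return [part.strip() for part in parts if part.strip()]


def looks_like_functional_primitive(process_name):
    """A name with no 'and' conjunction yields a single candidate."""
    return len(candidate_children(process_name)) == 1


def level(number, process_name):
    """Assign hypothetical child numbers to each candidate child process."""
    children = candidate_children(process_name)
    return {f"{number}.{i}": child for i, child in enumerate(children, start=1)}
```

Applied to “Record and Pay Invoices,” the split suggests two children, which the analyst would then rename in the user's own terms (for example, Recording Vendor Invoices and Paying Vendor Invoices).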

Advantages of the DFD

Many opponents of the DFD argue that this procedure takes too long. You must ask yourself: What’s the alternative? The DFD offers the analyst several distinct advantages. Most fundamentally, it depicts flow and boundary. The essence of knowing boundary is first to understand the extent of the process prior to beginning to define something that may not be complete or accurate. The concept is typically known as top-down, but the effort can also be conceived as step-by-step. The analyst must first understand the boundary and flows prior to doing anything else. The steps following this will procedurally gather more detailed information until the specification is complete.

Another important aspect of the DFD is that it represents a graphical display of the process and is therefore a usable document that can be shown to both users and programmers, serving the former as a process verification and the latter as the schematic (blueprint) of the system from a technical perspective. It can be used in JAD sessions and as part of the business specification documentation. Most important, it can be used for maintaining and enhancing a process.

Disadvantages of the DFD

The biggest drawback of the DFD is that it simply takes a long time to create: so long that the analyst may not receive support from management to complete it. This can be especially true when there is a lot of leveling to be performed. Therefore, many firms shy away from the DFD on the basis that it is not practical. The other disadvantage of the DFD is that it does not model time-dependent behavior well; that is, the DFD is based on very finite processes that typically have a very definable start and finish. This, of course, is not true of all processes, especially those that are event driven. Event-driven systems will be treated more thoroughly when we discuss State Transition Diagrams later in this chapter.

The best solution to the time-consuming leveling dilemma is to avoid it as much as possible. That is, you should avoid starting at too high a summary level and try to get to the functional primitive as soon as you can. If in our example, for instance, the analyst had initially seen that the DFD had two processes instead of one, then the parent process could have been eliminated altogether. This would have resulted in an initial functional primitive DFD at Level 1. The only way to achieve this efficiency on a consistent basis is through practice. Practice will eventually result in the development of your own analysis style or approach. The one-in, one-out principle of the logical equivalent is an excellent way of setting up each process. Using this method, every event description that satisfies one-in, one-out will become a separate functional primitive process. Using this procedure virtually eliminates leveling. Sometimes users will request to see a summary-level DFD. In these cases the functional primitives can be easily combined or “leveled-up.”

Process Flow Diagrams

While the data flow diagram provides the analyst with the LE of a process, it does not depict the sequential steps of the process, which can be an important piece of information in analyzing the completeness of a system. Understanding the sequence of steps is especially important in situations where the information gathered from users is suspect or possibly inaccurate. A process flow diagram (PFD) can reveal processes and steps that may have been missed, because it requires users to go through each phase of their system as it occurs in its actual sequence. Having users go through their process step by step can help them remember details that they might otherwise have overlooked. Therefore, using process flows provides another tool that can test the completeness of the interview and LE.

Because of this advantage of the PFD, many methodologies, such as the James Martin technique,6 suggest that analysts should always use PFDs instead of DFDs. However, it is my opinion that these two models not only can coexist but may actually complement each other. This section will focus on how a process model is implemented and balanced against a DFD.

What Is a PFD?

PFDs were derived originally from flowcharts. Flowcharts, however, while they are still valuable for certain diagramming tasks, are too clumsy and detailed to be an effective and structured modeling tool. The PFD represents a more streamlined and flexible version of a flowchart. Like a DFD, the PFD suggested here contains processes, externals, and data flows. However, PFDs focus on how externals, flows, and processes occur in relation to sequence and time, while DFDs focus only on the net inputs and outputs of flows and the disposition of data at rest.

The diagram on the following page is an example of a process flow.

Figure 5.9 Sample process flow diagram.

The above figure shows how a PFD depicts flows from one task to another. As with a DFD, externals are shown by a rectangle. Flows are labeled and represent information in transition. Unlike DFDs, process flows do not necessarily require labeling of the flow arrows, and the processes themselves reflect descriptively what the step in the system is attempting to accomplish, as opposed to necessarily defining an algorithm.

Perhaps the most significant difference between a DFD and a process flow is in its integration as a structured tool within the data repository. For example, a labeled data flow from a DFD must create an entry into the data dictionary or repository. Flow arrows from PFDs do not. Furthermore, a functional primitive data flow points to a process specification; no such requirement exists in a process flow. Figure 5.10 represents a DFD depiction of the same process shown in Figure 5.9 above.

Figure 5.10 PFD in DFD format.

Note that the PFD and the DFD share the same boundaries of externals (i.e., Marketing and Management). The DFD flows, however, must be defined in the data dictionary or repository and therefore contain integrated formulas. The other notable difference lies in the interface of the data store “Design Information,” which will eventually be defined as an entity and decomposed using logic data modeling techniques.

What are the relative benefits of using a PFD and a DFD? The answer lies in the correspondence between the process rectangle and the DFD process bubble. Process rectangles actually represent sequential steps within the DFD. They can be compared to an IF statement in pseudocode or a simple statement to produce a report or write information to a file. The PFD, in a sense, can provide a visual depiction of how the process specification needs to be detailed. One functional primitive DFD can thus be related to many process rectangles.

Based on the above analysis, therefore, a PFD can be justified as a tool in support of validating process specifications from within a DFD. In addition, the PFD might reveal a missing link in logic that could result in the creation of a new DFD. Based on this argument, the process flow can be balanced against a DFD and its functional primitives. Finally, the PFD has the potential to provide a graphic display of certain levels of logic code. The corresponding PFD for the DFD shown in Figure 5.8 is illustrated in Figure 5.11.

Figure 5.11 PFD and DFD cross-referenced.

Sequence of Implementation

If both a DFD and a PFD are to be created, which should be done first? While the decision can be left to the preference of the analyst, it is often sensible to start with the DFD. A DFD can be created very efficiently, and it is useful to be able to compare the DFD children against the sequential flow of the PFD. Creating the DFD first also supports the concept of decomposition, since the PFD will inevitably relate to the process specification of a functional primitive DFD. While this approach seems to be easier in most cases, there is also justification for creating a PFD first, especially if an overview flow diagram provides a means of scoping the boundaries of an engineering process.

Data Dictionary

The data dictionary (DD) is quite simply a dictionary that defines data. We have seen that the data flow diagram represents a picture of how information flows to and from a process. As we pick up data elements during the modeling design, we effectively “throw” them into the data dictionary. Remember, all systems are comprised of two components: Data and Processes. The DD represents the data portion.

There is a generic format followed by analysts, called DD notation. The conventional notations are listed below:

Equivalence (=):

The notion of equivalence does not express mathematical equality but rather indicates that the values on the right side of the equal sign represent all the possible components of the element on the left.

Concatenation (+):

This should not be confused with or compared to “plus”; it is rather the joining of two or more components. For example: Last_Name + First_Name means that these two data elements are being pieced together sequentially.

Either/Or with Option Separator ([ / ]):

Means “one of the following” and therefore represents a finite list of possible values. An example would include the definition of Country = [USA/Canada/United Kingdom/etc.].

Optional ():

The value is not required in the definition. A very common example is middle initial: Name = Last_Name + First_Name + (Middle_Init).

Iterations of { }:

The value between the separators occurs a specific or infinite number of times. Examples include:

1{ X }5: The element “X” occurs from one to five times.

1{ X }: The element “X” occurs from one to infinity.

3{ X }3: The element “X” must occur three times.

Comments (**):

Comments are used to describe the element or point the definition to the appropriate process specification should the data element not be definable in the DD. We will explain this case later in this section.
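One way to get a feel for the notation is to translate it into something executable. The sketch below maps each DD operator onto a regular expression fragment so that sample values can be tested against a definition. This mapping is an illustration only, not part of DD notation; the helper names (`seq`, `either`, `optional`, `iterate`, `matches`) and the character classes `ALPHA` and `DIGIT` are assumptions.

```python
import re

ALPHA = "[A-Za-z]"   # stands in for an alphabetic elementary element
DIGIT = "[0-9]"      # stands in for a numeric elementary element


def seq(*parts):
    """Concatenation (+): the parts follow one another in order."""
    return "".join(parts)


def either(*options):
    """Either/Or [ a / b / c ]: exactly one of a finite list of literal values."""
    return "(?:" + "|".join(re.escape(option) for option in options) + ")"


def optional(part):
    """Optional ( ): the part may be absent."""
    return "(?:" + part + ")?"


def iterate(part, low, high=None):
    """Iteration low{ part }high; high=None leaves the upper limit open."""
    upper = "" if high is None else str(high)
    return "(?:" + part + "){" + str(low) + "," + upper + "}"


def matches(definition, value):
    """True when the whole value satisfies the definition."""
    return re.fullmatch(definition, value) is not None


# Country = [USA/Canada/United Kingdom]
COUNTRY = either("USA", "Canada", "United Kingdom")

# A two-letter code followed by an optional digit (purely illustrative).
CODE = seq(iterate(ALPHA, 2, 2), optional(DIGIT))
```

For instance, `matches(iterate("X", 1, 5), "XXX")` holds, while six repetitions of “X” fall outside the 1{ X }5 limits.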

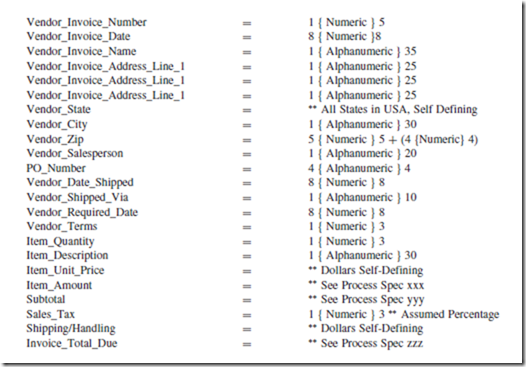

Now that we have defined the components of the DD, it is important to expand on its interface with other modeling tools, especially the DFD. If we recall the example used earlier to describe drawing a DFD, the input data flow was labeled “Vendor_Invoice” (see Figure 5.4). The first DD rule to establish is that all labeled data flow names must be defined in the DD. Therefore, the DFD interfaces with the DD by establishing data element definitions from its labeled flows. To define Vendor_Invoice, we look at the physical invoices and ascertain from the user the information the system requires. It might typically be defined as follows:

The above example illustrates what a vendor invoice would usually contain and what is needed to be captured by the system. Note that Vendor_Invoice is made up of component data elements that in turn need to be defined in the DD. In addition, there is a repeating group of elements { Item_Quantity + Item_Description + Item_Unit_Price + Item_Amount } that represents each line item that is a component of the total invoice. Since there is no limit to the number of items that could make up one invoice, the upper limit of the iterations clause is left blank and therefore represents infinity. Figure 5.12 is a sample of how the physical invoice would appear.

It should be noted that many of the DD names have a qualifier of “Vendor” before the field name. This is done to ensure uniqueness in the DD as well as to document where the data element emanated from the DFD. To ensure that all data elements have been captured into the DD, we again apply functional decomposition, only this time we are searching for the functional primitive of the DD entry. We will call this functionally decomposed data element an Elementary Data Element. An Elementary Data Element is therefore an element that cannot be broken down any further. Let us functionally decompose Vendor_Invoice by looking to the right of the definition and picking up the data elements that have not already been defined in the DD. These elements are:

The following rules and notations apply:

1. A self-defining definition is allowed if the data definition is obvious to the firm or if it is defined in some general documentation (e.g., Standards and Reference Manual). In the case of Vendor_State, an alternative would be: Vendor_State = [AL/NY/NJ/CA/..., etc.] for each state value.

2. Data elements that are derived as a result of a calculation cannot be defined in the DD but rather point to a process specification where they can be properly identified. This also provides consistency in that all calculations and algorithms can be found in the Process Specification section.

3. Typically values such as Numeric, Alphanumeric, and Alphabetic do not require further breakdown unless the firm allows for special characters. In that case, having the exact values defined is appropriate.

4. An iteration definition, where the minimum and maximum limit are the same (e.g., 8 { Numeric } 8), means that the data element is fixed (that is, must be 8 characters in length).

The significance of this example is that the DD itself, through a form of functional decomposition, creates new entries into the DD while finding the elementary data element. A good rule to follow is that any data element used in defining another data element must also be defined in the DD. The DD serves as the center for storing these entries and will eventually provide the data necessary to produce the stored data model. This model will be produced using logic data modeling (discussed in Chapter 6).
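The rule that every element used in a definition must itself be defined can be checked mechanically. The following sketch scans a small dictionary for names that appear on the right side of a definition but have no entry of their own. The dictionary fragment is hypothetical: `Vendor_Invoice_Number` and the individual definitions are assumptions made for illustration, and only the line-item names and `Vendor_State` come from the text.

```python
import re

# Hypothetical DD fragment; definitions are written in DD notation as strings.
DD = {
    "Vendor_Invoice": (
        "Vendor_Invoice_Number + Vendor_State + "
        "1{ Item_Quantity + Item_Description + Item_Unit_Price + Item_Amount }"
    ),
    "Vendor_Invoice_Number": "8{ Numeric }8",         # fixed length of 8
    "Vendor_State": "Vendor_State",                   # self-defining (rule 1)
    "Item_Quantity": "1{ Numeric }5",
    "Item_Amount": "** see process specification **", # derived value (rule 2)
}

# Values that need no further breakdown (rule 3).
PRIMITIVES = {"Numeric", "Alphanumeric", "Alphabetic"}


def referenced_names(definition):
    """Collect element names used in a definition, ignoring ** comments **."""
    without_comments = re.sub(r"\*\*.*?\*\*", "", definition)
    return set(re.findall(r"[A-Za-z][A-Za-z_]*", without_comments))


def undefined_elements(dd):
    """Names used in some definition that have no DD entry of their own."""
    missing = set()
    for definition in dd.values():
        missing |= referenced_names(definition) - PRIMITIVES - set(dd)
    return sorted(missing)
```

Running `undefined_elements(DD)` on this fragment reports `Item_Description` and `Item_Unit_Price`, the two elements that still need entries before the decomposition reaches elementary data elements.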

Figure 5.12 Sample invoice form.

SQL Data Types

Relational database products define data elements using a construct called structured query language (SQL). SQL was originally created by IBM as a standard programming language to query databases. SQL quickly became the de facto standard in the computer industry, and while the generic data types used above are useful in understanding the pure definition of data elements, database analysts use SQL data types to define data elements. The principal SQL data types are as follows:

Alphanumeric or Character Data Elements

These elements can be defined in two ways: (1) as a fixed-length string; or (2) as a variable-length string. The word “string” means that there is a succession of alphanumeric values that are concatenated to form a value. For example, the string “LANGER” is really equivalent to six iterations of different alphanumeric characters that are connected, or concatenated, together. A fixed-length alphanumeric data type is denoted by CHAR(n), where n is the size, or qualifier, of the element. Fixed length means that the size of the data element must always be n. This correlates to the generic definition of n { alphanumeric } n, which states that the lower and upper limits of the iteration are the same. For example, if the data element INVOICE was defined as a fixed-length string of seven characters, its SQL definition would be CHAR(7).

Variable alphanumeric fields, on the other hand, relate to strings that can vary in size within a predefined string limit. This means that the size of the string can fall anywhere between one character and its maximum size. The SQL data type definition of a variable-length field is VARCHAR2 (n),7 where n represents the maximum length of the string. If the data element “LAST_NAME” was defined as having up to 35 characters, its SQL data definition would be VARCHAR2(35). This SQL data definition is equivalent to 1{alphanumeric}35.
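The correspondence between the two character types and the generic iteration limits can be sketched in a few lines. The functions below are hypothetical helpers that model only the definitions given here; they do not reproduce how any particular database product stores or pads character data.

```python
def generic_bounds(sql_type):
    """Map CHAR(n) or VARCHAR2(n) to (lower, upper) iteration limits."""
    name, _, rest = sql_type.partition("(")
    n = int(rest.rstrip(")"))
    kind = name.strip().upper()
    if kind == "CHAR":
        return (n, n)        # n{alphanumeric}n: fixed length
    if kind == "VARCHAR2":
        return (1, n)        # 1{alphanumeric}n: variable length
    raise ValueError(f"unsupported type: {sql_type}")


def fits(value, sql_type):
    """True when the length of value falls within the type's limits."""
    low, high = generic_bounds(sql_type)
    return low <= len(value) <= high
```

Under these definitions the six-character string “LANGER” satisfies VARCHAR2(35) but not CHAR(7), since a fixed-length element must be exactly seven characters.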

Numbers or Numeric Data Elements

These elements can be defined in various ways. Let’s first look at the representation of a whole number in SQL. Whole numbers are known mathematically as integers. SQL supports integers using the INTEGER(n) format, where n is the number of digits in the whole number. However, as is so often the case with SQL definitions, there is an alternative way of specifying an integer using the NUMBER clause. Thus NUMBER(n) will also store a whole number value up to n positions in length. Because the value may be shorter than n positions, the equivalent representation using the generic format is 1{number}n.

Real numbers, or those that contain decimal positions, cannot be defined using the INTEGER clause but can be defined using NUMBER. For example, a number that contains up to 5 whole digits and 2 decimal places would be defined as NUMBER(7,2). Note that the first value represents the total size of the field, that is, 5 whole digits plus 2 decimal positions, which equals 7 positions in total. The second value, the “2” after the comma, represents the number of places from the total digits that are assumed to be decimal positions. The word assumption is important here in that it means that an actual decimal point (.) is not stored in the data type. An alternative notation is NUMERIC. NUMERIC, as opposed to NUMBER, can be used only to define real numbers. However, it uses the same format as the NUMBER datatype to define a real number: NUMERIC(7,2). Depicting real numbers using the generic format is possible but tricky. The generic specification would be (1{number}5) + 2{number}2. Note that the first part of the definition, the whole number portion, is defined within optional brackets. This is done to allow for the situation where the number has only a decimal value, such as “.25.”
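The arithmetic behind a definition such as NUMBER(7,2) can be checked with a short function. This is a sketch under stated assumptions: `fits_number` is a hypothetical helper, it expects finite values written as strings, and it rejects values that would need rounding, which may be stricter than a given SQL product.

```python
from decimal import Decimal


def fits_number(value, precision, scale):
    """True if value fits NUMBER(precision, scale) without rounding."""
    _sign, digits, exponent = Decimal(value).as_tuple()
    decimals = max(0, -exponent)             # digits after the decimal point
    whole = max(0, len(digits) + exponent)   # digits before the decimal point
    return decimals <= scale and whole <= precision - scale
```

With a precision of 7 and a scale of 2, at most 5 positions remain for the whole portion, so “12345.67” and “.25” fit while “123456.7” (six whole digits) and “12.345” (three decimal places) do not.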

Special Data Types

SQL offers special notation that makes it easy to depict specialized data definitions, like date and time. Below are just some examples of the common special notation used:

DATE: denotes a valid date by assigning a 4-digit year. This kind of notation is compliant with year-2000 standards. The validity of the value is handled internally by SQL.

TIME: denotes a valid time using the format hh/mm/ssss, where hh is two digits of the hour; mm is two digits of the minute, and ssss are four digits of the second. This format can vary by SQL product.

TIMESTAMP: denotes the concatenation of the Date and the Time SQL clauses. The combination of the two values provides a unique specification of the exact time, day, and year that something has occurred.

FLOAT: denotes the storage of a value as a floating point number. The format is usually FLOAT (n), where n represents the size in digits.

The data element list below shows the definitions from Figure 5.12 in both the generic and SQL formats:

Process Specifications

Many analysts define a process specification as everything else about the process not already included in the other modeling tools. Indeed, it must contain the remaining information that normally consists of business rules and application logic. DeMarco suggested that every functional primitive DFD point to a “Minispec” which would contain that process’s application logic.8 We will follow this rule and expand on the importance of writing good application logic. There are, of course, different styles, and few textbooks explain the importance to the analyst of understanding how these need to be developed and presented. Like other modeling tools, each process specification style has its good, bad and ugly. In Chapter 3, we briefly described reasons for developing good specifications and the challenges that can confront the analyst. In a later chapter, the art of designing and writing both business and technical specifications will be discussed in detail; here we are concerned simply with the acceptable formats that may be used for this purpose.

Pseudocode

The most detailed and regimented process specification is pseudocode or structured English. Its format requires the analysts to have a solid understanding of how to write algorithms. The format is very “COBOL-like” and was initially designed as a way of writing functional COBOL programming specifications. The rules governing pseudocode are as follows:

• use the Do While with an Enddo to show iteration;

• use If-Then-Else to show conditions and ensure each If has an End-If;

• be specific about initializing variables and other detail processing requirements.

Pseudocode is designed to give the analyst tremendous control over the design of the code. Take the following example:

There is a requirement to calculate a 5 % bonus for employees who work on the 1st shift and a 10 % bonus for workers on the 2nd or 3rd shift. Management is interested in a report listing the number of employees who receive a 10 % bonus. The process also produces the bonus checks.

The pseudocode would be

The above algorithm gives the analyst great control over how the program should be designed. For example, note that the pseudocode requires that the programmer have an error condition should a situation occur where a record does not contain a 1st, 2nd or 3rd shift employee. This might occur should there be a new shift which was not communicated to the information systems department. Many programmers might have omitted the last “If” check as follows:

The above algorithm simply assumes that if the employee is not on the 1st shift then they must be either a 2nd or 3rd shift employee. Without this being specified by the analyst, the programmer may have omitted this critical logic which could have resulted in a 4th shift worker receiving a 10 % bonus! As mentioned earlier, each style of process specification has its advantages and disadvantages, in other words, the good, the bad, and the ugly.
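The difference between the two versions of the bonus logic can be made concrete in Python. The sketch below is an illustrative rendering, not a pseudocode listing in the format described above. The requirement does not say what the bonus is a percentage of, so a `salary` field is assumed; the record fields `name`, `shift` and `salary` and both function names are likewise hypothetical.

```python
def compute_bonuses(employees):
    """Explicit version: every shift is tested and unknown shifts are errors."""
    checks = []
    ten_percent_count = 0          # initialize the report counter explicitly
    errors = []
    for emp in employees:          # plays the role of Do While ... Enddo
        if emp["shift"] == 1:
            percent = 5
        elif emp["shift"] in (2, 3):
            percent = 10
            ten_percent_count += 1
        else:
            errors.append(emp["name"])   # the error condition the analyst required
            continue
        checks.append((emp["name"], emp["salary"] * percent / 100))
    return checks, ten_percent_count, errors


def compute_bonuses_no_check(employees):
    """Shortcut version: anything that is not 1st shift is paid 10 %."""
    checks = []
    ten_percent_count = 0
    for emp in employees:
        if emp["shift"] == 1:
            percent = 5
        else:                       # silently includes a 4th shift
            percent = 10
            ten_percent_count += 1
        checks.append((emp["name"], emp["salary"] * percent / 100))
    return checks, ten_percent_count


staff = [
    {"name": "A", "shift": 1, "salary": 1000},
    {"name": "B", "shift": 3, "salary": 1000},
    {"name": "C", "shift": 4, "salary": 1000},
]
```

Given the sample `staff` list, the explicit version reports employee C as an error, while the shortcut version issues C a 10 % check and counts C in the management report.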

The Good

The analyst who uses this approach has practically written the program, and thus the programmer will have very little to do with regard to figuring out the logic design.

The Bad

The algorithm is very detailed and could take a long time for the analyst to develop. Many professionals raise an interesting point: do we need analysts to be writing process specifications to this level of detail? In addition, many programmers may be insulted and feel that an analyst does not possess the skill set to design such logic.

The Ugly

The analyst spends the time, the programmers are not supportive, and the logic is incorrect. The result here will be the “I told you so” remarks from programmers, and hostilities may grow over time.

Case

Case9 is another method of communicating application logic. Although the technique does not require as much technical format as pseudocode, it still requires the analyst to provide a detailed structure to the algorithm. Using the same example as in the pseudocode discussion, we can see the differences in format:

The above format provides control, as it still allows the analyst to specify the need for error checking; however, the exact format and order of the logic are more in the hands of the programmer. Let’s now see the good, the bad, and the ugly of this approach.
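A rough Python analogue of the Case style is a lookup of conditions to results, with an explicit error for anything not listed. This is a sketch only; the names `BONUS_CASES` and `bonus_percent` are hypothetical, and the table simply restates the bonus requirement.

```python
# Each entry is one case: shift -> bonus percent. Order carries no meaning.
BONUS_CASES = {
    1: 5,     # Case 1st shift: bonus is 5 %
    2: 10,    # Case 2nd shift: bonus is 10 %
    3: 10,    # Case 3rd shift: bonus is 10 %
}


def bonus_percent(shift):
    """Return the bonus percent for a shift, or raise for an unlisted case."""
    try:
        return BONUS_CASES[shift]
    except KeyError:
        raise ValueError(f"unknown shift: {shift!r}") from None
```

Because the cases are listed rather than sequenced, how they are tested is left to the programmer, and a shift missing from the list surfaces only because the error case was specified.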

The Good

The analyst has provided a detailed description of the algorithm without having to know the format of logic in programming. Because of this advantage, Case takes less time than pseudocode.

The Bad

Although this may be difficult to imagine, the analyst may miss some of the possible conditions in the algorithm, such as forgetting a shift! This happens because the analyst is just listing conditions as opposed to writing a specification. Without formulating the logic as we did in pseudocode, the likelihood of forgetting or overlooking a condition check is increased.

The Ugly

Case logic can be designed without concern for the sequence of the logic, that is, the actual progression of the logic as opposed to just the possibilities. Thus the logic can become more confusing because it lacks actual progressive structure. As stated previously, the possibility of missing a condition is greater because the analyst is not actually following the progression of the testing of each condition. There is thus a higher risk of the specification being incomplete.

Pre-Post Conditions

Pre-post is based on the belief that analysts should not be responsible for the details of the logic, but rather for the overall highlights of what is needed. Therefore, the pre-post method lacks detail and expects that the programmers will provide the necessary details when developing the application software. The method has two components: Pre-Conditions and Post-Conditions. Pre-conditions represent things that are assumed true or that must exist for the algorithm to work. For example, a pre-condition might specify that the user must input the value of the variable X. On the other hand, the post-condition must define the required outputs as well as the relationships between calculated output values and their mathematical components. Suppose the algorithm calculated an output value called Total_Amount. The post-condition would state that Total_Amount is produced by multiplying Quantity times Price. Below is the pre-post equivalent of the Bonus algorithm:


As we can see, this specification does not show how the actual algorithm should be designed or written. It requires the programmer or development team to find these details and implement the appropriate logic to represent them. Therefore, the analyst has no real input into the way the application will be designed or the way it functions.
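The Total_Amount example above can be sketched as a contract that is checked around whatever logic the programmer chooses. This is only an illustration under stated assumptions: the names `with_contract`, `repeated_addition`, and `compute_total` are hypothetical, prices are held in whole cents to avoid rounding, and the non-negative pre-condition is an assumption added for the sketch rather than something the text specifies.

```python
from typing import Callable

TotalFn = Callable[[int, int], int]


def with_contract(impl: TotalFn) -> TotalFn:
    """Wrap any implementation with the pre- and post-conditions."""

    def checked(quantity: int, price_cents: int) -> int:
        # Pre-condition (assumed for this sketch): inputs exist and are non-negative.
        if quantity < 0 or price_cents < 0:
            raise ValueError("pre-condition failed: Quantity and Price must be non-negative")

        total_amount = impl(quantity, price_cents)

        # Post-condition from the text: Total_Amount is Quantity times Price.
        if total_amount != quantity * price_cents:
            raise AssertionError("post-condition failed: Total_Amount != Quantity * Price")
        return total_amount

    return checked


def repeated_addition(quantity: int, price_cents: int) -> int:
    # One of many possible designs; the specification does not dictate it.
    total = 0
    for _ in range(quantity):
        total += price_cents
    return total


compute_total = with_contract(repeated_addition)
```

The contract says nothing about how `repeated_addition` works, which mirrors the point of the method: the analyst states what must hold before and after, and the design of the logic stays with the programmer.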

The Good

The analyst need not have technical knowledge to write an algorithm and need not spend an inordinate amount of time to develop what is deemed a programming responsibility. Therefore, less technically oriented analysts can be involved in specification development.

The Bad

There is no control over the design of the logic, and thus the potential for misunderstandings and errors is much greater. The analyst and the project are much more dependent on the talent of the development staff.

The Ugly

Perhaps we misunderstand the specification. Since the format of pre–post conditions is less specific, there is more room for ambiguity.

Matrix

A matrix or table approach shows the application logic in tabular form. Each row reflects a result of a condition, with each column representing the components of the condition to be tested. The best way to explain a matrix specification is to show an example (see Figure 5.13).

Figure 5.13 Sample matrix specification.

Although this is a simple example that uses the same algorithm as the other specification styles, it does show how a matrix can describe the requirements of an application without the use of sentences and pseudocode.
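To see how a matrix can map onto the table structures of a programming language, consider the following sketch. It does not reproduce Figure 5.13; it assumes the shift percentages listed in Mini-Project #1 at the end of this chapter, and the names `BONUS_MATRIX` and `bonus` are hypothetical.

```python
# Each entry pairs the condition tested (the shift) with its result
# (the bonus percentage). Values assumed from Mini-Project #1.
BONUS_MATRIX = {
    1: 5,   # 1st shift: 5 % bonus
    2: 10,  # 2nd shift: 10 % bonus
    3: 10,  # 3rd shift: 10 % bonus
}


def bonus(shift: int, annual_gross_pay: int) -> float:
    """Look up the shift in the matrix and apply the percentage."""
    if shift not in BONUS_MATRIX:
        # The matrix lists only three shifts; anything else is an error.
        raise ValueError(f"unknown shift: {shift}")
    return annual_gross_pay * BONUS_MATRIX[shift] / 100
```

For instance, `bonus(1, 40000)` returns `2000.0`. Note that the table covers only the condition-to-result mapping; reading employee records and producing checks would still need another specification style.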

The Good

The analyst can use a matrix to show complex conditions in a tabular format. Many programmers prefer the tabular format because it is organized, easy to read, and often easy to maintain. Very often the matrix resembles the array and table formats used by many programming languages.

The Bad

It is difficult, if not impossible, to show a complete specification in matrices. The example in Figure 5.13 supports this, in that the remaining logic of the bonus application is not shown. Therefore, the analyst must incorporate one of the other specification styles to complete the specification.

The Ugly

Matrices are used to describe complex condition levels, where there are many “If” conditions to be tested. These complex conditions often require much more detailed analysis than shown in a matrix. The problem occurs when the analyst, feeling the matrix may suffice, does not provide enough detail. The result: the programmer may misunderstand conditions during development.

Conclusion

The same question must be asked again: what is a good specification? We will continue to explore this question. In this chapter we have examined the logic alternatives. Which logic method is best? It depends! We have seen from the examples that each method has its advantages and shortcomings. The best approach is to be able to use them all and to select the most appropriate one for the task at hand. To do this effectively means clearly recognizing both where each style provides a benefit for the part of the system you are working with, and who will be doing the development work. The table in Figure 5.14 attempts to put the advantages and shortcomings into perspective.

Figure 5.14 Process specification comparisons.

State Transition Diagrams

State transition diagrams (STD) were designed to model events that are time-dependent in behavior. Another definition of the STD is that it models the application alternatives for event-driven activities. An event-driven activity is any activity that depends directly on the behavior of a pre-condition that makes that event either possible or attractive. Before going any further, let’s use an example to explain this definition:

Mary leaves her office to go home for the day. Mary lives 20 minutes’ walking distance from the office. When departing from the office building, she realizes that it is raining very hard. Typically, Mary would walk home; however, due to the rain, she needs to make a decision at that moment about how she will go home. Therefore, an event has occurred during the application of Mary walking home which may change her decision and thus change the execution of this application.

As shown above, we have defined an event that, depending on the conditions during its execution, may have different results. To complete the example, we must examine and define Mary’s alternatives for getting home.

The matrix in Figure 5.15 shows us the alternative activities that Mary can choose. Two of them require a certain amount of money. All of the alternatives have positive and negative potentials that cannot be determined until executed; therefore, the decision to go with an alternative may depend on a calculation of probability. We sometimes call this a calculated risk or our “gut” feeling. Is it not true that Mary will not know whether she will be able to find a taxi until she tries? However, if Mary sees a lot of traffic in the street and not many taxis, she may determine that it is not such a good idea to take a taxi.

Figure 5.15 Mary’s event alternatives.

Much of the duplication of the scenario above falls into the study of Artificial Intelligence (AI) software. Therefore, AI modeling may require the use of STDs. The word “state” in STD means that an event must be in a given pre-condition before we can consider moving to another condition. In our example, Mary is in a state called “Going Home From the Office.” Walking, taking a train or taking a taxi are alternative ways of getting home. Once Mary is home she has entered into a new state called “Home.” We can now derive that walking, taking a train or taking a taxi are conditions that can effect a change in state, in this example leaving the office and getting home. Figure 5.16 shows the STD for going home.

Figure 5.16 State transition diagram for going home.

This STD simply reflects that two states exist and that there is a condition that causes the application to move to the “Home” state. When using STDs, the analyst should:

1. identify all states that exist in the system (in other words, all possible permutations of events must be defined),

2. ensure that there is at least one condition to enter every state,

3. ensure that there is at least one condition to leave every state.
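The second and third checks in the list above lend themselves to a mechanical test. The sketch below is one possible way to do it, not a standard tool: the function `check_std` and the door states used in the example are hypothetical, and a transition from a state back to itself is deliberately not counted as entering or leaving that state.

```python
from typing import Iterable

# (current state, condition that causes the change, new state)
Transition = tuple[str, str, str]


def check_std(states: Iterable[str], transitions: Iterable[Transition]) -> dict[str, list[str]]:
    """Report states that break the entry/exit rules for an STD."""
    state_list = list(states)
    moves = [(cur, new) for cur, _cond, new in transitions if cur != new]

    entered = {new for _cur, new in moves}
    left = {cur for cur, _new in moves}

    return {
        # States used in a transition but never identified by the analyst.
        "undeclared": sorted((entered | left) - set(state_list)),
        # Rule 2: every state needs at least one condition to enter it.
        "no_entry": [s for s in state_list if s not in entered],
        # Rule 3: every state needs at least one condition to leave it.
        "no_exit": [s for s in state_list if s not in left],
    }


# Hypothetical example: a door that can be locked but never unlocked.
door_report = check_std(
    ["Open", "Closed", "Locked"],
    [
        ("Open", "door is pushed shut", "Closed"),
        ("Closed", "handle is turned", "Open"),
        ("Closed", "key is turned", "Locked"),
    ],
)
```

Here `door_report["no_exit"]` is `["Locked"]`, which would prompt the analyst to ask the user what condition unlocks the door.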

The reasons for using STD differ greatly from those for a DFD. A DFD should be used when modeling finite, sequential processes that have a definitive beginning and end. We saw this situation in the previous examples in this chapter. The STD, on the other hand, is needed when the system effectively never ends, that is, when it simply moves from one state to another. Therefore, the question is never whether we are at the beginning or end of the process, but rather the current state of the system. Another example, one similar to that used by Yourdon in Modern Structured Analysis, is the Bank Teller state diagram shown in Figure 5.17.

Although you may assume that “Enter Valid Card” is the start state, an experienced analyst would know better. At any time, the automated teller is in a particular state of operation (unless it has abnormally terminated). The “Enter Valid Card” state is the “sleep” state. The teller will remain in this mode until someone creates the condition to cause a change, which is actually to input a valid card. Notice that every state can be reached and every state can be left. This rule establishes perhaps the most significant difference between the DFD and STD. Therefore, a DFD should be used for finite processes, and an STD should be used when there is ongoing processing, moving from one status to another.

Figure 5.17 Bank Teller state transition diagram.

Let us now show a more complex example which emphasizes how the STD can uncover conditions that are not obvious or were never experienced by the user. Take the matrix of states for a thermostat shown in Figure 5.18.

Figure 5.18 Thermostat states.

Figure 5.19 depicts the STD for the above states and conditions.

Figure 5.19 State transition diagram based on the user-defined states.

Upon review, we notice that there are really two systems and that they do not interface with each other. Why? Suppose, for example, that the temperature went above 70 and that the system therefore went into the Furnace Off state, but that the temperature nevertheless continued to increase unexpectedly. Users expect that whenever the Furnace Off state is reached, it must be winter. In the event of a winter heat wave, everyone would get very hot. We have therefore discovered that we must have a condition to put the air on even if a heat wave had never occurred before in winter. The same situation exists for the Air Off where we expect the temperature to rise instead of falling. We will thus add two new conditions (see Figure 5.20).

| Current State | Condition to cause a change in State | New State |
| --- | --- | --- |
| Furnace Off | Temperature exceeds 75 degrees | Air On |
| Air Off | Temperature drops below 65 degrees | Furnace On |

Figure 5.20 Additional possible states of the thermostat.

The STD is then modified to show how the Furnace and Air subsystems interface (see Figure 5.21).

Figure 5.21 Modified state transition diagram based on modified events.
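The discovery that the Furnace and Air subsystems do not interface can also be made by a simple reachability test over the transition table. The following is a rough sketch only. Figure 5.18 is not reproduced here, so the user-defined transitions are assumptions: only the "exceeds 70 degrees" condition comes from the text, and the other three conditions are described generically rather than with invented thresholds. The two added transitions are taken from Figure 5.20. The function name `reachable` is hypothetical.

```python
from collections import deque

# User-defined transitions (assumed stand-in for Figure 5.18).
USER_DEFINED = [
    ("Furnace On", "temperature exceeds 70 degrees", "Furnace Off"),
    ("Furnace Off", "temperature drops below the heating set point (assumed)", "Furnace On"),
    ("Air On", "temperature drops below the cooling set point (assumed)", "Air Off"),
    ("Air Off", "temperature rises above the cooling set point (assumed)", "Air On"),
]

# Conditions added after review (Figure 5.20).
ADDED = [
    ("Furnace Off", "temperature exceeds 75 degrees", "Air On"),
    ("Air Off", "temperature drops below 65 degrees", "Furnace On"),
]


def reachable(start, transitions):
    """Return every state that can be reached from `start` (breadth-first)."""
    seen = {start}
    queue = deque([start])
    while queue:
        state = queue.popleft()
        for current, _condition, new in transitions:
            if current == state and new not in seen:
                seen.add(new)
                queue.append(new)
    return seen


before = reachable("Furnace On", USER_DEFINED)
after = reachable("Furnace On", USER_DEFINED + ADDED)
```

Under these assumptions, `before` contains only the two Furnace states, which is the "two systems" problem; `after` contains all four states, showing that the added conditions connect the subsystems.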

Using STDs to discover missing processes (that would otherwise be omitted or added later) is very important. This advantage is why STDs are considered very effective in Artificial Intelligence and Object-Oriented analysis, where the analyst is constantly modeling event-driven, never-ending processes. We will see further application of STDs in Chapter 11: Object-Oriented Techniques.

Entity Relational Diagrams

The model that depicts the relationships among the stored data is known as an entity relational diagram (ERD). The ERD is the most widely used of all modeling tools and is really the only method to reflect the system’s stored data.

An entity in database design is an object of interest about which data can be collected. In a retail database application, customers, products, and suppliers might be entities. An entity can subsume a number of attributes: product attributes might be color, size, and price; customer attributes might include name, address, and credit rating.10

The first step to understanding an entity is to establish its roots. Since DFDs have been used to model the processes, their data stores (which may represent data files) will act as the initial entities prior to implementing logic data modeling. Logic data modeling and its interface with the DFD will be discussed in Chapter 6, thus the scope of this section will be limited to the attributes and functions of the ERD itself.

It is believed, for purposes of data modeling, that an entity can be simply defined as a logical file which will reflect the data elements or fields that are components of each occurrence or record in the entity. The ERD, therefore, shows how multiple entities will interact with each other. We call this interaction the “relationship.” In most instances, a relationship between two or more entities can exist only if they have at least one common data element among them. Sometimes we must force a relationship by placing a common data element between entities, just so they can communicate with each other. An ERD typically shows each entity and its connections as shown in Figure 5.22:

Figure 5.22 Entity relational diagram showing the relationship between the Orders and Customers entities.

The above example shows two entities: Orders and Customers. They have a relationship because both entities contain a common data element called Customer#. Customer# is depicted as “Key Data” in the Customers entity because it must be unique for every occurrence of a customer record. It is notated as “PK1” because it is defined as a Primary Key, meaning that Customer# uniquely identifies a particular customer. In Orders, we see that Customer# is a primary key but it is concatenated with Order#. By concatenation we mean that the two elements are combined to form a primary key. Nevertheless, Customer# still has its separate identity. The line that joins the entities is known as the relationship identifier. It is modeled using the “Crow’s Foot” method, meaning that the many relationship is shown as a crow’s foot, depicted in Figure 5.22 going to the Order entity. The double vertical lines near the Customer entity signify “One and only One.” What does this really mean? The proper relationship is stated:

“One and only one customer can have one to many orders.”

It means that customers must have placed at least one order, but can also have placed more than one. “One and only One” really indicates that when a Customer is found in the Order entity, the Customer must also exist in the Customer entity. In addition, although a Customer may exist multiple times in the Order entity, it can exist in the Customer master entity only once. This relationship is also known as the association between entities and effectively identifies the common data elements and the constraints associated with their relationship. This kind of relationship between entities can be difficult to grasp, especially without benefit of a practical application. Chapter 6 will provide a step-by-step approach to developing an ERD using the process of logical data modeling.
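A practical way to get a feel for this relationship is to express it as two tables and let a database enforce the constraints. The sketch below uses Python's built-in `sqlite3` module with an in-memory database. The column names `customer_no`, `order_no`, and `name`, the sample rows, and the helper `try_insert` are all assumptions made for illustration; they are not taken from Figure 5.22.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# SQLite enforces foreign keys only when this is switched on.
conn.execute("PRAGMA foreign_keys = ON")

conn.executescript("""
CREATE TABLE customers (
    customer_no INTEGER PRIMARY KEY,      -- Customer# is unique per customer
    name        TEXT NOT NULL
);
CREATE TABLE orders (
    customer_no INTEGER NOT NULL REFERENCES customers(customer_no),
    order_no    INTEGER NOT NULL,
    PRIMARY KEY (customer_no, order_no)   -- concatenated primary key
);
""")

conn.execute("INSERT INTO customers VALUES (1, 'Sample Customer')")
conn.execute("INSERT INTO orders VALUES (1, 100)")
conn.execute("INSERT INTO orders VALUES (1, 101)")  # same customer, second order


def try_insert(sql: str) -> bool:
    """Return True if the row was accepted, False if a constraint rejected it."""
    try:
        conn.execute(sql)
        return True
    except sqlite3.IntegrityError:
        return False


# An order for a customer that is not in the Customers entity is rejected.
orphan_accepted = try_insert("INSERT INTO orders VALUES (2, 200)")
# A second occurrence of the same Customer# in Customers is rejected.
duplicate_accepted = try_insert("INSERT INTO customers VALUES (1, 'Duplicate')")
```

Both `orphan_accepted` and `duplicate_accepted` come back `False`. The sketch covers the "one and only one" side of the relationship; the rule that a customer must have at least one order is not enforced by a foreign key alone and would need additional logic.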

Problems and Exercises

1. What is a functional primitive?

2. What are the advantages and disadvantages of a data flow diagram? What is meant by “leveling up”?

3. What is a PFD? Describe its relationship to a DFD.

4. What is the purpose of the data dictionary?

5. Define each of the following data dictionary notation symbols: =, +, [], /, (), { }, ∗∗

6. What is an elementary data element? How does it compare with a functional primitive?

7. Define the functions of a process specification. How does the process specification interface with the DFD and the DD?

8. Compare the advantages and disadvantages of each of the following process specification formats:

• Pseudocode

• Case

• Pre–Post Condition

• Matrix

9. What is an event-driven system? How does the Program Manager in Windows applications represent the behavior of an event-driven system?

10. When using an STD, what three basic things must be defined?

11. What is an Entity? How does it relate to a Data Store of a DFD?

12. Explain the concept of an Entity Relational Diagram.

13. What establishes a relationship between two entities?

14. Define the term “association.”

Mini-Project #1

An application is required that will calculate employee bonuses as follows:

1. There are three (3) working shifts. The bonuses are based on shift:

• 1st Shift: 5 % bonus

• 2nd Shift: 10 % bonus

• 3rd Shift: 10 % bonus

2. The bonus is calculated by multiplying the percentage by the annual gross pay of the employee.

3. The employee records are resident on the employee master file, which resides on a hard disk.

4. Bonus checks are produced for all employees.

5. There is a report produced that lists the number of employees who received a 10 % bonus.

Assignment

Draw the PFD.

Mini-Project #2

You have been asked to automate the Accounts Payable process. During your interviews with users you identify four major events as follows:

I. Purchase Order Flow

1. The Marketing Department sends a purchase order (P.O.) form for books to the Accounts Payable System (APS).

2. APS assigns a P.O. # and sends the P.O.-White copy to the Vendor and files the P.O.-Pink copy in a file cabinet in P.O. # sequence.

II. Invoice Receipt

1. A vendor sends an invoice for payment for books purchased by APS.

2. APS sends invoice to Marketing Department for authorization.

3. Marketing either returns the invoice to APS approved or sends it back to the vendor if not authorized.

4. If the invoice is returned to APS, it is matched up against the original P.O.-Pink. The P.O. and vendor invoice are then combined into a packet and prepared for the voucher process.

III. Voucher Initiation

1. APS receives the packet for vouchering. It begins this process by assigning a voucher number.

2. The Chief Accountant must approve vouchers > $5,000.

3. APS prepares another packet from the approved vouchers. This packet includes the P.O.-Pink, authorized invoice and approved voucher.

IV. Check Preparation

1. Typist receives the approved voucher packet and retrieves a numbered blank check to pay the vendor.

2. Typist types a two-part check (blue, green) using data from the approved voucher and enters the invoice number on the check stub.

3. APS files the approved packet with the Check-green in the permanent paid file.

4. The check is either picked up or mailed directly to the vendor.

Assignment

1. Provide the DFDs for the four events. Each event should be shown as a single DFD on a separate piece of paper.

2. Level each event to its functional primitives.

3. Develop the Process Specifications for each functional primitive DFD.

4. Create a PFD.

Mini-Project #3

Based on the mini-project from Chapter 3, the following information was obtained from Mr. Smith during the actual interview:

1. The hiring department forwards a copy of a new hire’s employment letter.

2. The copy of the hire letter is manually placed in a pending file, which has a date folder for every day of the month. Therefore, Mr. Smith’s department puts the letter in the folder for the actual day the new employee is supposed to report for work.

3. When the employee shows up, he/she must have the original hire letter.

4. The original and copy are matched. If there is no letter in the file, the hiring department is contacted for a copy.

5. The new employee is given a “New Employee Application” form. The form has two sides. The first side requires information about the individual; the second side requests insurance-related information.

6. The employee is given an employee ID card and is sent to work.

7. The application form and letter are filed in the employee master cabinet.

8. A copy of side two of the application form is forwarded to the insurance company. The copy of the form is filed in an insurance pending file, using the same concept for follow-up as the new-hire pending file system.

9. The insurance company sends a confirmation of coverage to the department. At that time, the pending file copy is destroyed.

10. Based on the employee level, insurance applications are followed up with a call to the insurance company.

Assignment

Based on the above facts, create the DFDs that depict the system. Level the DFDs until they are at a functional primitive level.

Mini-Project #4

The following is the information obtained during an interview with the ABC Agency:

People or potential renters call the ABC Agency and ask them if apartments are available for rent. All callers have their information recorded and stored in an information folder. The agency examines the file and determines whether their database has any potential matches on the properties requested. People also call the agency to inform them that they have an apartment for rent. Fifteen days before rent is due, the agency sends invoices to renters. Sixty days before the lease is up, the agency sends a notice for renewal to the renter.

Assignment

1. Draw the DFDs.

2. Level DFDs to functional primitive.

3. Provide process specifications for each functional primitive DFD. Use at least three different process specification “styles.”
