Security


Introduction

Analysts should be involved in the security of database and network systems. Security responsibility covers a number of areas. The primary involvement is in application security, which includes access security, data security, and functional screen security. Access security regulates who is authorized to use an application. Data security covers transaction data and stored data across the Web system, and how to validate and secure them. Functional screen security determines which features and functions of an application are made available to which users. In addition, the analyst must participate in network design decisions and in decisions about which hardware and operating system conventions should be implemented to help protect the systems from vulnerabilities in browsers, servers, protocols, and firewalls.

Obviously, Web systems need to provide security architectures similar to those of any network system. But Web systems are designed somewhat differently from regular Internet networks. Specifically, Web systems must verify who is ordering goods and paying for them, and they have an overall need to maintain confidentiality. Finally, the availability and accountability that Web systems need in order to generate revenue and deliver services to customers and consumers go well beyond the general information services offered by traditional public Internet systems.

Figure 14.1 reflects the dramatic growth in Internet usage over the 10-year period that began in the early 1990s. One result of this growth is that users are downloading and executing programs from within their own Web browsers. In many cases, users are not even aware that they are receiving programs from another Web server.

Since the frequency of downloading software has risen, analysts need to ensure that applications operating over the Internet are secure and not vulnerable to problems associated with the automatic installation of active content programs. Furthermore, analysts need to participate in deciding whether the Web system will provide active content to its users. This decision matters because user systems, especially external ones, may have safeguards against downloading active content onto their networks. Thus, Web applications that rely on downloaded animation and other configuration software may not be compatible across all user environments, which is certainly a problem for consumer-based Web systems.

Examples of Web Security Needs

This section presents some examples to demonstrate the crucial importance of verification, confidentiality, accountability, and availability in application security. Suppose a company is engaged in the process of selling goods. In this scenario, a consumer will come to the Web site and examine the goods being offered, eventually deciding to purchase a product offering. The consumer will order the product by submitting a credit card number. Below are the security requirements for the applications.

• Confidentiality: The application must ensure that the credit card number is kept confidential.

• Verification and Accountability: The system will need to ensure that the consumer is charged for the correct goods and prices. Furthermore, the Web system must validate that the correct goods are shipped within the time frame requested on the order (if one is provided).

A second example of Web security entails a company providing information on books and periodicals. The information is shared with customers who subscribe to the service.

• Confidentiality: In this example there is no credit card, but customer information must be kept confidential because customers need to sign on to the system.

• Verification and Accountability: The system needs to ensure that the correct information is returned to the customer based on the query for data. The system must also provide authentication and identification of the customer who is attempting to use the system.

Availability

Availability is at the heart of security issues for Web systems. Plain and simple: if the site is not available, there is no business. Web systems that are not available erode consumer and customer confidence, which eventually hurts business performance. While a failure in any of the other security components mentioned above could certainly affect user confidence, none has a greater impact than a Web site that is not working. Indeed, a number of books and studies suggest that user loyalty does not last long in the cyber community, especially when Web sites are not available (Reid-Smith, 2000). Therefore, availability of the Web system is treated here as a component of security responsibility. The first step in ensuring availability is to understand exactly what expectations the user community has for the site’s availability. Recall that the user community consists of three types of users: internal users, customers, and consumers.

The first user to discuss is the consumer, generally the user over whom we have the least control and of whom we have the least knowledge. There are two issues of availability that must be addressed with consumers:

1. Service: Analysts need to know how often consumers expect the service to be available. It is easy to assume “all the time,” but this may not be practical for many sites. For example, banks often have certain hours during which on-line banking is not available because the file systems are being updated. In such a case, it is the users’ expectations that should receive the analyst’s attention. It matters little what the organization considers a fair downtime in service; analysts must respond to the requirements of the user base. Moreover, keeping the Web site available is only one part of the problem: all of the other components involved in processing an order must also be available.

2. Fulfillment: In the minds of customers, availability is also linked to order fulfillment. Failure to have product available can quickly erode consumer and client confidence. For example, the industry has already seen the demise of some on-line companies that failed to fulfill orders at various times, especially Christmas. Once a company earns a reputation for not fulfilling orders, that reputation can be difficult to change.

The second user to discuss is the customer. The model for customers, or business-to-business (B2B) users, is somewhat different from that for consumers. As stated previously, customers are more “controlled” users than consumers, in that they typically have a preexisting relationship with the business. Because of this relationship, availability requirements might be more stringent, but they are also more clearly defined. In this kind of situation, the marketing group or support organization of the Web company is likely to know the customer’s requirements rather than having to derive them from marketing trends. An example of a special B2B customer need occurs when a customer must order goods at different times throughout the day rather than at fixed intervals. This type of on-demand ordering supports “Just-in-Time,” a standard inventory model in the manufacturing industry. Should this type of availability be required, it is crucial that the Web site not fail at any time during operation, because order requests are unpredictable.

The third group is internal users, who also depend on the Web system. Because they are internal and work in a somewhat controlled environment, the importance of this group can be overlooked. However, internal users are an important constituency: they are the ones who process, or “fulfill,” the orders. Today, more than ever, internal users need remote access to provide the 24-hour operation that most external users demand. The access required by internal users, then, must mirror the access needed by the firm’s customers and consumers. Such access is usually provided through corporate Intranets that are accessible via the Web. In this case, protecting against outside interference is critical, especially since Intranet users have access to more sensitive data and programs than typical external users.

In the end, Web systems must satisfy the user’s comfort zone, a zone that becomes more demanding as competition over the Internet continues to stiffen. Furthermore, Web systems are governed by the concept of global time, meaning that the system is never out of operation and must take into account the multiple time zones in which it operates. This concern is especially important for peak-time processing planning. Peak-time processing is required during the time in which most activity occurs in a business or in a market. So, for example, if most manufacturers process their orders at 4:00 local time, a Web system needs to handle a 4:00 peak in every time zone it serves!
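To make the global-time point concrete, the sketch below converts a single local peak hour into UTC for several markets. It is a minimal illustration under stated assumptions: the market names and their fixed standard-time offsets are hypothetical, daylight saving time is ignored, and 4:00 is read as 4:00 p.m. local time (16:00).

```python
from datetime import datetime, timedelta, timezone

# Hypothetical markets and fixed UTC offsets in hours (daylight saving ignored).
MARKET_OFFSETS = {"New York": -5, "London": 0, "Tokyo": 9}


def utc_peak_hours(local_peak_hour: int, offsets: dict[str, int]) -> dict[str, int]:
    """Return the UTC hour at which each market's local peak occurs."""
    peaks = {}
    for market, offset in offsets.items():
        local_zone = timezone(timedelta(hours=offset))
        # Any date works here because the offsets are fixed.
        local_peak = datetime(2024, 1, 15, local_peak_hour, tzinfo=local_zone)
        peaks[market] = local_peak.astimezone(timezone.utc).hour
    return peaks


if __name__ == "__main__":
    peaks = utc_peak_hours(16, MARKET_OFFSETS)
    for market, hour in sorted(peaks.items(), key=lambda item: item[1]):
        print(f"{market}: local 16:00 peak arrives at {hour:02d}:00 UTC")
```

Under these assumptions, one server farm sees three separate 16:00 peaks (07:00, 16:00, and 21:00 UTC), and capacity planning has to cover each of them.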

All of these issues demonstrate the significance of Web availability. Because I define availability as a component of security, it falls within our analysis of how the analyst integrates security requirements during the engineering process. It is important to recognize the severity and cost of downtime. Maiwald (2001) measures the cost of Web downtime by taking the average number of transactions over a period of time and comparing it against the revenue generated by the average transaction. However, this method may not identify the total cost, because some customers may not even visit the Web site as a result of bad publicity from other users. Whatever the exact cost of system downtime, most professionals agree that it is very high and poses a serious risk to the future of the business.
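One way to read Maiwald’s measure is as simple arithmetic: lost revenue is roughly the average transaction rate multiplied by the length of the outage and by the revenue per average transaction. The sketch below assumes that reading; the function name and the figures in the usage example are hypothetical. As the text notes, the result is best treated as a lower bound, since it ignores customers driven away by bad publicity.

```python
def downtime_cost(avg_transactions_per_hour: float,
                  avg_revenue_per_transaction: float,
                  hours_down: float) -> float:
    """Estimate revenue lost during an outage (a lower bound on total cost)."""
    if min(avg_transactions_per_hour, avg_revenue_per_transaction, hours_down) < 0:
        raise ValueError("inputs must be non-negative")
    # Transactions that would have occurred while the site was down.
    lost_transactions = avg_transactions_per_hour * hours_down
    return lost_transactions * avg_revenue_per_transaction


if __name__ == "__main__":
    # Hypothetical site: 120 orders per hour, $45 average order, 3-hour outage.
    print(f"Estimated lost revenue: ${downtime_cost(120, 45.0, 3):,.2f}")
```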

Web Application Security

If Web systems are to be secure, the analyst must start by establishing a method of creating application security. Because Web systems are built under the auspices of object-oriented development, software applications are often referred to as software components. These software components can reside in a number of different places on the network and provide different services as follows:

• Web Client Software

• Data Transactions

• Web Server Software

Each of these major components and their associated security responsibilities will be discussed in greater detail in this chapter with the ultimate intent to provide analysts with an approach to generating effective security architecture requirements.

Web Client Software Security

Communications security for Web applications covers the security of information that is sent between the user’s computer (client) and the Web server. This information might include sensitive data such as credit card data, or confidential data that is sent in a file format. Most important, however, is the authentication of what is being sent and the ability of the applications to protect against malicious data that can hurt the system.

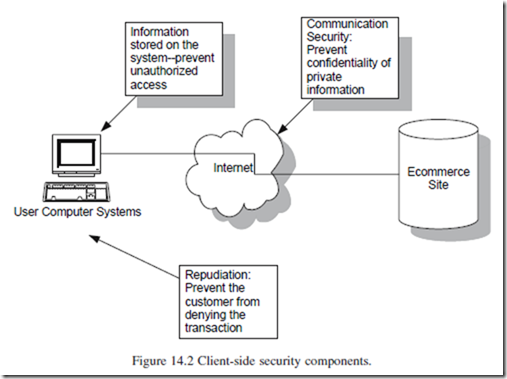

The advent of executable content applications that are embedded in Web pages has created many security risks. These executable components allow programs to be dynamically loaded and run on a local workstation or client computer. Executable content, sometimes called active content, can exist in many forms. Microsoft’s ActiveX controls and Java applets are examples of object component programs that can be downloaded from the Internet and executed from within a Web browser. There are also scripting languages, such as JavaScript and VBScript, whose scripts are often sent from other Web sites and run within the browser to add functionality dynamically during a session. Finally, many files that are traditionally considered data, such as images, can also be classified as executable components because they are processed by plug-ins, which can easily be integrated with a Web browser to give it more functionality. Figure 14.2 reflects the components of client-side security.

Web applications today also make use of “push” and “pull” technologies. Push technology minimizes the user’s effort to obtain data and applications by having a server automatically send them to the client. Pull technology is the opposite: it allows users to surf the Web from their client machine and retrieve the data or applications that they want to use.

The fact that the Web is open to interaction with the Internet provides both benefits and problems. The security problems with downloaded software fall into two areas: (1) authenticity of content and (2) virus security risks. Specifically, users of push and pull technology are authorizing these applications to be downloaded and written to the client’s local computer. This function lets content that may not have been authenticated (in terms of data credibility) onto the client and opens the door to violations of security and privacy.

Because Web sessions do not by themselves retain information about prior interactions, they need an “agent” that holds information from past interactions. This agent is called a “cookie.” Cookies are stored on the client computer and typically hold Web site usage information, such as whether the user has visited a site before and what activities the user performed while in the site. Unfortunately, cookies pose privacy and security problems for users. First, the collection of personal information might constitute a breach of personal privacy. Second, the residence of a cookie on the local drive can allow files to be read and written beyond its intended purpose. This opens the door for virus propagation.
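The following sketch shows how a cookie carries state across otherwise stateless requests, using Python’s standard `http.cookies` module. The handler, the `visits` cookie name, and the counting logic are hypothetical. The HttpOnly and Secure attributes are standard cookie attributes that limit exposure: HttpOnly keeps page scripts from reading the cookie, and Secure restricts it to encrypted connections. The handler also treats the cookie value as untrusted input, because it comes back from the client.

```python
from http.cookies import SimpleCookie


def handle_request(cookie_header: str) -> tuple[int, str]:
    """Hypothetical handler: count a user's visits with a cookie.

    HTTP keeps no memory between requests, so the count travels in the cookie.
    Returns the visit count and the Set-Cookie value to send back.
    """
    incoming = SimpleCookie()
    incoming.load(cookie_header)

    visits = 1
    if "visits" in incoming:
        try:
            # The client controls this value, so never trust it blindly.
            visits = int(incoming["visits"].value) + 1
        except ValueError:
            visits = 1  # tampered or corrupt value: start over

    outgoing = SimpleCookie()
    outgoing["visits"] = str(visits)
    outgoing["visits"]["path"] = "/"
    outgoing["visits"]["httponly"] = True  # not readable by page scripts
    outgoing["visits"]["secure"] = True    # only sent over encrypted connections
    return visits, outgoing["visits"].OutputString()


if __name__ == "__main__":
    count, set_cookie = handle_request("")  # first visit: no cookie yet
    print(count, set_cookie)
    count, _ = handle_request("visits=1")   # browser returns the cookie
    print(count)
```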

Authentication: A Way of Establishing Trust in Software Components

Authentication provides a means of dealing with illegal intrusion or untrustworthy software. When dealing with authentication, the analyst must focus on the type of middleware component architecture being used. For example, Microsoft uses a technology called “Authenticode,” which is designed to help prevent malicious code from executing inside a Windows application. This is accomplished by attaching a code that is created by the sending application and verified by the receiving application. This “code” is called a digital signature and represents an endorsement of the code for valid use. Authentication is thus achieved by validating the signature. Authenticode provides two checks on ActiveX controls:

1. Verification of who signs the code; and

2. Verification of whether the code has been altered since it was signed.

These verifications are necessary because ActiveX controls can run on a workstation like any other program. Thus, a malicious ActiveX control could forge e-mail, monitor Web page usage, and write damaging data or programs to the user’s local machine. Authenticode essentially ensures that both sides of the transaction (the downloading of the ActiveX control from the sender to the receiver) are in sync. Before code can be signed, the software publisher must apply for and receive a Software Publisher Certificate (SPC) from a Certificate Authority (CA) such as VeriSign, which issues valid digital signatures. Essentially, the role of the CA is to bridge the trust gap between the end user and the software publisher.

Once the software publisher has created a valid signature, the end user can verify the identity of the publisher and the integrity of the component when it is downloaded. The browser does this by detaching the signature from the software and performing the necessary checks using Microsoft’s Authenticode technology, in a two-step process. First, it verifies that the SPC has been signed by a valid CA. Second, it uses the publisher’s public key, provided in the SPC, to verify that the code was signed by the software publisher and therefore was sent by them. Figure 14.3 shows a sample SPC.
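The two-step check can be sketched in a few lines. This is a conceptual model under loudly stated assumptions, not Authenticode’s actual file format or Windows API: it uses toy, unpadded “textbook” RSA with fixed Mersenne primes (insecure, for illustration only), and the certificate type and all function names are hypothetical. Step 1 checks that a trusted CA signed the publisher’s certificate; step 2 checks the code’s signature with the publisher’s public key from that certificate, which fails both when someone else signed the code and when the code was altered after signing.

```python
import hashlib
from dataclasses import dataclass

# Toy "textbook RSA" built from known Mersenne primes. Illustration only:
# unpadded RSA like this is NOT secure, and it is not Authenticode's format.
E = 65537  # public exponent shared by every toy key


@dataclass(frozen=True)
class KeyPair:
    n: int  # public modulus
    d: int  # private exponent (kept secret by the owner)


def make_keypair(p: int, q: int) -> KeyPair:
    phi = (p - 1) * (q - 1)
    return KeyPair(n=p * q, d=pow(E, -1, phi))  # modular inverse (Python 3.8+)


def digest(data: bytes, n: int) -> int:
    # Hash the data, then reduce it into the range the key can sign.
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % n


def sign(data: bytes, key: KeyPair) -> int:
    return pow(digest(data, key.n), key.d, key.n)


def verify(data: bytes, signature: int, public_n: int) -> bool:
    return pow(signature, E, public_n) == digest(data, public_n)


@dataclass(frozen=True)
class PublisherCertificate:
    """Hypothetical stand-in for an SPC: a publisher's public key signed by a CA."""
    publisher: str
    public_n: int
    ca_signature: int


def cert_body(publisher: str, public_n: int) -> bytes:
    return f"{publisher}:{public_n}".encode()


def issue_certificate(publisher: str, key: KeyPair, ca_key: KeyPair) -> PublisherCertificate:
    return PublisherCertificate(publisher, key.n, sign(cert_body(publisher, key.n), ca_key))


def browser_accepts(code: bytes, code_sig: int,
                    cert: PublisherCertificate, trusted_ca_n: int) -> bool:
    # Step 1: was the certificate signed by a CA the browser trusts?
    if not verify(cert_body(cert.publisher, cert.public_n), cert.ca_signature, trusted_ca_n):
        return False
    # Step 2: was the code signed with this publisher's key, and is it unaltered?
    return verify(code, code_sig, cert.public_n)


if __name__ == "__main__":
    ca = make_keypair(2**61 - 1, 2**89 - 1)
    publisher = make_keypair(2**31 - 1, 2**107 - 1)
    cert = issue_certificate("Example Publisher", publisher, ca)
    control = b"example ActiveX control"
    sig = sign(control, publisher)
    print(browser_accepts(control, sig, cert, ca.n))          # signed and intact
    print(browser_accepts(control + b"!", sig, cert, ca.n))   # altered in transit
```

Note what the sketch does not check: a valid signature says who published the code and that it arrived unchanged, not that the code is safe to run.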

Authenticode also determines whether the active content was altered in any way during transit so that it also offers protection against any tinkering during the transport of the data from sender to receiver.

However, as with most software applications, analysts need to be aware of Authenticode’s shortcomings. For example, Authenticode cannot ensure that a signed ActiveX control is safe; the signature vouches only for who published the code, not for what the code does. That is, the process of security is based on a “trust” model. Therefore, a user who checks signatures may get a false sense of security that the code is safe. Furthermore, since ActiveX controls can communicate across distributed Web systems, they can be manipulated during transit, without an

Another default scenario is using the security options available in Windows. If active content is enabled, a dialog box is displayed each time an ActiveX control is downloaded, and the user chooses whether to authorize the control to be installed. Some developers call this option “making security decisions on the fly.” Unfortunately, this is a rather haphazard way of protecting client-side security. Users are a risky group to entrust with this kind of decision, and in many ways it unfairly makes the user, rather than the system, responsible.

Another important issue that the analyst must be aware of is the incompatibility between Microsoft’s ActiveX and Java, particularly in how they handle authentication. The essential security difference between the two is that ActiveX security is based wholly on trust in the code signer, whereas Java applet security is based on restricting the actual behavior of the applet itself. This difference further complicates the process of establishing a consistent security policy. For this reason, many analysts prefer Java: because it is truly multi-platform, it is simply easier to create standard security policies for it (remember that ActiveX is supported only on Windows-based systems). Furthermore, from a programmer’s perspective, Java offers true object-oriented programming, rather than object extensions added to a third-generation procedural language.

The security model used with Java applets is called the Java sandbox. The sandbox prevents “untrusted” Java applets from accessing sensitive system resources. It is important to note that the sandbox applies only to Web-based Java programs, or Java applets, as opposed to Java applications, which have unrestricted access to system resources. Analysts can therefore narrow the problem by focusing only on Java applets for Web development. The name “sandbox” comes from the idea of defining an area where the Java applet is allowed to “play” but from which it cannot escape. This means that the functionality of the Java applet can be restricted to certain behaviors, such as only reading, or only writing, information within a specific network environment. The Java sandbox is enforced by three technologies: the bytecode verifier, the applet class loader, and the security manager (McGraw & Felten, 1996). The three technologies must work together to restrict the privileges of any Java applet. Unfortunately, the sandbox design must be complete: a flaw in any one of these components can cause the entire sandbox to fail. Therefore, the analyst must be careful in the design and testing of the sandbox functions. Ultimately, the selection of Java and the standards created to support its implementation are a prime responsibility of the analyst.
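The sandbox idea, confining code to an area it may “play” in and to a set of permitted behaviors, can be illustrated with a small policy object. This is an analogy written in Python, not Java’s security manager API: the class name, the sandbox root path, and the action names are hypothetical. Every operation is checked against the allowed actions and against the sandbox boundary, so a path such as `../etc/passwd` cannot escape.

```python
import posixpath
from pathlib import PurePosixPath


class SandboxViolation(Exception):
    """Raised when sandboxed code tries to act outside its permissions."""


class ToySecurityManager:
    """Hypothetical policy object illustrating sandbox-style confinement."""

    def __init__(self, root: str, allowed_actions: frozenset[str]):
        self.root = PurePosixPath(posixpath.normpath(root))
        self.allowed_actions = allowed_actions

    def check(self, action: str, path: str) -> PurePosixPath:
        """Return the resolved path if permitted, else raise SandboxViolation."""
        if action not in self.allowed_actions:
            raise SandboxViolation(f"action '{action}' is not permitted")
        # Join and normalize so '../' tricks and absolute paths are resolved first.
        # This is a lexical check only; a real sandbox must also handle symlinks.
        target = PurePosixPath(posixpath.normpath(posixpath.join(str(self.root), path)))
        if target != self.root and self.root not in target.parents:
            raise SandboxViolation(f"'{path}' escapes the sandbox")
        return target


if __name__ == "__main__":
    # A read-only sandbox: code may read inside /sandbox and nothing else.
    manager = ToySecurityManager("/sandbox", frozenset({"read"}))
    print(manager.check("read", "data/report.txt"))
    for action, path in [("write", "data/report.txt"), ("read", "../etc/passwd")]:
        try:
            manager.check(action, path)
        except SandboxViolation as err:
            print("blocked:", err)
```

The design mirrors the text’s warning: the policy is only as strong as its weakest check, so removing either the action test or the boundary test would open the whole sandbox.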

Risks Associated with Push Technology and Active Channels

This section focuses on active content that can be embedded in applications without the awareness of users. Such content includes JavaScript, a scripting language (unrelated to Java despite the similar name) whose scripts are downloaded to and run on a client computer. This section also discusses the challenges of working with plug-ins, viewing graphic files, and executing e-mail attachments. The objective is not only to protect Web systems from outside intrusion, but also to determine what types of active content should be offered to users, both internal and external, as part of the features and functions designed into the Web application and the overall architecture of the system. Remember that security issues go both ways: one from the perspective of the Web system and the other from the user’s view. Analysts must always be aware of what checks other systems will apply against the active content that the Web system may wish to use.

JavaScript

JavaScript is a scripting language that can be distributed over the Web using the client/server paradigm. If JavaScript support is built into the browser, as it is in Microsoft’s Internet Explorer, the user’s browser will automatically download and execute JavaScript unless the user specifically turns the option off. JavaScript is typically used to enhance the appearance of the browser interface and Web page. JavaScript is also used to check data validity, especially data that is submitted through Web browser forms. What makes JavaScript so dangerous is that a hardware firewall cannot help once the script is downloaded for execution. Therefore, once the script is loaded, it can do damage both inside the Web system and to the client workstation. Obviously the safest course of action is to disable JavaScript in the browser, but that, in effect, stops its use completely. Thus, analysts need to know that using JavaScript to deliver applications to their clients may be problematic because the client’s machine may not accept it.
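Because a client may have JavaScript turned off, validation performed by a script in the browser may never run. A common safeguard, sketched below as an assumption rather than something the text prescribes, is for the server to repeat the same checks on every submitted form. The example re-validates a credit card field with the standard Luhn checksum; the function names and the 12 to 19 digit length range are illustrative choices.

```python
def luhn_valid(digits: str) -> bool:
    """Standard Luhn checksum over a string of ASCII digits."""
    total = 0
    for position, char in enumerate(reversed(digits)):
        value = int(char)
        if position % 2 == 1:  # double every second digit from the right
            value *= 2
            if value > 9:
                value -= 9
        total += value
    return total % 10 == 0


def validate_card_field(raw: str) -> str:
    """Server-side re-check of a form field that client-side script may have skipped."""
    cleaned = raw.replace(" ", "").replace("-", "")
    if not (cleaned.isascii() and cleaned.isdigit()):
        raise ValueError("card number may contain only digits, spaces, or hyphens")
    if not 12 <= len(cleaned) <= 19:  # illustrative length range
        raise ValueError("card number has an unexpected length")
    if not luhn_valid(cleaned):
        raise ValueError("card number fails the checksum")
    return cleaned


if __name__ == "__main__":
    print(validate_card_field("4111 1111 1111 1111"))  # a widely used test number
```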

Plug-Ins and Graphic Files

Plug-ins are special applications that are integrated into a specific Web browser. The purpose of the plug-in is to allow the browser to support a certain type of program that will be downloaded to the client workstation. Typically, plug-ins are first downloaded and then installed so that the application can embed itself into the Web browser. This usually means that once the plug-in is installed, it does not need to be reinstalled each time the browser is executed. An example of a plug-in is RealPlayer, a tool that supports streaming video over the Internet (see Figure 14.4). Very often, if a user wants to view a streaming video file over the Web, the application will automatically scan the workstation to determine whether the plug-in software exists. If it does not, the user will be given the option to download it during the session so that the streaming file can be viewed. Regardless of whether the plug-in is pre-installed or dynamically provided, the user opens the door to potential viruses that can be embedded in the code.

One of the better-known plug-in vulnerabilities involves Shockwave, a program that allows movies to be downloaded and played over the Internet. A flaw allows a Shockwave file to get into the Netscape Mailer and thus invade e-mail accounts. There are no known ways of preventing this, other than fixing the damage once it occurs. Preventing the download of files that could contain malicious programs within a plug-in depends heavily on the type of plug-in being used. Shockwave carries greater risk when the data comes through a more open environment where hackers can modify files or create phony ones. On the other hand, Shockwave could be used to show a film about the company and be well protected from intruders, depending on how the server is secured (for example, by a firewall). As with most security issues, the idea is to make an illegal break-in so difficult that it appears impossible.

Attachments

There are a number of cases where Web systems allow attachments to be sent to and received from users. These are often e-mail attachments, because e-mail is the easiest and cheapest way to transmit messages and files. Opening e-mail attachments becomes more dangerous when the attachments are Web pages rather than plain files. The main risk, however, lies not in delivering attachments to users but in accepting attachments that users send into the Web system. The degree of risk exposure correlates with the relationship to the user (this of course assumes that the user is intentionally sending a malicious attachment). If the user is known, as with an internal user or a customer, the exposure in allowing uploaded attachments is lower than it is for a consumer. While users may be allowed to send responses, it is probably not wise to allow them to upload anything beyond that. Furthermore, analysts can build in scanning of uploaded software to detect viruses; however, as we have seen, some embedded programs cannot be easily detected. While attachments may not seem as serious as the other components examined in this section, analysts designing elaborate e-mail interfaces should be aware of the potential exposure to malicious acts.
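The idea that upload exposure should track the relationship with the user can be expressed as a simple policy table. The sketch below is hypothetical throughout: the user categories follow the text’s internal, customer, and consumer split, but the permitted file types are invented for illustration, and the virus-scan result is assumed to come from a separate scanner. Consumers may send responses but not files, and a clean scan is required yet never treated as the only safeguard.

```python
import os
from enum import Enum


class UserType(Enum):
    INTERNAL = "internal"
    CUSTOMER = "customer"
    CONSUMER = "consumer"


# Hypothetical policy: the better we know the user, the more file types we accept.
ALLOWED_EXTENSIONS = {
    UserType.INTERNAL: frozenset({".txt", ".csv", ".pdf", ".docx"}),
    UserType.CUSTOMER: frozenset({".txt", ".csv", ".pdf"}),
    UserType.CONSUMER: frozenset(),  # responses only, no file uploads
}


def may_upload(user: UserType, filename: str, scan_passed: bool) -> bool:
    """Decide whether to accept an uploaded attachment."""
    # Only the final extension counts, so "invoice.pdf.exe" is treated as ".exe".
    extension = os.path.splitext(filename)[1].lower()
    if extension not in ALLOWED_EXTENSIONS[user]:
        return False
    # A clean scan is necessary but not sufficient: embedded programs may evade it,
    # which is why the extension allowlist above is applied first.
    return scan_passed


if __name__ == "__main__":
    print(may_upload(UserType.CUSTOMER, "Report.PDF", scan_passed=True))
    print(may_upload(UserType.CONSUMER, "note.txt", scan_passed=True))
```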

This section covered security on the client side of the Web system. The security issues relate both to the owner of the system, which must protect the Web system from receiving malicious software from clients, and to the spreading of problem software to users, the more relevant problem for Web systems. In summary, the most important issues for the analyst to understand are:

1. How internal users might obtain malicious software within the network domain.

2. How users, through the Web software, might inadvertently send back something harmful.

3. The challenge of offering application technologies to users whose standard configurations might not accept them (for example, browsers with JavaScript turned off).

4. What dangers there are in providing active content, since the system might become infected and thus become a carrier of viruses and other damaging software to its most valued entity, its external users.

Securing Data Transactions

This section focuses on security issues related to protecting the data transaction, perhaps the most important component of the Web system. Data transactions are protected by protocols that provide services such as encryption and authentication. Furthermore, some protocols operate only on certain types of transactions, such as payments. Securing data transactions is complex; this section describes the types of protocols available and how analysts can choose the data security protocol that best fits the needs of the application they are designing.

Much of the data transaction concern in Web systems is focused on the need to secure payment transactions. There are two basic types of data transaction systems used for Web technology: stored account and stored value. Stored account systems are designed in the same way that electronic payment systems handle debit and credit card transactions. Stored account payment systems designed for the Web really represent a new way of accessing banks to move funds electronically over the Internet. On the other hand, stored value systems use what are known as bearer certificates, which emulate hard cash transactions. These systems use smart cards for transferring cash between parties. Stored value systems replace cash with an electronic equivalent called “e-cash.” This involves transferring a unit of money between two parties without authorization from a bank. While both systems can support Web commerce, the decision about which one to use is often based on which is cheapest (in cost per transaction). Figure 14.5 shows a comparison of transaction costs for electronic systems.

However, stored value systems are much riskier because no intermediate bank is involved in securing the transaction. As a result of this lack of security, stored value systems are usually used for smaller-valued transactions, such as purchasing candy from a vending machine.

Before designing payment transaction systems, the analyst must recognize which protocols can be used to secure Web-based transactions. The most popular protocol is Secure Sockets Layer (SSL). SSL does not actually handle the payment but rather provides confidentiality during the Web session and authentication of Web servers and clients. Secure HTTP is another, less widely used protocol that wraps the transaction in a secure digital envelope. Both protocols use cryptography to provide confidentiality, data integrity, and non-repudiation (no denial) of the transactions.

Because the Internet is inherently an insecure channel, important data transactions that require protection should be sent via secured channels. A secured channel is one that is not open to others while a data message is traveling from the originator, or source, to the destination, or sink. Unfortunately, the Internet was not designed to provide this level of security. Data is sent across the Internet using a transfer protocol called TCP/IP. TCP stands for Transmission Control Protocol, which runs on top of IP, the Internet Protocol. SSL secures a TCP/IP transaction by providing end-to-end encryption of the data sent between the client and the server. Encryption is the process of scrambling data with a sophisticated algorithm and a key so that the data cannot be interpreted even if it is copied during transmission. The originator of the message encrypts it, and the receiver decrypts it using the same algorithm and a matching key. Thus SSL is added to the protocol stack with TCP/IP to secure messages against theft while the transaction is in transit. The protocol stack with TCP/IP and SSL is shown in Figure 14.6.
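The layering of SSL over TCP/IP is visible in code. SSL has since been superseded by its successor, TLS, which is what Python’s standard `ssl` module negotiates today. In the sketch below, a plain TCP connection is opened first and then wrapped in an encrypted channel that also authenticates the server’s certificate and host name. The host name in the usage line is a placeholder, and the choice of TLS 1.2 as the minimum version is an assumption.

```python
import socket
import ssl


def make_client_context() -> ssl.SSLContext:
    """Client settings: verify the server certificate and host name."""
    context = ssl.create_default_context()  # loads the system's trusted CAs
    context.minimum_version = ssl.TLSVersion.TLSv1_2  # refuse older protocol versions
    return context


def fetch_response_head(host: str, port: int = 443) -> bytes:
    """Send a HEAD request over TLS and return the start of the response."""
    context = make_client_context()
    # Layer 1: an ordinary TCP/IP connection.
    with socket.create_connection((host, port), timeout=10) as tcp_sock:
        # Layer 2: encryption and server authentication on top of TCP.
        with context.wrap_socket(tcp_sock, server_hostname=host) as tls_sock:
            request = f"HEAD / HTTP/1.1\r\nHost: {host}\r\nConnection: close\r\n\r\n"
            tls_sock.sendall(request.encode("ascii"))
            return tls_sock.recv(4096)


if __name__ == "__main__":
    print(fetch_response_head("www.example.com")[:60])
```

The application code above the `wrap_socket` call reads and writes exactly as it would on a plain socket; the encryption sits in its own layer of the stack, which is the point Figure 14.6 makes.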

The analyst needs to be aware that some form of transaction security must be implemented as part of Web systems design. Unlike many other system components, security software for data transaction systems is usually provided by third-party software vendors. Currently, there are three major options for transaction systems: First Virtual, CyberCash’s Secure Internet Payment System, and the industry standard Secure Electronic Transaction (SET).

First Virtual is based on an exchange of e-mail messages and the honesty of the customer; the firm uses no cryptography. Essentially, a consumer must first get an account with First Virtual, usually secured by a credit card. The transaction is thus brokered through First Virtual, an outsourced third party performing a service for the Web system. The most attractive feature of a service like this is its simplicity: the user does not need to purchase any special software. First Virtual has some built-in software to monitor abuses of the system.

CyberCash’s Secure Internet Payment System does use cryptography to protect the transaction data. CyberCash provides a protocol similar to SSL to secure purchases over the Web. The system contains a back-end credit card architecture that protects the confidentiality and authenticity of the user, who in many cases is a merchant. Figure 14.7 outlines the six steps in securing an Internet payment. The steps for this type of transaction security are described below:

1. A consumer selects a product and an invoice is sent from the merchant to the consumer.

2. The consumer accepts the invoice, and the browser sends the payment information encrypted with a key supplied by the third-party vendor.

3. The merchant processes the transaction and forwards it to the vendor for decryption.

4. The payment is decrypted by the vendor (such as CyberCash), which verifies the information through authentication procedures. The vendor then brokers the payment with the bank.

5. The bank requests authorization from the credit card company.

6. Payment IDs are forwarded to the merchant.
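The six steps can be traced as a message flow. The sketch below is a simulation under explicit assumptions: there is no real cryptography, a `Sealed` object merely stands in for data encrypted so that only the named recipient can read it, and the party names, the approval rule, and the payment ID format are hypothetical. It is not CyberCash’s actual protocol; it only shows who can read what at each step.

```python
import secrets
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Sealed:
    """Stand-in for data encrypted for one recipient (no real cryptography)."""
    recipient: str
    payload: dict = field(repr=False)

    def open(self, reader: str) -> dict:
        if reader != self.recipient:
            raise PermissionError(f"{reader} cannot read data sealed for {self.recipient}")
        return self.payload


def run_payment(card_number: str, amount_cents: int, credit_limit_cents: int) -> list[str]:
    """Trace the six payment steps and record who could read the card data."""
    log = []
    # Step 1: the merchant sends an invoice to the consumer.
    invoice = {"merchant": "example-shop", "amount_cents": amount_cents}
    log.append("1. merchant -> consumer: invoice")

    # Step 2: the browser seals the payment for the vendor, not the merchant.
    payment = Sealed("vendor", {"card": card_number, "amount_cents": invoice["amount_cents"]})
    log.append("2. consumer -> merchant: payment sealed for the vendor")

    # Step 3: the merchant forwards a payment it cannot read.
    try:
        payment.open("merchant")
        merchant_can_read = True
    except PermissionError:
        merchant_can_read = False
    log.append(f"3. merchant -> vendor: payment forwarded (merchant can read card: {merchant_can_read})")

    # Step 4: the vendor opens and checks the payment, then brokers it with the bank.
    details = payment.open("vendor")
    log.append("4. vendor -> bank: payment authenticated and brokered")

    # Step 5: hypothetical card-company rule: approve if within the credit limit.
    approved = details["amount_cents"] <= credit_limit_cents
    log.append(f"5. bank -> card company: authorization {'granted' if approved else 'declined'}")

    # Step 6: on approval, a payment ID reaches the merchant.
    if approved:
        log.append(f"6. vendor -> merchant: payment ID {secrets.token_hex(4)}")
    return log


if __name__ == "__main__":
    for line in run_payment("4111111111111111", 2_500, 10_000):
        print(line)
```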

It is important to note that transactions between the bank and the credit card company are processed over private secured networks, which further strengthens the protection of the payment. In summary, this method provides an excellent way to secure purchases of goods through Web transactions that rely on multiple parties (merchants, banks, and credit card companies). It does this by ensuring that all parties get paid and that confidentiality is maintained.

Secure Electronic Transaction (SET) is an open standard that has received support from major credit card associations such as VISA and MasterCard. SET was also developed in cooperation with GTE, IBM, Microsoft, Netscape, and VeriSign. SET is not as complex as the CyberCash model; it can be implemented over the Web or via e-mail. SET does not attempt to secure the order information, only the payment information, which is protected using cryptographic algorithms. Figure 14.8 specifies the 10 steps in a SET process.

The main steps are summarized below:

1. Consumer sends the request for transaction to merchant.

2. Merchant acknowledges the request.

3. The consumer digitally signs a message, and the credit card number is encrypted.

4. The merchant sends the purchase amount to be approved along with the credit card number.

5. The transaction is approved or rejected.

6. The merchant confirms the purchase with the consumer.

Stored Value Payment Systems

Stored value systems replace currency with its digital equivalent and thus can preserve the privacy of traditional cash-based transactions while taking advantage of the power of electronic systems. The major downside of the stored account model is that it requires identification of the buyer and seller, which in some instances neither party wants. By contrast, e-cash systems store value in an electronic device called a hardware token. The token holds a predetermined and authorized amount of money: the consumer’s related bank account is automatically debited, and the token is incremented by the same value. This process allows the payment transaction to occur closer to real time than in the stored account model. Although the stored value method supports online transactions, it can also be used offline if the consumer prefers. Offline transactions are more private because they are less traceable.

The stored value model is not as secure as the stored account system. The main reason is that there is less of an audit trail for each transaction, which is to be expected since there are fewer checks and balances in the process. Furthermore, the transactions themselves are less secure because a token can be tampered with, changing its value in the system. Electronic cash can be represented in a hardware device or as an encrypted digital coin. In a hardware device, the prepaid amount is resident and is decreased every time there is an authorized payment. Hardware tokens are usually valid for a limited time period because they are more difficult to update. Yet another method is the “smart card,” which, like a hardware token, holds a prepaid amount of money on a physical device, in this case a card. Figure 14.9 provides an example of an e-cash transaction using the stored value method.
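The load and spend cycle of a hardware token can be sketched in a few lines. This is a hypothetical Python model (`StoredValueToken` and the dictionary used as a bank account are invented names), using integer cents to avoid rounding errors. Loading debits the bank account and increments the token by the same value; each authorized payment decreases the resident amount; an expiry date models the limited validity period. Note that nothing here records who was paid, which reflects the thin audit trail described above.

```python
from datetime import date


class StoredValueToken:
    """Hypothetical prepaid hardware token or smart card."""

    def __init__(self, expires: date):
        self.balance_cents = 0
        self.expires = expires

    def load(self, account: dict, cents: int) -> None:
        # Debit the consumer's bank account and credit the token by the same value.
        if cents <= 0 or account["balance_cents"] < cents:
            raise ValueError("invalid or unfunded load amount")
        account["balance_cents"] -= cents
        self.balance_cents += cents

    def pay(self, cents: int, today: date) -> None:
        # Each authorized payment decreases the prepaid amount held on the token.
        if today > self.expires:
            raise ValueError("token has expired")
        if cents <= 0 or cents > self.balance_cents:
            raise ValueError("insufficient stored value")
        self.balance_cents -= cents
```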

Securing data transactions, especially those used for payment systems, is typically handled through third-party vendor software and systems. Web systems, therefore, will need to interface with them, unless the analyst deems it more advantageous to design and support an internal proprietary system. Overall, it is usually easier to integrate a Web system with a third-party payment security system than to create a proprietary one. If the stored value method is used, analysts must consider how the hardware devices, cards, and software tokens will be issued and updated. These requirements will need to be part of the technical specifications provided to the development team. Securing data transactions may also require interviewing vendors to determine which of their products and services best fit the needs of the Web system being built.

Securing the Web Server

The security issues governing the Web server are multifaceted. The security components include the Web server software, the databases, and the applications that reside on them. They also include a middle layer that provides communication between the tiered servers.

Web Server

The Web server software can include applications, mail, FTP (File Transfer Protocol), news, and remote login, as well as the operating system itself. Since most Web systems are server-centric, meaning that most of the application software resides on the server and is downloaded to the workstation for each execution, the security of the server side is integral to the ongoing dependability of the system. As can be expected, the complexity of both the server software and its configurations can be a major source of vulnerability.

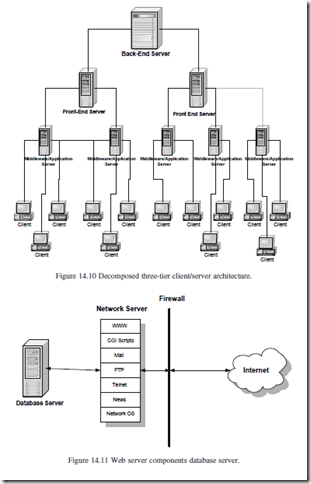

The Web server can be decomposed into three components: the front-end server, the back-end server, and middleware interface software. In many ways the Web server architecture has its own client/server infrastructure, in which higher-end servers provide information to lower-end servers. The middle tier acts as a communication buffer between the back-end and front-end servers, similar to the structure of a three-tier architecture. Figure 14.10 shows a more decomposed view of the three-tier client/server design.

From a security perspective, malicious events reaching any one of these components could cause problems with integrity and confidentiality. The best way to deal with security problems is first to understand where the system is most vulnerable; once you know where the exposure is, an “anti-exposure” solution can be designed. This section examines each decomposed component and establishes the type of security needed to maximize its dependability and confidentiality. The analyst must be a driver of the process because of the amount of application and data integrity software that must be integrated into the design of the overall system. Figure 14.11 depicts the decomposed server components.

Database security involves protection against unauthorized access to sensitive data. This data could be confidential client data, such as account information and payment history. Database security usually correlates with hardware security because most break-ins occur through penetration of the server hardware. However, because SQL provides the method of accessing databases from external applications, there must be security against its misuse. The problem with SQL is that once someone can access the data, it is difficult to stop the spread of any malicious acts; access is hard to limit once it has been granted. While it is easy to limit access to data through custom applications that require sign-on, it is much more difficult to protect against direct SQL queries. Yet taking away direct query altogether is unattractive, since it is such a powerful tool for analyzing data without the need for senior programmers. Furthermore, databases can give an unauthorized user access to data without going through the underlying operating system. Therefore, to secure databases properly, both the operating system logs and the database logs should be examined regularly. For the analyst to determine how to maximize database server security, he/she must know the following information:

• The location of the database server

• How the database server communicates with the Web server

• How the database server is protected from internal users

Location of the Database Server

The physical location of the system can provide some added protection against illegal access to the data. The best location for the database server is in the organization’s central network system. Since the database should never be accessed directly by external users, there is no reason for the database server to be connected directly to the Internet. It is important to clarify this point. I am not saying that the database should be inaccessible from the Internet; rather, external access should be obtainable only through authorized application programs. Thus, direct SQL query should not be allowed. In some instances, because of the sensitivity of the data, the server is placed in an even more secure location and protected further by an internal firewall.
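The rule that external access goes only through authorized application programs can be illustrated with a small Python sketch using the standard `sqlite3` module. It is a hypothetical example: the `accounts` table, the whitelist name `AUTHORIZED_QUERIES`, and the helper functions are invented. External requests can name only predefined, parameterized queries; free-form SQL is never executed.

```python
import sqlite3

# Hypothetical whitelist: the only database operations external users can trigger.
AUTHORIZED_QUERIES = {
    "account_balance": "SELECT balance FROM accounts WHERE account_id = ?",
}


def demo_connection() -> sqlite3.Connection:
    """Build a small in-memory database for illustration."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE accounts (account_id TEXT PRIMARY KEY, balance REAL)")
    conn.executemany("INSERT INTO accounts VALUES (?, ?)",
                     [("A100", 250.0), ("A200", 75.5)])
    return conn


def run_authorized_query(conn: sqlite3.Connection, name: str, *params):
    """Run a predefined, parameterized query; free-form SQL is refused."""
    sql = AUTHORIZED_QUERIES.get(name)
    if sql is None:
        raise PermissionError(f"{name!r} is not an authorized operation")
    # Parameters are bound, not spliced into the SQL text, so they cannot alter it.
    return conn.execute(sql, params).fetchall()
```

Because the parameter is bound rather than concatenated, an input such as `A100' OR '1'='1` is treated as a literal account ID and matches nothing.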

Communication with the Web Server

Inevitably, the database server must communicate with the application server so that transactions can be processed. In most well-secured environments, the database server initiates the connection, but in practice this sequence cannot always be followed. Eventually, then, the application server will need to initiate a connection to the database server using SQL. In these situations, the application server must store the user ID and password needed to gain access to the database. This ID and password will need to be embedded in a program or file on the system, a location the analyst must determine during the design of the system. Unfortunately, anything stored in a computer system can be discovered by an unauthorized intruder, especially one who is aware of the architecture of the system. A partial solution is to limit every password to only one part of the transaction: for example, one password might allow connection while another is required for retrieval. At least this would make it harder to obtain a complete sequence of codes. Furthermore, the codes could be kept in separate data files and matched using a special algorithm that calculates the matched key sequences needed to complete a database transaction. While this approach sounds convincing, it has drawbacks. First, distributing security levels creates the need for many IDs and passwords in the system, and users may find it difficult to remember them all. Second, distributing the codes will inevitably begin to affect performance. Third, matched files always risk having their indexed data fall out of sync, forcing major re-indexing, possibly during a peak production cycle.
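The idea of limiting each password to one part of the transaction can be sketched as follows. This is a hypothetical Python illustration, not a production credential store: the stage names, the `STAGE_CREDENTIALS` table, and the secrets are invented, and in a real system each credential would live in a different file or location. A read completes only when both the connection credential and the retrieval credential are valid, so discovering one alone is not enough.

```python
import hmac

# Hypothetical stage credentials; in practice each would be stored separately
# so that discovering one does not reveal the other.
STAGE_CREDENTIALS = {"connect": b"conn-secret", "retrieve": b"read-secret"}


def stage_ok(stage: str, presented: bytes) -> bool:
    expected = STAGE_CREDENTIALS.get(stage)
    # compare_digest avoids leaking information through comparison timing.
    return expected is not None and hmac.compare_digest(expected, presented)


def fetch_rows(connect_secret: bytes, retrieve_secret: bytes, run_query):
    """Complete a database read only when both stage credentials are valid."""
    if not stage_ok("connect", connect_secret):
        raise PermissionError("connection stage refused")
    if not stage_ok("retrieve", retrieve_secret):
        raise PermissionError("retrieval stage refused")
    return run_query()
```

The drawbacks listed above apply directly: every additional stage is one more secret to manage and one more check on each request.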

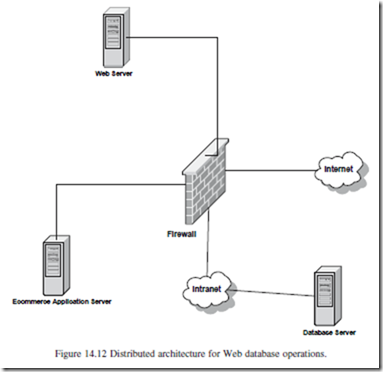

Another approach to reducing database exposure is to divide the functionalities between the database server and the application server. This can be accomplished by simply reducing the roles of each component, yet requiring all of them to exist for the successful completion of a database operation. This is shown in Figure 14.12.

Internal User Protection

While many systems focus on preventing illegal access by external users, they fall short of providing similar protection against internal users. The solution to this problem is rather simple: the same procedures should be followed for both internal and external users. Some databases are available only to internal employees, such as those that contain human resources benefit information. These databases should be separated from the central network, and separate IDs and passwords should be required. Furthermore, as many IT managers practice, passwords should be changed periodically. Finally, the organization must have procedures to ensure that new users are signed up properly and that terminated employees are removed from access authorization.
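The periodic-change and termination rules can be expressed as a simple access check. In this hypothetical Python sketch, the 90-day limit is an assumed policy value and the user record fields are invented; the same function would be applied to internal and external users alike.

```python
from datetime import date, timedelta

MAX_PASSWORD_AGE = timedelta(days=90)  # assumed policy value


def access_allowed(user: dict, today: date) -> bool:
    """Apply the same rules to internal users as to external ones."""
    terminated = user.get("terminated_on")
    if terminated is not None and terminated <= today:
        return False  # terminated employees are removed from access authorization
    # Passwords older than the policy limit must be changed before access resumes.
    return today - user["password_changed"] <= MAX_PASSWORD_AGE


def accounts_needing_action(users: dict, today: date) -> list:
    """List user IDs whose access should be suspended or reviewed."""
    return sorted(uid for uid, u in users.items() if not access_allowed(u, today))
```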

Application Server

The application server provides the entrance to the system for most users. Indeed, the applications provide not only the functionality of the system but also access to its data. The first component of application security is user authentication, a way to validate users and to determine the extent of functionality they are authorized to use in the system. Furthermore, sophisticated authentication systems can determine, within an application, which CRUD activities (Create transactions, Read existing data on a read-only basis, Update existing data, and Delete transactions) a user is authorized to perform. Further security can be attained within an application Web page, in which only certain functions are available depending on the ID and password entered by the user. Most companies use the following mechanisms to restrict access to Web applications:
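Page-level function security driven by CRUD rights can be sketched as follows. This is a hypothetical Python example assuming the sign-on lookup returns a string of CRUD letters for each user and application; `RIGHTS`, `PAGE_FUNCTIONS`, and the user names are invented. Only the functions whose required letter the user holds appear on the page.

```python
# Hypothetical CRUD rights per (user, application), as a sign-on lookup might return them.
RIGHTS = {("jsmith", "orders"): "CR", ("mlee", "orders"): "CRUD"}

# Page functions and the CRUD letter each one requires.
PAGE_FUNCTIONS = {
    "New order": "C",
    "View orders": "R",
    "Edit order": "U",
    "Cancel order": "D",
}


def authorized(user: str, app: str, op: str) -> bool:
    """True if the user holds the given CRUD right in the application."""
    return op in RIGHTS.get((user, app), "")


def visible_functions(user: str, app: str) -> list:
    """Functions to render on the Web page for this user."""
    return [label for label, op in PAGE_FUNCTIONS.items() if authorized(user, app, op)]
```

The server should repeat the `authorized` check when a function is invoked, since hiding a button does not by itself prevent a crafted request.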

• Client hostname and IP address security

• User and password authentication

• Digital certificates

Client Hostname and IP Address Security

This is one of the most basic forms of Web page security. The Web server restricts access to certain or all Web pages based on a specific client hostname or range of IP addresses. The process is usually straightforward because the server receives the client’s IP address with every request and can look up the corresponding hostname. Thus, the server can use this information to verify that the request has come from an authorized site. This type of security simply establishes a way of blocking access to a specific Web page or group of pages. The Web server can also use the Domain Name System (DNS) to check whether the client’s hostname actually agrees with its IP address. Figure 14.13 shows the process of hostname and IP address authentication.
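Both checks can be sketched with Python’s standard `ipaddress` and `socket` modules. The allowed range below is the 192.0.2.0/24 documentation network, chosen as a placeholder; a real site would list its own authorized ranges. The DNS agreement check asks whether the claimed hostname resolves back to the address the request came from. The resolver is passed in as a parameter (defaulting to `socket.gethostbyname_ex`) so the logic can be exercised without network access.

```python
import ipaddress
import socket

# Placeholder range (documentation network); replace with the authorized client ranges.
ALLOWED_NETWORKS = [ipaddress.ip_network("192.0.2.0/24")]


def ip_allowed(client_ip: str) -> bool:
    """True if the requesting address falls inside an authorized range."""
    addr = ipaddress.ip_address(client_ip)
    return any(addr in net for net in ALLOWED_NETWORKS)


def hostname_agrees_with_ip(hostname: str, client_ip: str,
                            resolve=socket.gethostbyname_ex) -> bool:
    """Forward-confirm: does DNS map the claimed hostname to this IP address?"""
    try:
        _, _, addresses = resolve(hostname)
    except OSError:  # socket.gaierror and socket.herror are OSError subclasses
        return False
    return client_ip in addresses
```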

User and Password Authentication

User authentication is essentially a process whereby an application verifies the identity of the requestor. This is accomplished using a user identification and a password, making it a two-tiered security authentication. To set up an ID and password system, the analyst must first design a database to hold the names, IDs, and passwords of its valid users. There also need to be additional fields that store information about the level of each user ID. This means that a coding system must be designed so that lookup tables can store codes identifying the specific capabilities of the requestor. The analyst must also design an application program for maintaining the user security databases, so that IDs can be added, deleted, or changed. In most database servers, the authentication file is designed using a flat-file architecture. Figure 14.14 provides the architecture flow of user authentication.
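A minimal sketch of such a user table and its maintenance functions follows, in Python. It assumes, beyond the text, that passwords are stored as salted PBKDF2 hashes rather than in readable form, so that a copied table does not reveal them. `USERS` stands in for the authentication database, and the level codes are hypothetical.

```python
import hashlib
import hmac
import secrets

USERS: dict = {}  # user_id -> record; stands in for the authentication table


def _hash(password: str, salt: bytes) -> bytes:
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, 100_000)


def add_user(user_id: str, name: str, password: str, level_code: str) -> None:
    salt = secrets.token_bytes(16)  # a fresh random salt per stored password
    USERS[user_id] = {"name": name, "salt": salt,
                      "hash": _hash(password, salt), "level": level_code}


def change_password(user_id: str, new_password: str) -> None:
    rec = USERS[user_id]
    add_user(user_id, rec["name"], new_password, rec["level"])


def delete_user(user_id: str) -> None:
    USERS.pop(user_id, None)


def authenticate(user_id: str, password: str):
    """Return the user's level code if the ID and password match, else None."""
    rec = USERS.get(user_id)
    if rec is None:
        return None
    if not hmac.compare_digest(rec["hash"], _hash(password, rec["salt"])):
        return None
    return rec["level"]
```

Returning the level code on success lets the calling application look up the capabilities that code grants.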

It is important to note that analysts can provide “nested” authentication tables within a Web system. This means that there are multiple tables, or multidimensional ones, that list all of the security levels at which the user is able to operate. In Unix, this type of security is implemented with a “user profile” table that holds all of the information in one file. This file is usually formatted as a matrix or two-dimensional table, in which each column refers to a specific enabling (or restricting) access capability. The user authentication design is geared toward easier maintenance of the user’s security levels. It also assumes that the security settings will be maintained by one unit rather than by many different units. For example, if a password is needed for a specific operation, it is handled by a central security operation rather than by a separate authentication individual and system in each department. Figure 14.15 shows a typical user profile security system.
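A user profile matrix kept as a flat file can be loaded and queried as sketched below. The file layout (one row per user, one Y/N column per capability) and the capability names are hypothetical; the sketch uses Python’s standard `csv` module. Because the whole matrix lives in one file, a central security unit can maintain every user’s levels in a single place.

```python
import csv
import io

# Hypothetical flat-file user profile matrix: one row per user, one column per capability.
PROFILE_FILE = """user_id,orders_read,orders_update,payroll_read,admin
jsmith,Y,Y,N,N
mlee,Y,N,Y,N
"""


def load_profiles(text: str) -> dict:
    """Parse the matrix into {user_id: {capability: enabled}}."""
    profiles = {}
    for row in csv.DictReader(io.StringIO(text)):
        user_id = row.pop("user_id")
        profiles[user_id] = {cap: flag.strip().upper() == "Y" for cap, flag in row.items()}
    return profiles


def can(profiles: dict, user_id: str, capability: str) -> bool:
    """Unknown users and unknown capabilities are denied by default."""
    return profiles.get(user_id, {}).get(capability, False)
```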

Digital Certificates

Most of the security measures surrounding applications have been based on access control. Relying on access control alone to secure applications has a major flaw: the data holding the ID and password can be seen over the Internet, meaning that it can be illegally copied in transit. A higher level of security can be attained by using a digital certificate, which requires that both parties be involved in authenticating the transaction. This was discussed earlier in the section on SSL. Essentially, with digital certificates the necessary identification is exchanged in an encrypted state, so it does not matter whether the transaction is seen or copied in transit. The receiver of the digital certificate has the decryption software and keys needed to unravel the coded message.
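Python’s standard `ssl` module can show what “both parties are involved” means in practice. In this sketch, the client context verifies the server’s certificate and hostname, and the server context is configured to demand a certificate from the client as well (mutual authentication). The certificate and key file parameters are hypothetical placeholders; a real server must load its own certificate chain before accepting connections.

```python
import ssl


def client_context() -> ssl.SSLContext:
    # Verifies the server's certificate chain and hostname against trusted CAs.
    return ssl.create_default_context(ssl.Purpose.SERVER_AUTH)


def server_context_requiring_client_certs(ca_file=None, cert_file=None, key_file=None):
    """Server-side context that refuses clients without a valid certificate."""
    ctx = ssl.create_default_context(ssl.Purpose.CLIENT_AUTH, cafile=ca_file)
    ctx.verify_mode = ssl.CERT_REQUIRED  # both parties must present certificates
    if cert_file:
        # Hypothetical paths: the server's own certificate and private key.
        ctx.load_cert_chain(cert_file, key_file)
    return ctx
```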

Summary

This section provided an overview of the methods of securing the Web server. The model is complex because each component has its own security issues, while still needing to operate together to strengthen effectiveness across the Web operation. This section also identified the different types of security software available and suggested where the analyst needed to be involved in the process of determining what third-party products were necessary and how authentication should be implemented.

Web Network Security

Most of this chapter has focused on the application side of security and on how the analyst needs to participate in designing a Web system that is reliable, performs well, and has a high degree of integrity and confidentiality. This part of the chapter discusses the network architecture in terms of its impact on security. It outlines the role the analyst and designer should play to ensure that the infrastructure is optimized to support the application design. Indeed, the system network should not be completed without the participation of software engineers.

The DMZ

The DMZ, or “demilitarized zone,” is commonly understood as a portion of the network that is not completely trusted. The job of analysts should be to reduce the number of DMZs in the system. DMZs often appear between network components and firewalls. They are constructed to separate systems accessed by external users from those used by internal users. Thus, the strategy is to partition the Web network so that security efforts focus on the DMZ, which is always used for external users, while internal users sit behind the firewall in a much more controlled environment. Figure 14.16 depicts the layout of the DMZ relative to the rest of the network.

Common network components that should be in the DMZ and receive different security attention are as follows:

• Mail: There should be both an internal and an external mail server. The external mail server receives inbound mail and forwards outbound mail. Both inbound and outbound mail are ultimately handled by the internal mail server, which actually processes the mail. The external mail server thus acts as a screening point for e-mail that might damage the central system.

• Web: The DMZ holds the publicly accessible Web sites. Many public Web servers offer places where external users can add content without risk of harming the central Web system. These types of servers can also host interactive activities such as chat rooms.

• Control Systems: These include external DNS servers, which must be accessible to queries from external users. Most DNS servers are replicated inside the firewall in the central network.

Firewalls

A firewall is a device that restricts access and validates transactions before they physically reach the central components of the Web system. Maiwald (2001) defines a firewall as “a network access control device that is designed to deny all traffic except that which is explicitly allowed” (p. 152). A firewall, then, is really a buffer system. For example, all database servers should be protected by a firewall that buffers them from unauthorized access and potential malicious acts. Firewalls should not be confused with routers, which are network devices that simply route traffic as fast as possible to a predefined destination.

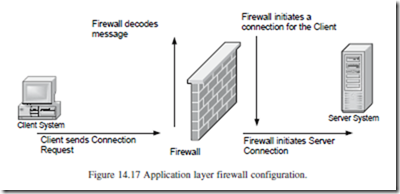

There are two general types of firewalls: application layer firewalls and packet filtering firewalls. Application layer firewalls, also called proxy firewalls, are software packages installed on top of the resident operating system (Windows 2000, UNIX) that protect the servers from problem transactions. They do so by examining all transactions and comparing them against their internal policy rules. Policy rules are enforced by proxies, each of which governs a single protocol; in other words, each protocol has its own proxy. In an application firewall design, all connections must terminate at the firewall, meaning that there are no direct connections to any back-end architecture. Figure 14.17 shows the application layer firewall connections.
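The one-proxy-per-protocol design can be sketched in Python. The policies below (allowed HTTP methods and paths, an SMTP size limit) are hypothetical examples, and requests are modeled as dictionaries rather than real network connections. The client’s request ends at the firewall; only if its protocol’s proxy approves does the firewall build a fresh request to the back end, and protocols without a proxy are rejected outright.

```python
def http_proxy(request: dict) -> bool:
    # Hypothetical HTTP policy: only GET/POST to the public storefront path.
    return (request.get("method") in {"GET", "POST"}
            and request.get("path", "").startswith("/shop/"))


def smtp_proxy(request: dict) -> bool:
    # Hypothetical SMTP policy: refuse messages larger than 10 MB.
    return request.get("size", 0) <= 10_000_000


PROXIES = {"http": http_proxy, "smtp": smtp_proxy}  # one proxy per protocol


def application_firewall(request: dict, forward_to_backend):
    """Terminate the client connection here; contact the back end only if allowed."""
    proxy = PROXIES.get(request.get("protocol"))
    if proxy is None or not proxy(request):
        return "rejected"
    # The back end only ever sees a new request built by the firewall.
    return forward_to_backend(dict(request))
```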

Packet filtering firewalls can also be software packages that run on top of operating systems. Unlike application layer firewalls, this type of firewall can connect to many interfaces or networks, which means that packets of data arrive from many different sources. Like the application layer firewall, the packet filter validates incoming transactions against policy rules. The major difference between the two is that connections through a packet filter do not terminate at the firewall as they do in the application layer design. Instead, transaction packets travel directly to the destination system: as each packet arrives at the firewall, it is examined and either rejected or passed through. This is something like a customs line, where those entering the country are either stopped or sent through into the country of arrival. Figure 14.18 illustrates the packet firewall method.
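A packet filter’s decision can be sketched as an ordered rule list with a default-deny fallback, matching Maiwald’s definition of denying all traffic except that which is explicitly allowed. This is a hypothetical Python model: the `Rule` fields and the example rules (blocking one documentation range, allowing HTTPS and SMTP) are invented. Each packet is checked against the rules in order; the first match decides, and anything unmatched is dropped.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rule:
    action: str              # "allow" or "deny"
    src: str                 # source network in CIDR form
    dst_port: Optional[int]  # None matches any destination port
    protocol: str            # "tcp" or "udp"


# Hypothetical policy: block one range outright, then allow HTTPS and SMTP.
RULES = [
    Rule("deny", "203.0.113.0/24", None, "tcp"),
    Rule("allow", "0.0.0.0/0", 443, "tcp"),
    Rule("allow", "0.0.0.0/0", 25, "tcp"),
]


def filter_packet(src_ip: str, dst_port: int, protocol: str, rules=RULES) -> str:
    """First matching rule wins; anything not explicitly allowed is denied."""
    addr = ipaddress.ip_address(src_ip)
    for rule in rules:
        if (rule.protocol == protocol
                and addr in ipaddress.ip_network(rule.src)
                and rule.dst_port in (None, dst_port)):
            return rule.action
    return "deny"
```

Rule order matters: placing the deny rule first ensures the blocked range cannot reach port 443 through the broader allow rule.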

Summary

While network hardware security is somewhat outside the responsibilities of the analyst, the knowledge of how the DMZ and firewall systems operate provides important information that the analyst must be aware of. Knowing what is inside and outside the firewall is crucial for both network designers and software architects. Indeed, knowing where to place the software and how best to secure it in a complex network environment are integrated decisions that require input from both parties.

Database Security and Change Control

Database security and change control relate to the database access capabilities granted to a particular user or group of users. Limited access is always a security or control feature designed either to protect database integrity or to limit what some users are allowed to do with data.

Levels of Access

Determining who has the ability to access a database is an important responsibility of the analyst/designer. Access at the database level is implemented through privileges, which are created using special commands. A privilege is defined as the right to execute a particular type of SQL (Structured Query Language) statement. In a database product like Oracle, a user can have two types of privileges: system and object. System privileges can be voluminous; Oracle alone has more than 157 system-level privileges. An object privilege relates to how a user can access a particular database table or view. For example, a user must have an object privilege to access objects in another user’s schema.

Object privileges are coded using commands like SELECT, INSERT, UPDATE, and DELETE; each of these commands can carry special rights limiting or granting a user access to the database. For example, a privilege may allow a user to select a database record for viewing without the right to delete, add, or modify a record. Such privilege rights need to be determined during analysis and conveyed to the development team.

The specifications for object privileges are no different from any other program requirement but are usually conveyed through a table matrix, as shown in Figure 14.19.
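Because the privilege matrix is a specification, it can be translated mechanically into GRANT statements for the development team. The Python sketch below is hypothetical: the grantee and table names are invented, and each generated string follows the standard `GRANT privilege_list ON object TO grantee` form that Oracle accepts. Names are taken from the analyst’s specification, not from user input, so no quoting is attempted.

```python
# Hypothetical privilege matrix: (grantee, table) -> object privileges granted.
PRIVILEGE_MATRIX = {
    ("order_clerk", "orders"): ["SELECT", "INSERT"],
    ("auditor", "orders"): ["SELECT"],
    ("order_manager", "orders"): ["SELECT", "INSERT", "UPDATE", "DELETE"],
}

OBJECT_PRIVILEGES = {"SELECT", "INSERT", "UPDATE", "DELETE"}


def grant_statements(matrix: dict) -> list:
    """Translate each matrix row into one GRANT statement."""
    statements = []
    for (grantee, table), privileges in matrix.items():
        unknown = set(privileges) - OBJECT_PRIVILEGES
        if unknown:
            raise ValueError(f"unsupported privileges for {grantee}: {sorted(unknown)}")
        statements.append(f"GRANT {', '.join(privileges)} ON {table} TO {grantee}")
    return statements
```

For the auditor row this yields `GRANT SELECT ON orders TO auditor`: view-only access with no right to add, modify, or delete records.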

Roles

A role is effectively a group of privileges granted to run an application. An application can contain a number of roles, each granting a specific group of privileges that allows more or fewer capabilities while running the application program. Thus, a role is a set of capabilities that can be granted to a user or group of users. For example:

1. Create Nurses role.

2. Grant all privileges required by the Nurses application to the Nurses role.

3. Grant Nurses role to all Nursing assistants or to a role named Nurses_Assistants.

The advantages of this are multiple:

1. It is easier to grant a role, rather than each privilege separately.

2. You can modify the privileges associated with an application by modifying just the role, without needing to go into each user’s privileges.

3. You can easily review a user’s privileges by reviewing the role.

4. You can determine which users have privileges for an application by using role-level query capabilities in the data dictionary.
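The Nurses example and the advantages above can be modeled in a few lines of Python. This is a hypothetical in-memory model, not Oracle’s data dictionary: the role, table, and user names are invented. A user’s effective privileges are the union of the privileges of every role reachable from the user, including roles granted to other roles, so changing a role’s privileges changes every holder at once.

```python
# Hypothetical role definitions following the Nurses example.
ROLE_PRIVILEGES = {
    "nurses": {("patients", "SELECT"), ("charts", "SELECT"), ("charts", "UPDATE")},
    "nurses_assistants": set(),
}
ROLE_GRANTS = {"nurses_assistants": {"nurses"}}  # step 3: a role granted to a role
USER_ROLES = {"alice": {"nurses_assistants"}, "bob": set()}


def roles_of(user: str) -> set:
    """Every role a user holds, directly or through another role."""
    seen = set()
    pending = list(USER_ROLES.get(user, ()))
    while pending:
        role = pending.pop()
        if role not in seen:  # guards against cyclic role grants
            seen.add(role)
            pending.extend(ROLE_GRANTS.get(role, ()))
    return seen


def effective_privileges(user: str) -> set:
    privileges = set()
    for role in roles_of(user):
        privileges |= ROLE_PRIVILEGES.get(role, set())
    return privileges


def users_with_role(role: str) -> list:
    """Advantage 4: which users hold a role, directly or indirectly."""
    return sorted(u for u in USER_ROLES if role in roles_of(u))
```

Removing `("charts", "UPDATE")` from the `nurses` role immediately removes it from every user who holds that role, which is advantage 2 in action.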

Who Is in Charge of Database Security?

Once again, most of the security issues relating to analysis and design surround the database. For small implementations, the database administrator is usually also responsible for security. However, in larger, more complex organizations with multiple integrated databases, security can be assigned to a separate role called the Security Administrator. The Security Administrator, or whoever is responsible for database security, needs to develop a security policy, which must then be followed during the analysis and design phases of software development.

The Security Administrator must focus on three aspects of security issues, which in turn become part of the analysis/design specification:

1. Data Encryption: Encryption applications are programs that use algorithms to change data so that it cannot be interpreted while it travels between applications. Most encryption is applied to data traveling outside the system, because that is where the data is vulnerable. Thus, data traveling across the Internet has become the most heavily encrypted, since it must be protected against unauthorized access. The question becomes: who is responsible for identifying the points at which data encryption is necessary? Again, there are obvious points, such as a terminal accessing a host over the Internet. But what about encryption between an application client and the database, and between the database and the application server, as shown in Figure 14.20?

While the database analyst/designer is not responsible for encryption per se, it is worthwhile for him/her to be familiar with three encryption alternatives:

RC4: This uses a secret, randomly generated key unique to each session. It is an effective encryption for SQL statements, stored procedure calls, and their results.

DES: Oracle supports the U.S. Data Encryption Standard (DES) algorithm, a standard encryption that is useful in environments that may require backward compatibility with earlier, less robust encryption algorithms.

Triple-DES: Provides a high degree of message security, but with a performance penalty: it may take up to three times as long to encrypt a data block as the standard DES algorithm.

2. Strong Authentication: Strong authentication allows positive identification of the user, which is critical to ensuring confidence in network security. Passwords are the most common method of authentication; strong authentication, by contrast, requires a separate third-party authentication server, which sends requests to the database as depicted in Figure 14.21.

Since authentication will be integrated with the database software, the analyst needs to be engaged in its implementation and to understand how it relates to the design of the entire database system.

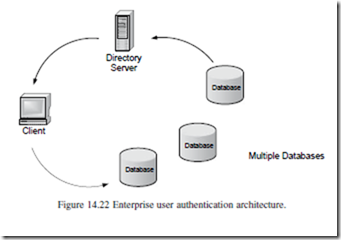

3. Enterprise User Management: This feature stores database users, together with their related administrative and security information, in a central directory server. This is accomplished by having a separate database server authenticate a user by accessing the information stored in the directory (Figure 14.22).

This configuration enables the administrator to modify information in one location and provides a more secure and lower cost solution.

Version Control

Version control can be defined as the tracking and management of changes to information. Version control has become an important database issue, particularly with Internet applications and e-business operations. Internet applications often have user-editable data that is stored in a database, so it is advantageous to provide version control for this stored information in case of a mistake or for audit trail and integrity purposes. An audit trail is an accounting and security process of keeping a “trail” of the changes that are made. The amount of historical change kept in an audit trail can vary, although for the purposes of this book I will confine the trail to the last change made; in other words, the last change is stored for historical tracking. However, audit trail and security are not the only reasons to implement version control. Version control also provides an error-fixing capability. For example, if a user enters a new value in a lookup table and then decides it is in error, version control can allow it to be reset to its original value. This is similar to applications like Microsoft Word, which contain an “undo” function that allows users to go back to an earlier setting.

Database version control is usually implemented by designing what are known as “shadow tables,” which store the last value changed by a user (see Figure 14.23).
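A shadow table that keeps only the last prior value can be sketched with SQLite through Python’s standard `sqlite3` module. The table, trigger, and function names are hypothetical. An `AFTER UPDATE` trigger copies the old row into the shadow table, replacing any earlier version for the same code, so exactly one historical value is kept. Undo reads the shadow value and writes it back; the trigger then shadows the value being undone, so a second undo acts as a redo.

```python
import sqlite3

# Hypothetical lookup table plus a shadow table holding the last prior value.
SCHEMA = """
CREATE TABLE lookup (
    code        TEXT PRIMARY KEY,
    description TEXT NOT NULL
);
CREATE TABLE lookup_shadow (
    code        TEXT PRIMARY KEY,
    description TEXT NOT NULL,
    changed_at  TEXT DEFAULT CURRENT_TIMESTAMP
);
-- On every update, copy the old row into the shadow table (one version per code).
CREATE TRIGGER lookup_keep_last AFTER UPDATE ON lookup
BEGIN
    INSERT OR REPLACE INTO lookup_shadow (code, description)
    VALUES (OLD.code, OLD.description);
END;
"""


def undo_last_change(conn: sqlite3.Connection, code: str) -> bool:
    """Restore the previous value for `code`; return False if none is stored."""
    row = conn.execute(
        "SELECT description FROM lookup_shadow WHERE code = ?", (code,)
    ).fetchone()
    if row is None:
        return False
    conn.execute("UPDATE lookup SET description = ? WHERE code = ?", (row[0], code))
    return True
```

Keeping more than one version would mean dropping the primary key on `lookup_shadow` and appending rows instead, which is exactly the space and performance trade-off discussed next.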

The use of version control obviously creates potential performance problems and space issues because of the expanded number of tables. How many historical values to store also becomes an important decision for analysts, designers, and users. Most important is to understand the trade-offs involved in these decisions and for users and system architects to recognize their impact.

There are also third-party products that provide functions for version control, as well as mathematical formulas that can be used to minimize space needs when implementing shadow tables. Ultimately the best approach is to work closely with users and determine what fields require versions to address user error, and with audit firms to determine the extent of audit trails for security purposes.
