Overview of Analysis Tools: The Concept of the Logical Equivalent

Overview of Analysis Tools

The Concept of the Logical Equivalent

The primary mission of an analyst or systems designer is to extract the physical requirements of the users and convert them to software. All software can trace its roots to a physical act or a physical requirement. A physical act can be defined as something that occurs in the interaction of people; that is, people create the root requirements of most systems, especially those in business. For example, when Mary tells us that she receives invoices from vendors and pays them thirty days later, she is explaining her physical activities during the process of receiving and paying invoices. When the analyst creates a technical specification that represents Mary’s physical requirements, the specification is designed to allow for the translation of her physical needs into an automated environment. We know that software must operate within the confines of a computer, and such systems must function on the basis of logic. The logical solution does not always treat the process using the same procedures employed in the physical world. In other words, the software system implemented to provide the functions that Mary performs physically will probably work differently, and more efficiently, than Mary herself. Software, therefore, can be thought of as a logical equivalent of the physical world. This abstraction, which I call the concept of the logical equivalent (LE), is a process that analysts must use to create effective requirements for a system. The LE can be compared to a schematic of a plan or a diagram of how a technical device works.
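To make the idea concrete, here is a minimal sketch of one possible logical equivalent of Mary’s physical act. It assumes that "pays them thirty days later" means thirty calendar days after receipt; the `Invoice` class and the `invoices_due` function are hypothetical names used only for illustration. Instead of Mary watching a calendar, the software applies a rule to every invoice at once.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Assumption: "thirty days later" means thirty calendar days after receipt.
PAYMENT_TERM_DAYS = 30


@dataclass
class Invoice:
    vendor: str
    amount: float
    received: date

    @property
    def due(self) -> date:
        # The logical rule that replaces Mary's manual tracking of dates.
        return self.received + timedelta(days=PAYMENT_TERM_DAYS)


def invoices_due(invoices, today: date):
    """Return every invoice whose payment date has arrived (hypothetical helper)."""
    return [inv for inv in invoices if inv.due <= today]


invoices = [
    Invoice("Acme", 100.0, date(2024, 1, 1)),   # due 2024-01-31
    Invoice("Baker", 50.0, date(2024, 1, 20)),  # due 2024-02-19
]
paid_today = invoices_due(invoices, date(2024, 1, 31))
```

The software does not "wait thirty days" the way Mary does; it evaluates the same requirement differently, which is exactly what makes it a logical equivalent rather than a copy of the physical procedure.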

Your success in creating a concise and accurate schematic of the software that a programmer needs to develop will be directly proportional to how well you master the concept of the Logical Equivalent. Very often, analysts develop requirements using various methods that do not always provide a basis for consistency, reconciliation and maintenance. There is usually far too much prose, as opposed to the specific diagramming standards that engineers employ. After all, we are engineering a system through the development of software applications. The most critical step in obtaining the LE is understanding the process of Functional Decomposition. Functional Decomposition is the process of finding the most basic parts of a system, much like defining all the parts of a car so that it can be built. Building the car would be possible not from looking at a picture of it, but only from a schematic of all its functionally decomposed parts. Developing and engineering software is no different.
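Functional decomposition can be pictured as a tree that is broken down until no part has sub-parts left. The sketch below uses a hypothetical, greatly simplified car. Each entry maps a part to its components, and `basic_parts` keeps decomposing until it reaches parts that cannot be broken down further.

```python
# Hypothetical, simplified decomposition of a car: part -> list of sub-parts.
CAR = {
    "car": ["engine", "chassis"],
    "engine": ["block", "pistons", "spark plugs"],
    "chassis": ["frame", "wheels"],
}


def basic_parts(part, tree):
    """Recursively decompose `part` until only parts with no sub-parts remain."""
    children = tree.get(part, [])
    if not children:
        return [part]  # cannot be decomposed further: a most basic part
    parts = []
    for child in children:
        parts.extend(basic_parts(child, tree))
    return parts


print(basic_parts("car", CAR))
# ['block', 'pistons', 'spark plugs', 'frame', 'wheels']
```

The intermediate parts ("engine", "chassis") explain how the answer was reached, but only the leaves are the schematic a builder works from.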

Below is an example of an analogous process using functional decomposition, with its application to the LE:

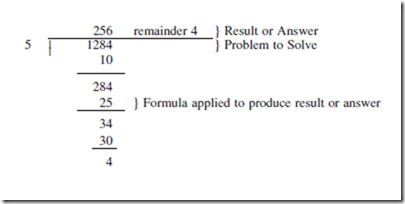

A number of modeling tools can be used to obtain the physical information from the user. Each tool provides a specific function to derive the LE. The word “derive” has special meaning here. It relates to the process of long division, the process or formula we apply when dividing one number by another. Consider the following example:

The above example shows the formula that is applied to a division problem. We call this formula long division. It provides the answer, and if we change any portion of the problem, we simply re-apply the formula and generate a new result. Most important, once we have obtained the answer, the formula steps serve only as documentation. That is, if someone questioned the validity of the result, we could show them the formula to prove that the answer was correct (based on the input).
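The point about steps as documentation can be sketched in code. The function below is a plain schoolbook long division (our own illustration, limited to non-negative dividends and positive divisors) that returns the answer together with the intermediate steps. The steps are not needed to use the quotient, but they prove how it was obtained, and changing the input simply means calling the function again.

```python
def long_division(dividend: int, divisor: int):
    """Schoolbook long division; returns (quotient, remainder, steps)."""
    if dividend < 0 or divisor <= 0:
        raise ValueError("this sketch handles non-negative dividends and positive divisors")
    quotient_digits = []
    steps = []  # the "formula" record: documentation only, not part of the answer
    remainder = 0
    for digit in str(dividend):
        remainder = remainder * 10 + int(digit)  # bring down the next digit
        q = remainder // divisor
        steps.append(f"{remainder} / {divisor} = {q}, remainder {remainder - q * divisor}")
        remainder -= q * divisor
        quotient_digits.append(str(q))
    return int("".join(quotient_digits)), remainder, steps


quotient, remainder, steps = long_division(1234, 5)
# quotient == 246, remainder == 4; `steps` shows how the answer was derived.
# If the problem changes, re-apply the formula: long_division(1239, 5)
```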

Now let us apply the long-division approach to obtaining the LE via functional decomposition. The following is the result of an interview with Joe, a bookkeeper, about his physical procedure for handling bounced checks.

Joe the bookkeeper receives bounced checks from the bank. He fills out a Balance Correction Form and forwards it to the Correction Department so that the outstanding balance can be corrected. Joe sends a bounced check letter to the customer requesting a replacement check plus a $15.00 penalty (this is now included as part of the outstanding balance). Bounced checks are never re-deposited.

The appropriate modeling tool to use in this situation is a Data Flow Diagram (DFD). A DFD is a tool that shows how data enters and leaves a particular process. The process we are looking at with Joe is the handling of the bounced check. A DFD has four possible components: the process, the data flow, the data store and the external entity (or simply, the external).
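One way to make the four components tangible is to model them as data. The sketch below is a hypothetical representation, not the notation of any CASE tool. Externals, processes and data stores become nodes, and data flows connect them. The check in `DataFlow` enforces a common DFD convention: every flow must start or end at a process. Flow labels that do not appear in Joe’s interview (such as "Balance Update") are assumptions.

```python
from dataclasses import dataclass
from enum import Enum


class Kind(Enum):
    EXTERNAL = "external"      # e.g. the Bank: outside the system
    PROCESS = "process"        # e.g. 1 Handling Bounced Checks
    DATA_STORE = "data store"  # e.g. the Account Master file


@dataclass(frozen=True)
class Node:
    name: str
    kind: Kind


@dataclass(frozen=True)
class DataFlow:
    label: str
    source: Node
    target: Node

    def __post_init__(self):
        # Common DFD convention: externals and data stores never connect directly.
        if Kind.PROCESS not in (self.source.kind, self.target.kind):
            raise ValueError(f"flow '{self.label}' does not touch a process")


def inputs(node, flows):
    return [f.label for f in flows if f.target == node]


def outputs(node, flows):
    return [f.label for f in flows if f.source == node]


bank = Node("Bank", Kind.EXTERNAL)
correction = Node("Correction Department", Kind.EXTERNAL)
customers = Node("Customers", Kind.EXTERNAL)
account_master = Node("Account Master", Kind.DATA_STORE)
p1 = Node("1 Handling Bounced Checks", Kind.PROCESS)

# A simplified reading of the Level 1 diagram.
level1 = [
    DataFlow("Bounced Check", bank, p1),
    DataFlow("Balance Update", p1, account_master),  # hypothetical label
    DataFlow("Balance Correction Form", p1, correction),
    DataFlow("Bounced Check Letter", p1, customers),
]
```

Counting `inputs(p1, level1)` and `outputs(p1, level1)` gives one input and three outputs, which is why the diagram invites further leveling.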

Now let us draw the LE of Joe’s procedure using DFD tools:

Figure 4.1 Data flow diagram for handling bounced checks.

The above DFD in Figure 4.1 shows that bounced checks arrive from the bank, the Account Master file is updated, the Correction Department is informed and Customers receive a letter. The Bank, Correction Department and Customers are considered “outside” the system and are therefore represented logically as Externals. This diagram is considered to be at the first level, or “Level 1,” of functional decomposition. You will find that all modeling tools employ a method to functionally decompose. DFDs use a method called “Leveling.”

The question is whether we have reached the most basic parts of this process or whether we should level further. Many analysts suggest that a fully decomposed DFD should have only one data flow input and one data flow output. Our diagram currently has many inputs and outputs, and therefore it can be leveled further. The result of functionally decomposing to the second level (Level 2) is shown in Figure 4.2.

Figure 4.2 Level 2 data flow diagram for handling bounced checks.

Notice that the functional decomposition shows us that Process 1: Handling Bounced Checks is really made up of two sub-processes called 1.1 Update Balance and 1.2 Send Letter. The box surrounding the two processes within the Externals reflects them as components of the previous, or parent, level. The double-sided arrow in Level 1 is now broken down into two separate arrows going in different directions because it is used to connect Processes 1.1 and 1.2. The new level is more functionally decomposed and a better representation of the LE. Once again we must ask ourselves whether Level 2 can be further decomposed. The answer is yes. Process 1.1 has one input and two outputs. On the other hand, Process 1.2 has one input and one output and is therefore complete.

Process 1.2 is said to be a Functional Primitive, a process that cannot be decomposed further. Therefore, only 1.1 will be decomposed.
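The one-input, one-output test for a Functional Primitive is mechanical enough to sketch. Figure 4.2 is not reproduced here, so the flow list below is an assumed reading chosen only to match the counts stated in the text. The label marked "hypothetical" is invented. The optional `connectivity_only` parameter mirrors how the text later excludes a flow that exists only to show connectivity.

```python
# Each flow is (label, source, target). An assumed reading of Level 2 that
# matches the text: 1.1 has one input and two outputs, 1.2 has one of each.
LEVEL_2 = [
    ("Bounced Check", "Bank", "1.1"),
    ("Balance Correction Form", "1.1", "Correction Department"),
    ("Check Details (hypothetical)", "1.1", "1.2"),
    ("Bounced Check Letter", "1.2", "Customers"),
]


def is_functional_primitive(process, flows, connectivity_only=()):
    """True when `process` has exactly one input and one output flow.

    Flows whose labels appear in `connectivity_only` are ignored.
    """
    counted = [f for f in flows if f[0] not in connectivity_only]
    n_in = sum(1 for _, _, target in counted if target == process)
    n_out = sum(1 for _, source, _ in counted if source == process)
    return n_in == 1 and n_out == 1


# 1.1 must be leveled further; 1.2 is complete.
needs_leveling = [p for p in ("1.1", "1.2") if not is_functional_primitive(p, LEVEL_2)]
```

The rule is a heuristic ("many analysts suggest"), so a tool built this way should report candidates for further leveling rather than decide on its own.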

Let us decompose Process 1.1, as shown in Figure 4.3:

Figure 4.3 Level 3 data flow diagram for handling bounced checks.

Process 1.1 is now broken down into two sub-processes: 1.1.1 Update Account Master and 1.1.2 Inform Correction Department. Process 1.1.2 is a Functional Primitive since it has one input and one output. Process 1.1.1 is also considered a Functional Primitive because the “Bounced Check Packet” flow between the two processes is used to show connectivity only. Functional decomposition is at Level 3 and is now complete.

The result of functional decomposition is the following DFD in Figure 4.4:

Figure 4.4 Functionally decomposed level-3 data flow diagram for handling bounced checks.

As in long division, only the complete result, represented above, is used as the answer. The preceding steps are formulas that we use to get to the lowest, simplest representation of the logical equivalent. Levels 1, 2 and 3 are used only for documentation of how the final DFD was determined.
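The relationship between the documented levels and the final answer can also be read from the process numbers. In the sketch below, the process IDs and names come from Figures 4.1 through 4.3 as described in the text. The only assumption is the numbering convention itself: a child’s ID is its parent’s ID followed by ".n". A process belongs to the final DFD when no other process decomposes it.

```python
# Process IDs and names from Levels 1, 2 and 3 of the bounced-check DFD.
LEVELS = {
    "1": "Handling Bounced Checks",
    "1.1": "Update Balance",
    "1.2": "Send Letter",
    "1.1.1": "Update Account Master",
    "1.1.2": "Inform Correction Department",
}


def final_processes(levels):
    """Keep only processes that no other process decomposes (the 'answer')."""
    return {
        pid: name
        for pid, name in levels.items()
        if not any(other.startswith(pid + ".") for other in levels)
    }


answer = final_processes(LEVELS)
# {'1.2': 'Send Letter', '1.1.1': 'Update Account Master',
#  '1.1.2': 'Inform Correction Department'}
```

Processes 1 and 1.1 drop out of the answer but stay in `LEVELS` as the documentation of how it was derived, just like the intermediate steps of long division.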

The logical equivalent is an excellent method that allows analysts and systems designers to organize information obtained from users and to systematically derive the most fundamental representation of their process. It also alleviates unnecessary pressure to immediately understand the detailed flows and provides documentation of how the final schematic was developed.

Tools of Structured Analysis

Now that we have established the importance and goals of the logical equivalent, we can turn to a discussion of the methods available to assist the analyst. These methods serve as the tools to create the best models in any given situation, and thus the most exact logical equivalent. The tools of the analyst are something like those of a surgeon, who uses only the most appropriate instruments during an operation. It is important to understand that the surgeon is sometimes faced with choices about which surgical instruments to use; particularly with new procedures, there is sometimes disagreement among surgeons about which instruments are the most effective. The choice of tools for analysis and data processing is no different; indeed, it can vary more and be more confusing. The medical profession, like many others, is governed by its own ruling bodies. The American Medical Association and the American College of Physicians and Surgeons, as well as state and federal regulators, represent a source of standards for surgeons. Such a controlling body does not exist in the data processing industry, nor does it appear likely that one will arise in the near future. Thus, the industry has tried to standardize among its own leaders. The result of such efforts has usually been that the most dominant companies and organizations create standards with which others are forced to comply. For example, Microsoft has established itself as an industry leader by virtue of its software domination. Here, Might is Right!

Since there are no real formal standards in the industry, the analysis tools discussed here will be presented on the basis of both their advantages and their shortcomings. It is important then to recognize that no analysis tool (or methodology for that matter) can do the entire job, nor is any perfect at what it does. To determine the appropriate tool, analysts must fully understand the environment, the technical expertise of users and the time constraints imposed on the project. By “environment” we mean the existing system and technology, computer operations, and the logistics—both technically and geographically—of the new system. The treatment of the user interface should remain consistent with the guidelines discussed in Chapter 3.

The problem of time constraints is perhaps the most critical of all. The tools you would ideally like to apply to a project may not fit the time frame allotted. What happens, then, if there is not enough time? The analyst is now faced with selecting a second-choice tool that undoubtedly will not be as effective as the first one would have been. There is also the question of how tools are implemented, that is, can a hybrid of a tool be used when time constraints prevent full implementation of the desired tool?

Making Changes and Modifications

Within the subject of analysis tools is the component of maintenance modeling, or how to apply modeling tools when making changes or enhancements to an existing product. Maintenance modeling falls into two categories:

1. Pre-Modeled: where the existing system already has models that can be used to effect the new changes to the software.

2. Legacy System: where the existing system has never been modeled; any new modeling will therefore be incorporating analysis tools for the first time.

Pre-Modeled

Simply put, a Pre-Modeled product is already in a structured format. A structured format is one that employs a specific modeling notation and methodology, such as the data flow diagram.

The most challenging aspects of changing Pre-Modeled tools are:

1. keeping them consistent with their prior versions, and

2. implementing a version control system that provides an audit trail of the analysis changes and how they differ from the previous versions. Many professionals in the industry call this Version Control; however, care should be taken to specify whether the version control is used for the maintenance of analysis tools. Unfortunately, the term Version Control is also used in other contexts, most notably in the tracking of program versions and software documentation. For these cases, special products exist in the market that provide automated “version control” features. We are not concerned here with these products but rather with the procedures and processes that allow us to incorporate changes without losing the prior analysis documentation. This kind of procedure is consistent with the long division example, in which each time the values change, we simply re-apply the formula (methodology) to calculate the new answer. Analysis version control must therefore have the ability to take the modifications made to the software and integrate them with all the existing models as necessary.
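The core of an analysis audit trail is that no saved version is ever overwritten and that any two versions can be compared. The sketch below assumes a model can be reduced to a dictionary of element names and definitions. `AnalysisHistory` is a hypothetical class, not a feature of any CASE product, and the element definitions in the usage lines are invented.

```python
from datetime import date


class AnalysisHistory:
    """Hypothetical audit trail for analysis models: each saved version is kept intact."""

    def __init__(self):
        self._versions = {}  # version label -> (date saved, {element: definition})

    def save(self, version, saved_on, elements):
        if version in self._versions:
            raise ValueError(f"version {version} already recorded")  # never overwrite history
        self._versions[version] = (saved_on, dict(elements))  # copy so later edits cannot alter it

    def diff(self, old, new):
        """Describe how version `new` differs from version `old`."""
        before = self._versions[old][1]
        after = self._versions[new][1]
        return {
            "added": sorted(after.keys() - before.keys()),
            "removed": sorted(before.keys() - after.keys()),
            "changed": sorted(k for k in before.keys() & after.keys() if before[k] != after[k]),
        }


history = AnalysisHistory()
history.save("1.0", date(2024, 1, 15), {"Customer Name": "char 30", "Balance": "numeric 9,2"})
history.save("1.1", date(2024, 3, 1),
             {"Customer Name": "char 40", "Balance": "numeric 9,2", "Penalty": "numeric 5,2"})
changes = history.diff("1.0", "1.1")
```

Because earlier versions remain intact, the analyst can always show how the current model was reached, in the same way that the long-division steps remain available after the answer is known.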

Being Consistent

It is difficult to change modeling methods and/or CASE tools in the middle of the life cycle of a software product. One of our main objectives, then, is to try to avoid doing so. How? Of course, the simple answer is to select the right tools and CASE software the first time. However, we all make mistakes, and more importantly, new developments in systems architecture may make a new CASE product attractive. You would be wise to foresee this possibility and prepare for inconsistent tool implementation. The best offense here is to:

• ensure that your CASE product has the ability to transport models through an ASCII file or cut/paste method. Many have interfaces via an “export” function. Here, at least, the analyst can possibly convert the diagrams and data elements to another product.

• keep a set of diagrams and elements that can be used to establish a link going forward, that is, a set of manual information that can be re-entered into another tool. This may be accomplished by simply having printed documentation of the diagrams; however, experience has shown that it is difficult to keep such information up to date. Therefore, the analyst should ensure that there is a procedure for printing the most current diagrams and data elements.
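The export idea can be illustrated with plain ASCII text. The sketch below writes data elements to CSV with Python’s standard `csv` module and reads them back, the kind of neutral file another product could import. The two-column layout (`name`, `description`) is an assumption. Real CASE tools define their own import formats, which the analyst would need to match.

```python
import csv
import io


def export_elements(elements, stream):
    """Write data elements (name -> description) as plain CSV text."""
    writer = csv.writer(stream)
    writer.writerow(["name", "description"])  # assumed header layout
    for name, description in sorted(elements.items()):
        writer.writerow([name, description])


def import_elements(stream):
    """Read the CSV back into a dictionary, as a receiving tool might."""
    return {row["name"]: row["description"] for row in csv.DictReader(stream)}


elements = {
    "Customer Name": "Name of the account holder",
    "Balance": "Outstanding balance, including penalties",
}
buffer = io.StringIO()  # a real export would use open(path, "w", newline="", encoding="ascii")
export_elements(elements, buffer)
buffer.seek(0)
restored = import_elements(buffer)
```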

Should the organization decide to use different tools, e.g., process-dependency diagrams instead of data flow diagrams, or a different methodology such as the crow’s-foot method in entity-relationship diagramming, then the analyst must implement a certain amount of reengineering. This means mapping the new modeling tools to the existing ones to ensure consistency and accuracy. This is no easy task, and it is strongly suggested that you document the diagrams so you can reconcile them.
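Reconciling two notations comes down to checking a mapping in both directions. In the minimal sketch below, `reconcile` is a hypothetical helper. It reports old elements with no counterpart, and mappings that point at something missing from the new model. The old DFD process names come from the bounced-check example. The new-model names and the deliberately mistyped mapping target are invented.

```python
def reconcile(old_elements, mapping, new_elements):
    """Return (unmapped, dangling) lists for a model-to-model mapping."""
    # Old elements nobody has mapped yet: analysis that could be lost in the move.
    unmapped = sorted(e for e in old_elements if e not in mapping)
    # Mappings whose target does not exist in the new model: likely errors.
    dangling = sorted(old for old, new in mapping.items() if new not in new_elements)
    return unmapped, dangling


old_dfd = {
    "1.1.1 Update Account Master",
    "1.1.2 Inform Correction Department",
    "1.2 Send Letter",
}
new_model = {"Update Account Master", "Inform Correction Department"}
mapping = {
    "1.1.1 Update Account Master": "Update Account Master",
    "1.1.2 Inform Correction Department": "Inform Correction Dept.",  # mistyped target
}
unmapped, dangling = reconcile(old_dfd, mapping, new_model)
```

An empty result for both lists is the signal that the new diagrams account for everything in the old ones.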

Version Control

This book is not intended to focus on the generic aspects of version control; however, structured methods must have an audit trail. When a process is changed, a directory should be created for the previous version. The directory name typically consists of the product name, version and date, such as xyz1.21295, where xyz is the name of the product or program, 1.2 the version and 1295 the version date. In this way, previous versions can be easily re-created or viewed. Of course, saving a complete set of each version may not be feasible or may be too expensive (in terms of disk space, etc.). In these situations, it is advisable to back up the previous version in such a manner as to allow for easy restoration. In any case, a process must exist, and it is crucial that there be a procedure to do backups periodically.
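The naming scheme can be automated so that every archived version is named the same way. The sketch below assumes the date portion "1295" is a two-digit month followed by a two-digit year. The text does not spell this out, so treat it as an assumption. Both function names are hypothetical. The copy uses `shutil.copytree`, which refuses to overwrite an existing directory and so protects earlier versions.

```python
import shutil
from datetime import date
from pathlib import Path


def version_dir_name(product: str, version: str, when: date) -> str:
    """Build a name such as 'xyz1.21295': product + version + MMYY (assumed date layout)."""
    return f"{product}{version}{when:%m%y}"


def archive_version(model_dir: Path, archive_root: Path,
                    product: str, version: str, when: date) -> Path:
    """Copy the current model directory into its version directory before changing it."""
    target = archive_root / version_dir_name(product, version, when)
    shutil.copytree(model_dir, target)  # raises FileExistsError rather than overwrite
    return target


# Example: version_dir_name("xyz", "1.2", date(1995, 12, 1)) produces "xyz1.21295"
```

Two-digit years are ambiguous across centuries; a four-digit year avoids that at the cost of departing from the example name.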

Legacy Systems

Legacy systems usually reside on mainframe computers and were developed using 3GL (third-generation language) software applications, the most typical being COBOL. Unfortunately, few of these systems were developed using structured tools. Without question, these are the systems most commonly undergoing change today in many organizations. All software is composed of two fundamental components: processes and data. Processes are the actual logic and algorithms required by the system. Data, on the other hand, represent the information that the processes store and use. The question for the analyst is how to obtain the equivalent processes and data from the legacy system. Short of considering a complete rewrite, there are two basic approaches: the data approach and the process approach.

The Data Approach

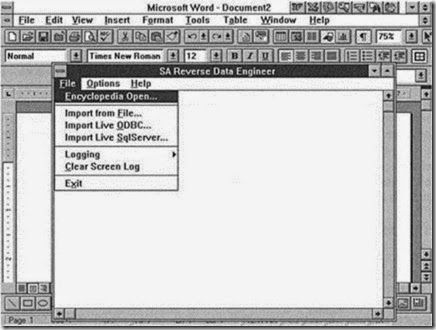

Conversion of legacy systems for purposes of structured changes requires the modeling of the existing programs to allow for the use of analysis tools. The first step in this endeavor is the modeling of the existing files and their related data structures. Many 3GL applications do not store their data in a relational or other database format. Whether or not they do, it is necessary to collect all of the data elements that exist in the system. To accomplish this, the analyst will typically need a conversion program that will collect the elements and import them into a CASE tool. If the data are stored in a relational or other database system, this can be handled by many CASE products via a process known as reverse engineering. Here, the CASE product allows the analyst to select the incoming database, and the product then automatically imports the data elements. It is important to note that these reverse-engineering features require that the incoming database be SQL (Structured Query Language) compatible. Should the incoming data file be in a non-database format (e.g., a flat file, a plain sequential file with no database structure), then a conversion program will need to be used. Once again, most CASE tools have features that list the required input data format.
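Reverse engineering works because an SQL-compatible database describes its own structure in a catalog. The sketch below uses SQLite through Python’s standard `sqlite3` module as a stand-in for "an SQL-compatible database". It reads the table list from `sqlite_master` and the columns from `PRAGMA table_info`. Other database products expose their catalogs differently, and a CASE tool performs this step through its own import feature. The `account_master` table is hypothetical.

```python
import sqlite3


def reverse_engineer(conn):
    """Return {table: [(column, declared_type), ...]} for every user table."""
    elements = {}
    tables = conn.execute(
        "SELECT name FROM sqlite_master "
        "WHERE type = 'table' AND name NOT LIKE 'sqlite_%' ORDER BY name"
    ).fetchall()
    for (table,) in tables:
        # Each PRAGMA table_info row is (cid, name, type, notnull, dflt_value, pk).
        columns = conn.execute(f'PRAGMA table_info("{table}")').fetchall()
        elements[table] = [(col[1], col[2]) for col in columns]
    return elements


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE account_master ("
    "account_no INTEGER PRIMARY KEY, customer_name TEXT, balance NUMERIC)"
)
catalog = reverse_engineer(conn)
```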

Figure 4.5 is a sample of the reverse-engineering capabilities of the System Architect product by Popkin Software and Systems.

Figure 4.5 Reverse engineering using System Architect.

Once the data have been loaded into the CASE product, the various data models can be constructed. This process will be discussed further in Chapter 8.

Although we have shown above a tool for loading the data, the question still remains: How do we find all the data elements? Using COBOL as an example, one could take the following steps:

1. Identify all of the File Description (FD) tables in the COBOL application programs. Should they exist in an external software product like Librarian, the analyst can import the tables from that source. Otherwise, the actual file tables will need to be extracted and converted from the COBOL application program source. This procedure is not difficult; it is simply another step that typically requires conversion utilities.

2. Scan all Working Storage Sections (see Figure 4.6) for data elements that are not defined in the File Description Section. This occurs very often when COBOL programmers do not define the actual elements until they are read into a Working Storage Section of the program. This situation, of course, requires further research, but it can be detected by scanning the code.

3. Look for other data elements defined in Working Storage that should be stored as permanent data. Although this can be a difficult task, the worst-case scenario would involve storing redundant data. Redundant data is discussed further in Chapter 5.

4. Once the candidate elements have been discovered, ensure that appropriate descriptions are added. This process allows the analyst to actually start defining elements so that decisions can be made during logical data modeling (Chapter 6).

Figure 4.6 Working Storage Section of a COBOL program.
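Steps 1 and 2 can be supported by a "quick and dirty" scanner. The sketch below collects elementary items, meaning entries with a PIC clause, from the FILE SECTION and the WORKING-STORAGE SECTION of COBOL source. It then lists the Working Storage items not defined in the FD tables. It assumes source without sequence numbers in columns 1 to 6, and one data entry per line, and it ignores COPY members. The function names and the sample program are hypothetical. Its output is a list of candidates for the research described above, not a final answer.

```python
import re

# A data description entry: two-digit level number, data name, rest of line.
ENTRY = re.compile(r"^\s*(\d{2})\s+([A-Z0-9][A-Z0-9-]*)(.*)$", re.IGNORECASE)
PIC_CLAUSE = re.compile(r"\bPIC(?:TURE)?\s", re.IGNORECASE)


def scan_data_elements(source):
    """Collect elementary items from the FILE and WORKING-STORAGE sections."""
    found = {"FILE": [], "WORKING-STORAGE": []}
    section = None
    for line in source.splitlines():
        text = line.strip().upper()
        if text.startswith("FILE SECTION"):
            section = "FILE"
        elif text.startswith("WORKING-STORAGE SECTION"):
            section = "WORKING-STORAGE"
        elif text.endswith("SECTION.") or text.startswith("PROCEDURE DIVISION"):
            section = None  # any other section ends the scan of data items
        elif section:
            match = ENTRY.match(line)
            if match and PIC_CLAUSE.search(match.group(3)):
                found[section].append(match.group(2).upper())
    return found


def working_storage_only(found):
    """Step 2 candidates: defined in Working Storage but not in the FD tables."""
    fd_names = set(found["FILE"])
    return [name for name in found["WORKING-STORAGE"] if name not in fd_names]


SAMPLE = """
       DATA DIVISION.
       FILE SECTION.
       FD  PAYROLL-FILE.
       01  PAYROLL-REC.
           05  EMP-NAME        PIC X(30).
           05  EMP-SALARY      PIC 9(7)V99.
       WORKING-STORAGE SECTION.
       01  WS-WORK-AREAS.
           05  WS-TAX-RATE     PIC V999.
           05  WS-YTD-GROSS    PIC 9(9)V99.
       PROCEDURE DIVISION.
"""
elements = scan_data_elements(SAMPLE)
candidates = working_storage_only(elements)
```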

The Process Approach

The most effective way to model existing programs is the most direct way, that is, the old-fashioned way: start reading and analyzing the code. Although this approach may seem very crude, it is actually tremendously effective and productive. Almost all programming languages contain enough structure to allow for identification of the input and output of files and reports. By identifying the data and reports, as in Figure 4.7, the analyst can establish a good data flow diagram.

Figure 4.7 Input data and report layouts of a COBOL program.

The above example shows two File Description (FD) tables defined in a COBOL program. The first table defines the input file layout of a payroll record, and the second is the layout of the output payroll report. This is translated into a DFD as shown in Figure 4.8.

Figure 4.8 Translated COBOL file descriptions into a data flow diagram.

In addition, depending on the programming language, many input and output data and reports must be defined in the application code in specific sections of the program. Such is true in COBOL and many other 3GL products. Furthermore, many 4GL products force input and output verbs to be used when databases are manipulated. This is very relevant to any products that use embedded SQL to do input and output. The main point here is that you can produce various utility programs (“quick & dirty,” as they call them) to enhance the accuracy and speed of identifying an existing program’s input and output processing.
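As an example of such a quick and dirty utility, the sketch below classifies the files named in COBOL OPEN statements as DFD inputs or outputs. INPUT files become flows into the process. OUTPUT and EXTEND files become flows out. I-O files produce a flow in each direction. It assumes every OPEN statement ends with a period, and it would wrongly record trailing phrases such as WITH NO REWIND as file names. A real utility would need a proper COBOL parser. The function name and the sample source are hypothetical.

```python
import re

# OPEN used as a verb (not inside a hyphenated name), up to the terminating period.
OPEN_STATEMENT = re.compile(r"(?<![\w-])OPEN(?![\w-])(.*?)\.", re.IGNORECASE | re.DOTALL)


def file_flows(source):
    """Return the files a COBOL program opens for input and for output."""
    flows = {"input": set(), "output": set()}
    for clause in OPEN_STATEMENT.findall(source):
        modes = ()
        for token in clause.upper().split():
            if token == "INPUT":
                modes = ("input",)
            elif token in ("OUTPUT", "EXTEND"):
                modes = ("output",)
            elif token == "I-O":
                modes = ("input", "output")  # read and rewritten: a flow each way
            else:
                for mode in modes:
                    flows[mode].add(token)
    return flows


SOURCE = """
       PROCEDURE DIVISION.
           OPEN INPUT PAYROLL-FILE
                OUTPUT PAYROLL-REPORT.
           OPEN I-O ACCOUNT-MASTER.
"""
flows = file_flows(SOURCE)
```

The same idea carries over to embedded SQL, where the verbs themselves (for example SELECT versus INSERT or UPDATE) indicate the direction of each flow.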

Once the flows in the data flow diagram are complete, the next step is to define the actual program logic, or what is typically called the process specifications (discussed later in this chapter). Once again, there is no shortcut: the analyst must go through the code program by program. Some third-party software tools on the market can provide assistance, but these tend to be very programming-language specific. Although the manual method may seem overwhelming, it can be accomplished successfully and will often provide great long-term benefits. Many companies will bring in part-time college interns and other clerical assistance to get this done. It should be noted that a CASE tool is almost always required to provide a place to document logic. This is especially important if the CASE tool will be used to generate actual program code.

Specification Formats

An ongoing challenge for many IS and software firms is determining what a good specification is. Many books written about analysis tools tend to avoid answering this question directly. When addressing the quality of a process specification, we must ultimately accept that a specification can no longer be exclusively graphical. Although Yourdon and DeMarco argue that structured tools need to be graphical, the essence of a specification is its ability to define the algorithms of the application program itself. It is therefore impossible to avoid writing a form of pseudocode. Pseudocode is essentially a generic representation of how the real code must execute. Ultimately, the analyst must be capable of developing clear and technically correct algorithms.

Figure 4.9 Types of process specifications based on user computer literacy.

Figure 4.10 Sample Business Specification.

The methods and styles of process specifications are outlined below. The level of technical complexity of these specifications varies based on a number of issues:

1. the technical competence of the analyst. Ultimately, the analyst must have the ability to write algorithms to be most effective.

2. the complexity of the specification itself. The size and scope of specifications will vary, and as a result so will the organization of the requirements.

3. the type of specifications required. There are really two levels of specifications: business and programming. The business specification is very prose-oriented and is targeted for review by a non-technical user. Its main purpose is to gain confirmation from the user community prior to investment in the more specific and technical programming specification. The programming specification, on the other hand, needs to contain the technical definition of the algorithms and is targeted for technical designers and programmers.

4. the competence of the programming staff as well as the confidence that the analyst has in that staff. The size and capabilities of the programming resources will usually determine how much emphasis the analyst must place on the specifics and quality of a process specification.

Figure 4.11 Sample Programming Specification.

Although the alternative techniques for developing a process specification are discussed further in Chapters 5 and 14, the chart in Figure 4.9 should put into perspective the approach to deciding the best format and style of communication. It is important to understand that overlooking the process specification and its significance to the success of the project can have catastrophic effects on the entire development effort. Figure 4.9 reflects the type of specification suggested based on the technical skills of the users.

Figures 4.10 and 4.11 are sample business and programming specifications, respectively, that depict the flow from a user specification to the detailed program logic.
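Figures 4.10 and 4.11 are not reproduced here. As a hypothetical illustration of the programming level, Joe’s bounced-check procedure can be written as an algorithm. Python stands in for pseudocode. The $15.00 penalty and the rule against re-depositing come from Joe’s interview. The `Account` structure, the function name and the assumption that reversing the bounced payment adds the check amount back to the outstanding balance are illustrative choices, not taken from the figures.

```python
from dataclasses import dataclass
from decimal import Decimal

PENALTY = Decimal("15.00")  # stated in Joe's interview


@dataclass
class Account:
    customer: str
    balance: Decimal  # outstanding balance


def handle_bounced_check(account: Account, check_amount: Decimal):
    """Sketch of Joe's procedure; returns the correction form and the letter."""
    # Assumption: the bounced payment is reversed, and the penalty becomes
    # part of the outstanding balance.
    adjustment = check_amount + PENALTY
    account.balance += adjustment
    correction_form = {"customer": account.customer, "adjustment": adjustment}
    letter = (
        f"Dear {account.customer}: your check for ${check_amount} was returned. "
        f"Please send a replacement check plus a ${PENALTY} penalty."
    )
    # Bounced checks are never re-deposited, so there is deliberately no re-deposit step.
    return correction_form, letter


account = Account("Smith", Decimal("0.00"))
form, letter = handle_bounced_check(account, Decimal("100.00"))
```

A business specification would state the same rules in prose for Joe to confirm. The programming version makes the order of operations and the arithmetic explicit, which is the level of detail a programmer needs.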

Problems and Exercises

1. Describe the concept of the logical equivalent as it relates to defining the requirements of a system.

2. What is the purpose of functional decomposition? How is the leveling of a DFD consistent with this concept?

3. How does Long Division depict the procedures of decomposition? Explain.

4. What is a legacy system?

5. Processes and data represent the two components of any software application. Explain the alternative approaches to obtaining process and data information from a legacy system.

6. What is reverse engineering? How can it be used to build a new system?

7. Why is analysis version control important?

8. Explain the procedures for developing a DFD from an existing program.

9. What is the purpose of a process specification? Explain.

10. Outline the differences between a business specification and a programming specification. What is their relationship, if any?
